
Tachyon Basics 09: Running Spark on Tachyon

I. Configure the Scala environment

1. Test environment

    Node1  192.168.1.1  master
    Node2  192.168.1.2  worker
    Node3  192.168.1.3  worker

2. Check the Java environment (on every node)

    # java -version
    java version "1.7.0_05"
    Java(TM) SE Runtime Environment (build 1.7.0_05-b05)
    Java HotSpot(TM) 64-Bit Server VM (build 23.1-b03, mixed mode)

3. Download and extract Scala

    [root@node1 soft]# tar -zxf scala-2.11.2.tgz -C /usr/local/
    [root@node1 soft]# ln -s /usr/local/scala-2.11.2/ /usr/local/scala

4. Configure the Scala environment variables

    [root@node1 soft]# cat /etc/profile.d/scala.sh
    SCALA_HOME=/usr/local/scala
    PATH=$SCALA_HOME/bin:$PATH
    [root@node1 soft]# . /etc/profile.d/scala.sh

5. Test the Scala environment

    [root@node1 soft]# scala -version
    Scala code runner version 2.11.2 -- Copyright 2002-2013, LAMP/EPFL

6. Deploy the Scala environment to the other nodes

    [root@node1 soft]# scp -r /usr/local/scala-2.11.2/ node2:/usr/local/
    [root@node1 soft]# scp -r /usr/local/scala-2.11.2/ node3:/usr/local/
    [root@node1 soft]# ssh node2 ln -s /usr/local/scala-2.11.2/ /usr/local/scala
    [root@node1 soft]# ssh node3 ln -s /usr/local/scala-2.11.2/ /usr/local/scala
    [root@node1 soft]# scp /etc/profile.d/scala.sh node2:/etc/profile.d/
    scala.sh                                  100%   55     0.1KB/s   00:00
    [root@node1 soft]# scp /etc/profile.d/scala.sh node3:/etc/profile.d/
    scala.sh                                  100%   55     0.1KB/s   00:00
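The per-node commands above repeat the same three steps (copy the install, create the symlink, copy the profile script), so they could be driven by a loop. The following is only a minimal sketch: the names `run`, `deploy_scala`, and the `DRY_RUN` switch are hypothetical and not part of the original walkthrough, and it assumes root can SSH between nodes as the transcript implies. By default it only prints the commands it would run.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): repeat the
# copy / symlink / profile steps for each worker node.
# DRY_RUN=1 (the default here) prints commands instead of running them.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

deploy_scala() {
    for node in "$@"; do
        run scp -r /usr/local/scala-2.11.2/ "$node:/usr/local/"
        run ssh "$node" ln -s /usr/local/scala-2.11.2/ /usr/local/scala
        run scp /etc/profile.d/scala.sh "$node:/etc/profile.d/"
    done
}

deploy_scala node2 node3
```

Setting `DRY_RUN=0` would execute the commands for real; reviewing the printed commands first is the safer order.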

II. Install the Spark cluster

1. Download and extract Spark

    [root@node1 soft]# tar -zxf spark-0.9.1-bin-hadoop2.tgz -C /usr/local/
    [root@node1 soft]# ln -s /usr/local/spark-0.9.1-bin-hadoop2/ /usr/local/spark

2. Configure the Spark environment variables

    [root@node1 soft]# cat /etc/profile.d/spark.sh
    SPARK_HOME=/usr/local/spark
    PATH=$SPARK_HOME/bin:$PATH
    [root@node1 soft]# . /etc/profile.d/spark.sh

3. Edit the spark-env.sh configuration file

    [root@node1 conf]# pwd
    /usr/local/spark/conf
    [root@node1 conf]# cp spark-env.sh.template spark-env.sh
    [root@node1 conf]# cat spark-env.sh | grep -v ^$ | grep -v ^#
    export SCALA_HOME=/usr/local/scala
    export JAVA_HOME=/usr/java/default
    export SPARK_MASTER_IP=192.168.1.1
    export SPARK_WORKER_MEMORY=512m
    export SPARK_WORKER_PORT=7077

4. Configure slaves

    [root@node1 conf]# pwd
    /usr/local/spark/conf
    [root@node1 conf]# cat slaves
    # A Spark Worker will be started on each of the machines listed below.
    node1
    node2
    node3

5. Copy the Spark program files and configuration files to the worker nodes

    [root@node1 ~]# scp -r /usr/local/spark-0.9.1-bin-hadoop2/ node2:/usr/local/
    [root@node1 ~]# scp -r /usr/local/spark-0.9.1-bin-hadoop2/ node3:/usr/local/
    [root@node1 ~]# ssh node2 ln -s /usr/local/spark-0.9.1-bin-hadoop2/ /usr/local/spark
    [root@node1 ~]# ssh node3 ln -s /usr/local/spark-0.9.1-bin-hadoop2/ /usr/local/spark
    [root@node1 ~]# scp /etc/profile.d/spark.sh node2:/etc/profile.d/
    spark.sh                                  100%   55     0.1KB/s   00:00
    [root@node1 ~]# scp /etc/profile.d/spark.sh node3:/etc/profile.d/
    spark.sh                                  100%   55     0.1KB/s   00:00

6. Start the Spark cluster

    [root@node1 ~]# cd /usr/local/spark
    [root@node1 spark]# sbin/start-all.sh

7. Check the Spark processes

    [root@node1 spark]# jps
    32903 Master
    33071 Jps
    [root@node1 spark]# ssh node2 jps
    20357 Jps
    20297 Worker
    [root@node1 spark]# ssh node3 jps
    12596 Jps
    12538 Worker
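Reading the `jps` listings by eye works for three nodes, but the check can also be scripted. The sketch below is an illustration only: `has_daemon` is a hypothetical helper name, and it assumes `jps` prints one `<pid> <class name>` pair per line, as in the transcript above. The sample listing is copied from the node2 output so the snippet runs without a cluster.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): succeed if the jps
# listing on stdin contains a process whose name matches $1 exactly.
has_daemon() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Sample listing copied from the node2 transcript above.
sample='20357 Jps
20297 Worker'

if printf '%s\n' "$sample" | has_daemon Worker; then
    echo "Worker is running"
else
    echo "Worker is NOT running"
fi
```

On a live cluster the same function could be fed from `ssh node2 jps | has_daemon Worker`.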

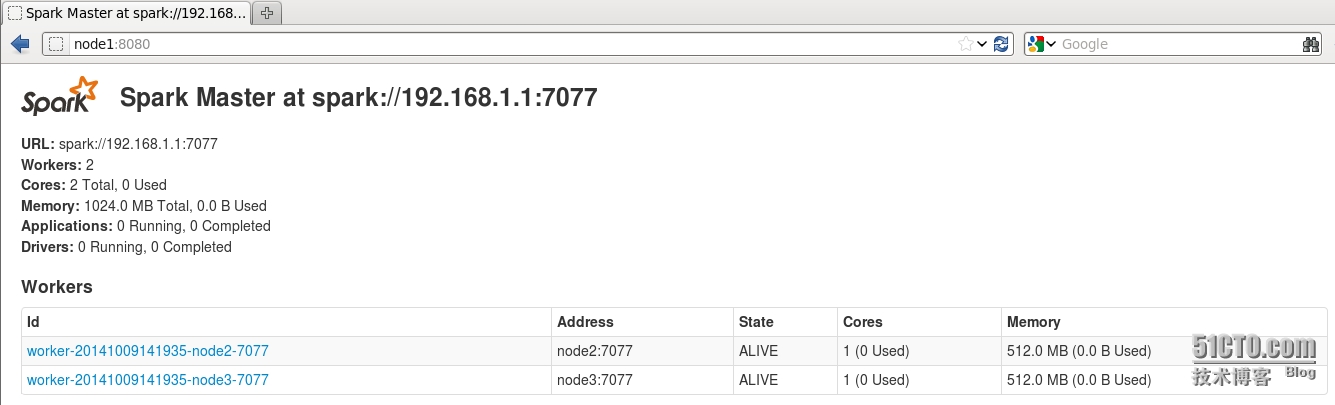

8.查看Spark Web UI

III. Configure Spark to work with Tachyon

1. Edit spark-env.sh to add the Tachyon client jar to the classpath

    export SPARK_CLASSPATH=/usr/local/tachyon/client/target/tachyon-client-0.5.0-jar-with-dependencies.jar:$SPARK_CLASSPATH

2. Create and configure core-site.xml

    [root@node1 conf]# pwd
    /usr/local/spark/conf
    [root@node1 conf]# cat core-site.xml
    <configuration>
      <property>
        <name>fs.tachyon.impl</name>
        <value>tachyon.hadoop.TFS</value>
      </property>
    </configuration>
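The transcript above only shows the finished file. One way to create it non-interactively is a here-document, sketched below. The function name `write_core_site` is hypothetical, and the demo writes into a scratch directory from `mktemp -d` rather than `/usr/local/spark/conf`, so running it does not touch a real installation.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): write the
# core-site.xml shown above into the directory given as $1.
write_core_site() {
    conf_dir="$1"
    cat > "$conf_dir/core-site.xml" <<'EOF'
<configuration>
  <property>
    <name>fs.tachyon.impl</name>
    <value>tachyon.hadoop.TFS</value>
  </property>
</configuration>
EOF
}

# Demo against a scratch directory so nothing under /usr/local/spark changes.
scratch=$(mktemp -d)
write_core_site "$scratch"
cat "$scratch/core-site.xml"
```

Pointing the function at `/usr/local/spark/conf` would reproduce the file from the walkthrough.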

3. Sync the modified configuration files to the other nodes

    [root@node1 conf]# scp core-site.xml node2:/usr/local/spark/conf/
    core-site.xml                             100%  135     0.1KB/s   00:00
    [root@node1 conf]# scp core-site.xml node3:/usr/local/spark/conf/
    core-site.xml                             100%  135     0.1KB/s   00:00
    [root@node1 conf]# scp spark-env.sh node2:/usr/local/spark/conf/
    spark-env.sh                              100% 1498     1.5KB/s   00:00
    [root@node1 conf]# scp spark-env.sh node3:/usr/local/spark/conf/
    spark-env.sh                              100% 1498     1.5KB/s   00:00

4. Start Tachyon

    [root@node1 ~]# tachyon-start.sh all Mount

5. Start Spark

    [root@node1 ~]# /usr/local/spark/sbin/start-all.sh

6. Test Spark

    [root@node1 conf]# MASTER=spark://192.168.1.1:7077 spark-shell
    scala> val s = sc.textFile("tachyon://node1:19998/gcf")
    scala> s.count
    scala> s.saveAsTextFile("tachyon://node1:19998/count")

7. View the files saved by Spark

    [root@node1 spark]# tachyon tfs ls /count
    375.00 B  10-09-2014 16:40:17:485  In Memory  /count/part-00000
    369.00 B  10-09-2014 16:40:18:218  In Memory  /count/part-00001
    0.00 B    10-09-2014 16:40:18:548  In Memory  /count/_SUCCESS
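The `_SUCCESS` entry in the listing is the empty marker file that Hadoop's output committer writes when a job finishes successfully, so its presence is a quick way to confirm the save completed. The sketch below checks for it. `job_succeeded` is a hypothetical helper name, and it assumes the path is the last whitespace-separated field of each `tachyon tfs ls` line, as in the output above. The sample listing is copied from that output.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): succeed if the listing
# on stdin contains the marker file <dir>/_SUCCESS, where <dir> is $1.
job_succeeded() {
    awk -v marker="$1/_SUCCESS" '$NF == marker { ok = 1 } END { exit !ok }'
}

# Sample listing copied from the transcript above.
listing='375.00 B 10-09-2014 16:40:17:485 In Memory /count/part-00000
369.00 B 10-09-2014 16:40:18:218 In Memory /count/part-00001
0.00 B 10-09-2014 16:40:18:548 In Memory /count/_SUCCESS'

if printf '%s\n' "$listing" | job_succeeded /count; then
    echo "output in /count is complete"
else
    echo "no _SUCCESS marker under /count"
fi
```

On a live cluster the input could come from `tachyon tfs ls /count | job_succeeded /count`.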

IV. Configure Spark to work with Tachyon HA

1. Add one more property to core-site.xml

    <property>
      <name>fs.tachyon-ft.impl</name>
      <value>tachyon.hadoop.TFS</value>
    </property>

2. Edit spark-env.sh

    export SPARK_JAVA_OPTS=" -Dtachyon.zookeeper.address=node1:2181,node2:2181,node3:2181 -Dtachyon.usezookeeper=true $SPARK_JAVA_OPTS "
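The ZooKeeper address in the line above is a comma-separated list of `host:port` pairs, which is easy to mistype by hand. As a small illustration, the list can be assembled from the node names. `zk_address` is a hypothetical helper, not something shipped with Spark or Tachyon; port 2181 and the three hostnames are the ones used in this walkthrough.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): join hosts into
# "host:port,host:port,..." using the port given as the first argument.
zk_address() {
    port="$1"
    shift
    addr=""
    for host in "$@"; do
        # Prepend a comma only when addr already holds an entry.
        addr="${addr:+$addr,}$host:$port"
    done
    printf '%s\n' "$addr"
}

ZK=$(zk_address 2181 node1 node2 node3)

# Print the line that would go into spark-env.sh.
printf 'export SPARK_JAVA_OPTS=" -Dtachyon.zookeeper.address=%s -Dtachyon.usezookeeper=true $SPARK_JAVA_OPTS "\n' "$ZK"
```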

3. Copy the modified configuration files to the other nodes

    [root@node1 conf]# scp core-site.xml node2:/usr/local/spark/conf/
    core-site.xml                             100%  252     0.3KB/s   00:00
    [root@node1 conf]# scp core-site.xml node3:/usr/local/spark/conf/
    core-site.xml                             100%  252     0.3KB/s   00:00
    [root@node1 conf]# scp spark-env.sh node2:/usr/local/spark/conf/
    spark-env.sh                              100% 1637     1.6KB/s   00:00
    [root@node1 conf]# scp spark-env.sh node3:/usr/local/spark/conf/
    spark-env.sh                              100% 1637     1.6KB/s   00:00

4. Restart Spark

    [root@node1 spark]# pwd
    /usr/local/spark
    [root@node1 spark]# sbin/start-all.sh
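The transcript above shows only `sbin/start-all.sh`. If the cluster from the previous section is still running, a restart would normally stop it first so the daemons pick up the new `spark-env.sh`. The sketch below assumes the `sbin/stop-all.sh` script that ships alongside `start-all.sh`. `restart_spark` and the `DRY_RUN` switch are hypothetical names, and by default the snippet only prints what it would run.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): stop, then start,
# the standalone cluster. DRY_RUN=1 (the default here) only prints the
# script paths instead of executing them.
SPARK_HOME="${SPARK_HOME:-/usr/local/spark}"
DRY_RUN="${DRY_RUN:-1}"

restart_spark() {
    for script in stop-all.sh start-all.sh; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "$SPARK_HOME/sbin/$script"
        else
            "$SPARK_HOME/sbin/$script"
        fi
    done
}

restart_spark
```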

5. Test

    [root@node1 spark]# MASTER=spark://192.168.1.1:7077 spark-shell
    scala> val s = sc.textFile("tachyon-ft://activeHost:19998/gcf")
    scala> s.saveAsTextFile("tachyon-ft://activeHost:19998/count-ft")

This article comes from the “tachyon” blog. Please retain this attribution: http://ucloud.blog.51cto.com/3869454/1564216
