Deploying a Heartbeat + DRBD + MySQL High-Availability (HA) Cluster

Host environment: Red Hat Enterprise Linux 6.5, 64-bit

Lab environment: server 1, IP 172.25.25.111, hostname server1.example.com

server 2, IP 172.25.25.112, hostname server2.example.com

Packages: heartbeat-3.0.4-2.el6.x86_64.rpm

heartbeat-devel-3.0.4-2.el6.x86_64.rpm

ldirectord-3.9.5-3.1.x86_64.rpm

heartbeat-libs-3.0.4-2.el6.x86_64.rpm

drbd-8.4.3.tar.gz

Firewall: disabled

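Earlier posts covered installing MySQL from source and adding the DRBD kernel module. The fixes for the errors met there, and the installation and testing details, are not repeated here and are only mentioned briefly. See those earlier posts if you are interested.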

1. Install heartbeat, edit the main configuration files, and test

1. Install heartbeat and edit the main configuration files (server 1)

[root@server1 mnt]# ls # the installation packages

heartbeat-3.0.4-2.el6.x86_64.rpm heartbeat-devel-3.0.4-2.el6.x86_64.rpm ldirectord-3.9.5-3.1.x86_64.rpm

heartbeat-libs-3.0.4-2.el6.x86_64.rpm

[root@server1 mnt]# yum install * -y # install everything in /mnt

[root@server1 mnt]# cd /usr/share/doc/heartbeat-3.0.4/ # change directory

[root@server1 heartbeat-3.0.4]# ls

apphbd.cf authkeys AUTHORS ChangeLog COPYING COPYING.LGPL ha.cf haresources README

[root@server1 heartbeat-3.0.4]# cp ha.cf haresources authkeys /etc/ha.d/ # copy the main configuration file, the resource file, and the authentication file into /etc/ha.d/

[root@server1 heartbeat-3.0.4]# cd /etc/ha.d/

[root@server1 ha.d]# ls

authkeys ha.cf harc haresources rc.d README.config resource.d shellfuncs

[root@server1 ha.d]# vim ha.cf # edit the main configuration file

34 logfacility local0

48 keepalive 2

56 deadtime 30

61 warntime 10

71 initdead 60

76 udpport 719 # UDP port used for heartbeat traffic

91 bcast eth0

157 auto_failback on

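# Node hostnames. In this setup server 1 is the primary and server 2 is the standby; the primary is the node named at the start of the haresources line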

211 node server1.example.com

212 node server2.example.com

220 ping 172.25.25.250

253 respawn hacluster /usr/lib64/heartbeat/ipfail

259 apiauth ipfail gid=haclient uid=hacluster
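The timing directives above depend on each other: keepalive should be shorter than warntime, warntime shorter than deadtime, and the comments in the sample ha.cf recommend an initdead of at least twice deadtime. As a minimal sketch, the check below reads a ha.cf and reports violations. The function name `check_ha_timing` is hypothetical, and it assumes the values are plain integer seconds in uncommented directives without a leading line number.

```shell
# check_ha_timing FILE: sanity-check the heartbeat timing directives in FILE.
# Assumption: values are whole seconds (no "ms" suffix).
check_ha_timing() {
    awk '
        $1 == "keepalive" { keepalive = $2 }
        $1 == "warntime"  { warntime  = $2 }
        $1 == "deadtime"  { deadtime  = $2 }
        $1 == "initdead"  { initdead  = $2 }
        END {
            ok = 1
            if (!(keepalive + 0 < warntime + 0)) { print "keepalive should be below warntime"; ok = 0 }
            if (!(warntime + 0 < deadtime + 0))  { print "warntime should be below deadtime"; ok = 0 }
            if (!(initdead + 0 >= 2 * deadtime)) { print "initdead should be at least twice deadtime"; ok = 0 }
            if (ok) print "timing ok"
            exit (ok ? 0 : 1)
        }
    ' "$1"
}
```

With the values used in this post (2, 10, 30, 60), `check_ha_timing /etc/ha.d/ha.cf` should report that the timing is consistent.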

[root@server1 ha.d]# vim haresources

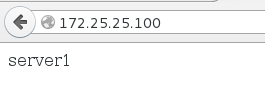

150 server1.example.com IPaddr::172.25.25.100/24/eth0 httpd # hostname of the primary node, the virtual IP to add, and the httpd service

[root@server1 ha.d]# vim authkeys # edit the authentication file

23 auth 1 # use authentication key number 1

24 1 crc # key 1 uses the crc method

[root@server1 ha.d]# chmod 600 authkeys # restrict the permissions of the authentication file

[root@server1 ha.d]# /etc/init.d/heartbeat start # start heartbeat
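The crc method only detects corrupted packets and offers no real authentication, which is acceptable on an isolated lab network. Outside a lab, authkeys also accepts sha1 with a shared key. The helper below is a sketch of how such a file could be generated; `write_authkeys` is a hypothetical name, not part of heartbeat, and the same file still has to be copied to both nodes.

```shell
# write_authkeys FILE: create a heartbeat authkeys file that uses sha1
# with a random shared key (hypothetical helper, not shipped with heartbeat).
write_authkeys() {
    file=$1
    # 32 random bytes rendered as 64 hexadecimal characters
    key=$(head -c 32 /dev/urandom | od -An -tx1 | tr -d ' \n')
    # Create the file in a subshell so the tighter umask does not leak out
    ( umask 077; printf 'auth 1\n1 sha1 %s\n' "$key" > "$file" )
    chmod 600 "$file"    # heartbeat expects authkeys to be readable by root only
}
```

For example, `write_authkeys /etc/ha.d/authkeys` on server 1, followed by copying the file to server 2 with scp.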

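Server 2 is configured exactly like server 1. Once heartbeat is installed on server 2, copy the finished configuration files from server 1 into the same directory on server 2, then start the heartbeat service there, as follows: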

[root@server1 ha.d]# scp ha.cf haresources authkeys root@172.25.25.112:/etc/ha.d/ # copy the files to server 2

root@172.25.25.112's password:

[root@server2 ha.d]# /etc/init.d/heartbeat start # start heartbeat (server 2)

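Before testing, install httpd on both server 1 and server 2 and give each a test page with different content. Note: do not start httpd manually; heartbeat starts it automatically.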

2. Test against 172.25.25.100

[root@server1 ha.d]# /etc/init.d/httpd stop # stop httpd on server 1 to test

Stopping httpd: [ OK ]

[root@server1 ha.d]# /etc/init.d/httpd start # start httpd again

Starting httpd: [ OK ]

2. Compile and install the DRBD kernel module, and test

1. Compile and install the DRBD kernel module

[root@server1 mnt]# ls

drbd-8.4.3.tar.gz

[root@server1 mnt]# tar zxf drbd-8.4.3.tar.gz # extract

[root@server1 mnt]# ls

drbd-8.4.3 drbd-8.4.3.tar.gz

[root@server1 mnt]# cd drbd-8.4.3

[root@server1 drbd-8.4.3]# ./configure --enable-spec --with-km

[root@server1 drbd-8.4.3]# rpmbuild -bb drbd.spec

error: File /root/rpmbuild/SOURCES/drbd-8.4.3.tar.gz: No such file or directory

[root@server1 drbd-8.4.3]# cp /mnt/drbd-8.4.3.tar.gz /root/rpmbuild/SOURCES/

[root@server1 drbd-8.4.3]# rpmbuild -bb drbd.spec

[root@server1 drbd-8.4.3]# rpmbuild -bb drbd-km.spec
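The first rpmbuild run fails because the spec file expects the source tarball in rpmbuild's SOURCES directory. As a small sketch, the staging step can be wrapped in a function so that it runs before the build. `stage_source` is a hypothetical helper name, and the default top directory of `~/rpmbuild` is an assumption that matches the path in the error message above.

```shell
# stage_source TARBALL [TOPDIR]: copy a source tarball into rpmbuild's SOURCES
# directory so that "rpmbuild -bb" can find it. TOPDIR defaults to ~/rpmbuild.
stage_source() {
    tarball=$1
    topdir=${2:-$HOME/rpmbuild}
    # Fail early with a clear message instead of a later rpmbuild error
    [ -f "$tarball" ] || { echo "missing tarball: $tarball" >&2; return 1; }
    mkdir -p "$topdir/SOURCES"
    cp "$tarball" "$topdir/SOURCES/"
}
```

In this walkthrough that would be `stage_source /mnt/drbd-8.4.3.tar.gz` followed by the two rpmbuild commands.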

[root@server1 drbd-8.4.3]# cd /root/rpmbuild/RPMS/x86_64/ # change directory

[root@server1 x86_64]# ls # 10 packages were built

drbd-8.4.3-2.el6.x86_64.rpm

drbd-bash-completion-8.4.3-2.el6.x86_64.rpm

drbd-debuginfo-8.4.3-2.el6.x86_64.rpm

drbd-heartbeat-8.4.3-2.el6.x86_64.rpm

drbd-km-2.6.32_431.el6.x86_64-8.4.3-2.el6.x86_64.rpm

drbd-km-debuginfo-8.4.3-2.el6.x86_64.rpm

drbd-pacemaker-8.4.3-2.el6.x86_64.rpm

drbd-udev-8.4.3-2.el6.x86_64.rpm

drbd-utils-8.4.3-2.el6.x86_64.rpm

drbd-xen-8.4.3-2.el6.x86_64.rpm

[root@server1 x86_64]# yum install * -y # install all of the packages

[root@server1 x86_64]# scp * root@172.25.25.112:/mnt # copy the packages to server 2

[root@server2 mnt]# rpm -vih * # install

Preparing... ########################################### [100%]

1:drbd-utils ########################################### [ 10%]

2:drbd-bash-completion ########################################### [ 20%]

3:drbd-heartbeat ########################################### [ 30%]

4:drbd-pacemaker ########################################### [ 40%]

5:drbd-udev ########################################### [ 50%]

6:drbd-xen ########################################### [ 60%]

7:drbd ########################################### [ 70%]

8:drbd-km-2.6.32_431.el6.########################################### [ 80%]

9:drbd-km-debuginfo ########################################### [ 90%]

10:drbd-debuginfo ########################################### [100%]

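Add a new virtual disk to each of the server 1 and server 2 virtual machines (or create a new partition on each), then continue with the following steps: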

[root@server1 x86_64]# cd /etc/drbd.d/ # change to the DRBD configuration directory

[root@server1 drbd.d]# vim dbdata.res # create a resource file; the suffix must be .res

resource dbdata {
    meta-disk internal;
    device /dev/drbd1;
    syncer {
        verify-alg sha1;    # digest algorithm used between the primary and the standby (for online verification)
    }
    # Each host section starts with "on" followed by the hostname; the braces hold that host's settings
    on server1.example.com {         # hostname
        disk /dev/vdb;               # backing disk
        address 172.25.29.1:7789;    # IP and port; the port is one you choose
    }
    on server2.example.com {
        disk /dev/vdb;
        address 172.25.29.2:7789;
    }
}
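Because both nodes need an identical resource file, it can help to generate it from parameters instead of typing it twice. The function below is only a sketch: `render_drbd_res` is a hypothetical name, and it places `verify-alg` in a `net` section, which is my understanding of the native DRBD 8.4 layout (the `syncer` section used above is the older 8.3-style spelling, which 8.4 still accepted here). Treat that placement as an assumption to check against the drbd.conf man page for your version.

```shell
# render_drbd_res NAME DEVICE DISK HOST1 ADDR1 HOST2 ADDR2
# Print a two-node DRBD resource definition on standard output.
# Assumption: both hosts use the same backing DISK, as in this post.
render_drbd_res() {
    cat <<EOF
resource $1 {
    meta-disk internal;
    device $2;
    net {
        verify-alg sha1;
    }
    on $4 {
        disk $3;
        address $5;
    }
    on $6 {
        disk $3;
        address $7;
    }
}
EOF
}
```

For the setup in this post the call would be `render_drbd_res dbdata /dev/drbd1 /dev/vdb server1.example.com 172.25.29.1:7789 server2.example.com 172.25.29.2:7789 > /etc/drbd.d/dbdata.res`.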

[root@server1 drbd.d]# scp dbdata.res root@172.25.29.2:/etc/drbd.d/

root@172.25.29.2's password:

# Initialize the metadata on both server 1 and server 2, then start drbd on both

[root@server1 drbd.d]# drbdadm create-md dbdata # initialize the metadata

--== Thank you for participating in the global usage survey ==--

The server's response is:

Writing meta data...

initializing activity log

NOT initializing bitmap

New drbd meta data block successfully created.

[root@server2 drbd.d]# drbdadm create-md dbdata

--== Thank you for participating in the global usage survey ==--

The server's response is:

Writing meta data...

initializing activity log

NOT initializing bitmap

New drbd meta data block successfully created.

[root@server1 drbd.d]# /etc/init.d/drbd start # start drbd

[root@server2 drbd.d]# /etc/init.d/drbd start

[root@server1 drbd.d]# cat /proc/drbd # check the status

version: 8.4.3 (api:1/proto:86-101)

GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by root@server1.example.com, 2016-10-08 15:10:25

1: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r-----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:2097052

[root@server2 drbd.d]# cat /proc/drbd

version: 8.4.3 (api:1/proto:86-101)

GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by root@server1.example.com, 2016-10-08 15:10:25

1: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r-----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:2097052

# The output above shows that both nodes are secondary

[root@server1 drbd.d]# drbdadm primary dbdata --force # force server 1 to become primary

[root@server1 drbd.d]# cat /proc/drbd # check again: server 1 and server 2 are synchronizing

version: 8.4.3 (api:1/proto:86-101)

GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by root@server1.example.com, 2016-10-08 15:10:25

1: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r-----

ns:496640 nr:0 dw:0 dr:497304 al:0 bm:30 lo:0 pe:4 ua:0 ap:0 ep:1 wo:f oos:1604508

[===>................] sync'ed: 23.7% (1604508/2097052)K

finish: 0:00:16 speed: 98,508 (98,508) K/sec

[root@server1 drbd.d]# cat /proc/drbd

version: 8.4.3 (api:1/proto:86-101)

GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by root@server1.example.com, 2016-10-08 15:10:25

1: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r---n-

ns:1566436 nr:0 dw:0 dr:1569432 al:0 bm:94 lo:0 pe:16 ua:3 ap:0 ep:1 wo:f oos:546716

[=============>......] sync'ed: 74.1% (546716/2097052)K

finish: 0:00:08 speed: 64,596 (64,596) K/sec

[root@server1 drbd.d]# cat /proc/drbd # synchronization has finished; server 1 is primary

version: 8.4.3 (api:1/proto:86-101)

GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by root@server1.example.com, 2016-10-08 15:10:25

1: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----

ns:2097052 nr:0 dw:0 dr:2097716 al:0 bm:128 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

[root@server2 drbd.d]# cat /proc/drbd

version: 8.4.3 (api:1/proto:86-101)

GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by root@server1.example.com, 2016-10-08 15:10:25

1: cs:Connected ro:Secondary/Primary ds:UpToDate/UpToDate C r-----

ns:0 nr:2097052 dw:2097052 dr:0 al:0 bm:128 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0
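Rather than re-running `cat /proc/drbd` by hand, the status line can be parsed. The sketch below extracts the `cs` (connection state), `ro` (roles) or `ds` (disk states) field for one minor device from text in the /proc/drbd format shown above. The function names `drbd_field` and `drbd_synced` are hypothetical, and the parser assumes the 8.4-style layout seen in this post.

```shell
# drbd_field MINOR FIELD: print the value of cs, ro or ds for one DRBD minor,
# reading /proc/drbd-formatted text from standard input.
drbd_field() {
    awk -v minor="$1:" -v field="$2" '
        $1 == minor {
            # Each token looks like "name:value"; print the value of the requested name
            for (i = 2; i <= NF; i++) {
                n = index($i, ":")
                if (substr($i, 1, n - 1) == field) print substr($i, n + 1)
            }
        }'
}

# drbd_synced MINOR: succeed only when both disks of that minor are UpToDate.
drbd_synced() {
    [ "$(drbd_field "$1" ds)" = "UpToDate/UpToDate" ]
}
```

A wait loop such as `until drbd_synced 1 < /proc/drbd; do sleep 5; done` could then block until the initial synchronization has completed.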

[root@server1 drbd.d]# mkfs.ext4 /dev/drbd1 # create the filesystem

3. Add DRBD and MySQL to the heartbeat resource file, and test

1. Add DRBD and MySQL to the heartbeat resource file (server 1)

[root@server1 ~]# yum install mysql-server -y # install MySQL on both server 1 and server 2; do not start it manually

[root@server1 ~]# cat /proc/drbd # server 1 and server 2 must both be secondary

version: 8.4.3 (api:1/proto:86-101)

GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by root@server1.example.com, 2016-10-02 15:19:31

1: cs:Connected ro:Secondary/Secondary ds:UpToDate/UpToDate C r-----

ns:171868 nr:0 dw:0 dr:171868 al:0 bm:18 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

[root@server1 ~]# cd /etc/ha.d/ # change to the heartbeat configuration directory

[root@server1 ha.d]# vim haresources # edit the resource file

150 server1.example.com IPaddr::172.25.25.100/24/eth0 drbddisk::dbdata Filesystem::/dev/drbd1::/var/lib/mysql::ext4 mysqld # mount /dev/drbd1 on /var/lib/mysql and start mysql
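The order of the entries on the haresources line matters: heartbeat starts the resources from left to right and stops them from right to left, so the DRBD resource has to be promoted before the filesystem is mounted, and the filesystem mounted before mysqld starts. As an illustration only, the hypothetical helper below splits such a line into its preferred node, start order and stop order. It assumes whitespace-separated fields with no leading line number or trailing comment.

```shell
# haresources_order LINE: print the preferred node, then the resources in
# start order (left to right) and in stop order (right to left).
haresources_order() {
    set -- $1                 # split the line on whitespace into positional parameters
    echo "node: $1"
    shift                     # the remaining parameters are the resources
    start="$*"
    stop=""
    for r in "$@"; do
        stop="$r${stop:+ $stop}"    # prepend each resource to reverse the list
    done
    echo "start: $start"
    echo "stop: $stop"
}
```

Running it on the line used here lists `IPaddr`, `drbddisk`, `Filesystem`, `mysqld` as the start order and the reverse as the stop order.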

[root@server1 ~]# /etc/init.d/heartbeat stop # stop heartbeat

Stopping High-Availability services: Done.

[root@server1 ~]# /etc/init.d/heartbeat status # check the status

heartbeat is stopped. No process

[root@server1 ~]# /etc/init.d/heartbeat start # start heartbeat

Starting High-Availability services: INFO: Resource is stopped

Done.

[root@server1 ha.d]# scp haresources root@172.25.25.112:/etc/ha.d/

root@172.25.25.112's password:

Server 2

[root@server2 ha.d]# /etc/init.d/heartbeat stop

Stopping High-Availability services: Done.

[root@server2 ha.d]# /etc/init.d/heartbeat start

Starting High-Availability services: INFO: Resource is stopped

Done.

2. Test

[root@server3 ~]# ping 172.25.25.100 # the virtual IP answers

64 bytes from 172.25.29.100: icmp_seq=1 ttl=64 time=0.485 ms

64 bytes from 172.25.29.100: icmp_seq=2 ttl=64 time=0.292 ms

64 bytes from 172.25.29.100: icmp_seq=3 ttl=64 time=0.289 ms

[root@server3 ~]# arp -an|grep 172.25.25.100 # check which MAC address holds the virtual IP

? (172.25.25.100) at 52:54:00:ec:8b:36 [ether] on eth0

[root@server1 ~]# ip addr show eth0 # the MAC address matches server 1

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:ec:8b:36 brd ff:ff:ff:ff:ff:ff

inet 172.25.25.111/24 brd 172.25.25.255 scope global eth0

inet 172.25.25.100/24 brd 172.25.25.255 scope global secondary eth0

inet6 fe80::5054:ff:feec:8b36/64 scope link

valid_lft forever preferred_lft forever

[root@server1 ~]# df # the DRBD device is mounted as well

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 7853764 1290388 6164428 18% /

tmpfs 510200 0 510200 0% /dev/shm

/dev/vda1 495844 33467 436777 8% /boot

/dev/drbd1 4128284 95176 3823404 3% /var/lib/mysql

[root@server1 ~]# mysql # MySQL is running too

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| test |

+--------------------+

3 rows in set (0.00 sec)

[root@server2 ha.d]# mysql

ERROR 2002 (HY000): Can't connect to local MySQL server through socket '/var/lib/mysql/mysql.sock' (2)

[root@server1 ~]# /etc/init.d/heartbeat stop # after heartbeat is stopped on server 1

Stopping High-Availability services: Done.

[root@server1 ~]# mysql

ERROR 2002 (HY000): Can't connect to local MySQL server through socket '/var/lib/mysql/mysql.sock' (2)

[root@server3 ~]# arp -an|grep 172.25.25.100 # check which MAC address holds the virtual IP

? (172.25.25.100) at 52:54:00:85:1a:3b [ether] on eth0

[root@server2 ha.d]# ip addr show eth0 # server 2 is now serving

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:85:1a:3b brd ff:ff:ff:ff:ff:ff

inet 172.25.25.112/24 brd 172.25.25.255 scope global eth0

inet6 fe80::5054:ff:fe85:1a3b/64 scope link

valid_lft forever preferred_lft forever

[root@server2 ha.d]# df # the DRBD device is now mounted on server 2

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 7853764 1188980 6265836 16% /

tmpfs 510200 0 510200 0% /dev/shm

/dev/vda1 495844 33467 436777 8% /boot

/dev/drbd1 4128284 95176 3823404 3% /var/lib/mysql

[root@server1 ~]# /etc/init.d/heartbeat start # after heartbeat is started again on server 1

Starting High-Availability services: INFO: Resource is stopped

Done.

[root@server3 ~]# arp -an|grep 172.25.25.100 # the virtual IP is back on server 1

? (172.25.25.100) at 52:54:00:ec:8b:36 [ether] on eth0

[root@server1 ~]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:ec:8b:36 brd ff:ff:ff:ff:ff:ff

inet 172.25.25.111/24 brd 172.25.25.255 scope global eth0

inet 172.25.25.100/24 brd 172.25.25.255 scope global secondary eth0

inet6 fe80::5054:ff:feec:8b36/64 scope link

valid_lft forever preferred_lft forever
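The failover checks above come down to asking which node's MAC address currently answers for the virtual IP. That lookup can be scripted on the client. The sketch below parses `arp -an` output from standard input; the function names are hypothetical, and the MAC-to-node table is taken from the `ip addr show eth0` outputs in this post, so it would need adjusting for any other machines.

```shell
# vip_mac VIP: print the MAC address recorded for VIP, reading "arp -an"
# output from standard input.
vip_mac() {
    awk -v ip="($1)" '$2 == ip { print $4 }'
}

# vip_owner VIP: translate that MAC address into a node name.
# The table below is specific to the two machines in this walkthrough.
vip_owner() {
    case "$(vip_mac "$1")" in
        52:54:00:ec:8b:36) echo server1 ;;
        52:54:00:85:1a:3b) echo server2 ;;
        *) echo unknown ;;
    esac
}
```

On the client, `arp -an | vip_owner 172.25.25.100` would then name the node that currently holds the virtual IP.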

This article comes from the "不忘初心,方得始终" blog. Please keep this source link: http://12087746.blog.51cto.com/12077746/1860294
