
Tips for web crawlers

By default, keep a website's crawler restrictions in mind.

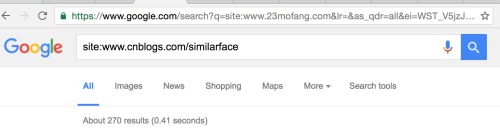

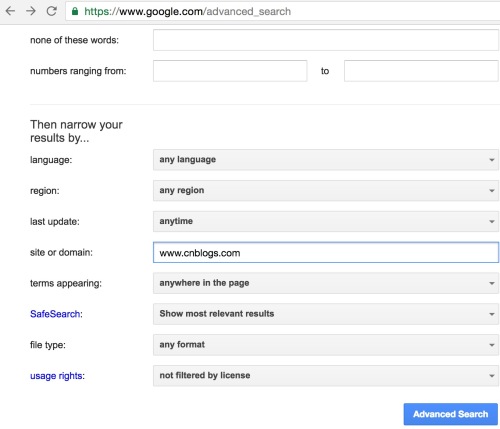

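Start with the site's robots.txt. Before crawling a site, also ask how much there is to crawl: how would you estimate its size?

Every website is built on a mix of technologies. How do you determine which ones a given site uses?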
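The robots.txt check can be sketched with Python 3's standard `urllib.robotparser` module. This is only a minimal sketch: the robots.txt contents, the crawler names (`GoodCrawler`, `BadCrawler`) and the `example.com` URLs are all made up for illustration, and the rules are parsed from a string so the example runs without network access.

```python
from urllib import robotparser

# Hypothetical robots.txt contents (an assumption, not taken from a real site).
SAMPLE_ROBOTS_TXT = """\
User-agent: BadCrawler
Disallow: /

User-agent: *
Crawl-delay: 5
Disallow: /trap
"""


def build_parser(robots_txt):
    """Return a RobotFileParser loaded from robots.txt text."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether a given user agent may fetch a given URL.
allowed_good = parser.can_fetch("GoodCrawler", "http://example.com/index.html")
allowed_trap = parser.can_fetch("GoodCrawler", "http://example.com/trap")
allowed_bad = parser.can_fetch("BadCrawler", "http://example.com/index.html")

# Crawl-delay (in seconds) requested for this user agent, if any.
delay = parser.crawl_delay("GoodCrawler")

print(allowed_good, allowed_trap, allowed_bad, delay)
```

Against a live site, you would instead call `set_url()` with the site's robots.txt address followed by `read()`, then make the same `can_fetch()` checks before each request.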

    pip install builtwith

    >>> import builtwith
    >>> builtwith.parse('http://www.douban.com')
    {u'javascript-frameworks': [u'jQuery'], u'tag-managers': [u'Google Tag Manager'], u'analytics': [u'Piwik']}

To find out who owns a website, query its WHOIS record:

    pip install python-whois

    >>> import whois
    >>> print(whois.whois('cnblogs.com'))
    {
      "updated_date": ["2014-11-12 00:00:00", "2014-11-12 01:07:15"],
      "status": [
        "clientDeleteProhibited https://icann.org/epp#clientDeleteProhibited",
        "clientTransferProhibited https://icann.org/epp#clientTransferProhibited"
      ],
      "name": "du yong",
      "dnssec": "unsigned",
      "city": "Shanghai",
      "expiration_date": ["2021-11-12 00:00:00", "2021-11-11 04:00:00"],
      "zipcode": "201203",
      "domain_name": ["CNBLOGS.COM", "cnblogs.com"],
      "country": "CN",
      "whois_server": "whois.35.com",
      "state": "Shanghai",
      "registrar": "35 Technology Co., Ltd.",
      "referral_url": "http://www.35.com",
      "address": "Room 312, No.22 BOXIA Rd, Pudong New District",
      "name_servers": ["NS3.DNSV4.COM", "NS4.DNSV4.COM", "ns3.dnsv4.com", "ns4.dnsv4.com"],
      "org": "Shanghai Yucheng Information Technology Co. Ltd.",
      "creation_date": ["2003-11-12 00:00:00", "2003-11-11 04:00:00"],
      "emails": ["abuse@35.cn", "dudu.yz@gmail.com"]
    }

This article comes from the "similarface" blog. Please keep this attribution: http://similarface.blog.51cto.com/3567714/1861494
