
Building a Highly Available Load Balancer: CentOS 6.4 + HAProxy + Keepalived

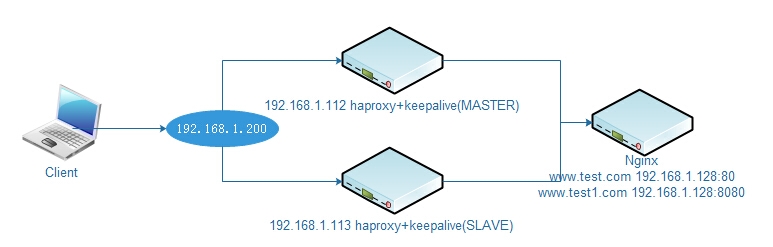

1. Lab topology. NGINX is used here to provide port-based virtual hosts, which makes the later tests easier.

2. Preparation before installation

HAProxy: if you are downloading from within China, you know the drill.

Keepalived: download from www.keepalived.org

Synchronize the clocks on both nodes:

    # ntpdate pool.ntp.org

3. Install HAProxy (the steps are the same on the master and backup nodes)

    # tar -zxvf haproxy-1.5.9.tar.gz
    # cd haproxy-1.5.9
    # uname -a
    Linux localhost.localdomain 2.6.32-358.el6.x86_64 #1 SMP Fri Feb 22 00:31:26 UTC 2013 x86_64 x86_64 x86_64 GNU/Linux
    # make TARGET=linux26 PREFIX=/usr/local/haproxy install
    # cd /usr/local/haproxy
    # mkdir conf logs

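4. Create the configuration file with the following content

    # vim conf/haproxy.cfg

    global
        log 127.0.0.1 local0        # send all logs to the local syslog daemon, facility local0
        maxconn 5120                # maximum number of connections
        chroot /usr/local/haproxy   # chroot into this directory
        uid 100                     # uid the process runs as
        gid 100                     # gid the process runs as
        daemon                      # run HAProxy in the background
        nbproc 2                    # start 2 processes when running in daemon mode
        pidfile /usr/local/haproxy/logs/haproxy.pid   # pid file

    defaults
        log 127.0.0.1 local3
        mode http                   # http = layer 7, tcp = layer 4, health = only returns OK
        option httplog              # use the HTTP log format
        option dontlognull
        option redispatch
        retries 3                   # after 3 failed attempts the server is considered unavailable
        maxconn 3000                # default maximum number of connections
        contimeout 5000             # connect timeout (ms)
        clitimeout 50000            # client timeout (ms)
        srvtimeout 50000            # server timeout (ms)
        stats enable
        stats uri /admin            # URL of the statistics page
        stats auth admin:admin      # user name and password for the statistics page
        stats realm Haproxy\ statistic   # prompt text of the statistics page
        stats hide-version          # hide the version on the statistics page

    listen web_proxy *:80
        server web1 192.168.1.128:80 check inter 5000 fall 1 rise 2
        server web2 192.168.1.128:8080 check inter 5000 fall 1 rise 2

In the server lines, check inter 5000 sets the health-check interval (ms), rise 2 means a server is considered up after 2 successful checks, and fall 1 means it is considered down after 1 failed check. A weight option (not set here) would assign a weight to the server. HAProxy configuration comments start with #, and each server in a proxy needs its own name, so the second server is named web2.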
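The article does not show how HAProxy itself is started. A minimal sketch follows, assuming the binary was installed to /usr/local/haproxy/sbin/haproxy by the PREFIX used in step 3 and that the configuration lives at conf/haproxy.cfg under the same prefix. The function name start_haproxy and the two variables are illustrative names, not part of the original setup. It checks the configuration with -c first and starts the daemon only if the check passes.

```shell
# Assumed paths, derived from PREFIX=/usr/local/haproxy in step 3; adjust as needed.
HAPROXY_BIN="${HAPROXY_BIN:-/usr/local/haproxy/sbin/haproxy}"
HAPROXY_CFG="${HAPROXY_CFG:-/usr/local/haproxy/conf/haproxy.cfg}"

# Validate the configuration (-c), then start HAProxy only if validation passed.
start_haproxy() {
    if ! "$HAPROXY_BIN" -c -f "$HAPROXY_CFG"; then
        echo "config check failed: $HAPROXY_CFG" >&2
        return 1
    fi
    "$HAPROXY_BIN" -f "$HAPROXY_CFG"
}
```

Run start_haproxy as root on both nodes, since the configuration uses chroot and binds port 80.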

5. Install and configure Keepalived (the installation is the same on the master and backup nodes; pay attention to the differences in the configuration file)

    # yum -y install openssl-devel
    # tar -zxvf keepalived-1.2.13.tar.gz
    # cd keepalived-1.2.13
    # ./configure --prefix=/usr/local/keepalived
    # make && make install
    # cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/
    # ln -s /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/keepalived
    # chmod +x /etc/init.d/keepalived
    # mkdir /etc/keepalived
    # ln -sf /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf

6. Edit the configuration file

    # cat /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost.com
        }
        notification_email_from root@localhost.com
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
    }

    vrrp_script chk_haproxy {
        script "/root/chk_haproxy.sh"    # check script
        interval 2
        weight 2
    }

    vrrp_instance VI_1 {
        state MASTER                     # initial state of the instance; use BACKUP on the backup node
        interface eth0
        virtual_router_id 51
        priority 100                     # the higher priority wins the master election; keep it at least 50 above the backup
        advert_int 1
        authentication {
            auth_type PASS               # authentication type; PASS and AH are supported
            auth_pass 1111               # authentication password
        }
        virtual_ipaddress {
            192.168.1.200                # VIP
        }
        track_script {
            chk_haproxy                  # the check defined in vrrp_script above
        }
    }

7. Keepalived check script

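    # cat chk_haproxy.sh
    #!/bin/bash
    # Count the running haproxy processes.
    A=`ps -C haproxy --no-header | wc -l`
    # If none are left, stop keepalived so that the VIP moves to the backup node.
    if [ "$A" -eq 0 ]; then
        /etc/init.d/keepalived stop
    fi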
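A common variation on this check, shown here only as a sketch and not as the author's script, tries to restart HAProxy once before giving up the VIP. The function names, the RETRY_WAIT variable and the HAProxy paths are assumptions (the paths follow the install prefix used in step 3).

```shell
#!/bin/bash
# Assumed paths; adjust to the actual installation.
HAPROXY_BIN="${HAPROXY_BIN:-/usr/local/haproxy/sbin/haproxy}"
HAPROXY_CFG="${HAPROXY_CFG:-/usr/local/haproxy/conf/haproxy.cfg}"
KEEPALIVED_INIT="${KEEPALIVED_INIT:-/etc/init.d/keepalived}"
RETRY_WAIT="${RETRY_WAIT:-1}"   # seconds to wait after the restart attempt

# Print the number of running processes with the given command name.
process_count() {
    ps -C "$1" --no-header 2>/dev/null | wc -l
}

# If haproxy is gone, try one restart; if it is still gone, stop keepalived.
check_haproxy() {
    if [ "$(process_count haproxy)" -eq 0 ]; then
        "$HAPROXY_BIN" -f "$HAPROXY_CFG"
        sleep "$RETRY_WAIT"
        if [ "$(process_count haproxy)" -eq 0 ]; then
            "$KEEPALIVED_INIT" stop
        fi
    fi
}

# When saved as the check script, finish the file with a call to: check_haproxy
```

The trade-off is that a crashed HAProxy gets one chance to recover locally, at the cost of a slightly longer delay before the VIP moves.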

8. Start Keepalived

    # service keepalived start

Error message:

    Starting keepalived: /bin/bash: keepalived: command not found [FAILED]

Fix:

    # ln -s /usr/local/keepalived/sbin/keepalived /usr/sbin/keepalived
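Before Keepalived is started on the backup node, its configuration needs the differences noted in step 6: state BACKUP and a lower priority. As a sketch, the backup file can be derived from the master's file with sed. The function name make_backup_conf is hypothetical, and the default priority of 50 is an assumption based on the "at least 50 lower" note in the configuration.

```shell
# make_backup_conf SRC DST [PRIORITY]
# Copy SRC to DST, switching "state MASTER" to "state BACKUP" and
# replacing the priority value (50 if no third argument is given).
make_backup_conf() {
    sed -e 's/^\([[:space:]]*state[[:space:]]\{1,\}\)MASTER/\1BACKUP/' \
        -e "s/^\([[:space:]]*priority[[:space:]]\{1,\}\)[0-9]\{1,\}/\1${3:-50}/" \
        "$1" > "$2"
}
```

For example, make_backup_conf keepalived.conf keepalived.backup.conf 50 produces a file that can be copied to /etc/keepalived/keepalived.conf on the backup node. All other settings, including virtual_router_id and auth_pass, must stay identical on both nodes.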

9. Verification

First, take a look at the nginx configuration:

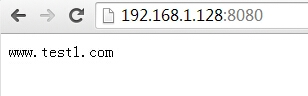

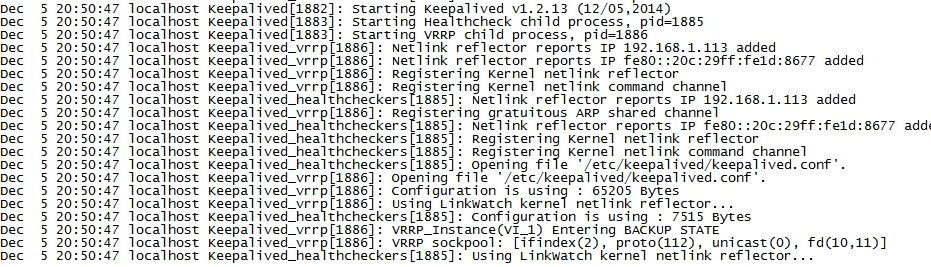

Start the master and the backup in turn. The logs on the two nodes look like this:

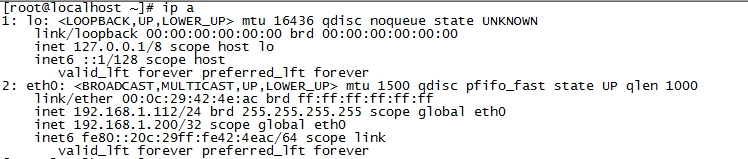

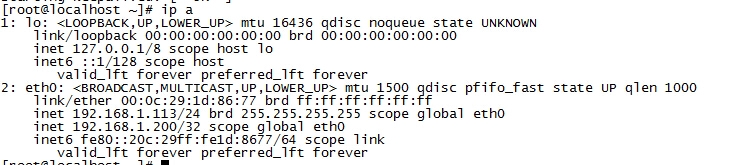

The VIP is visible on the master:

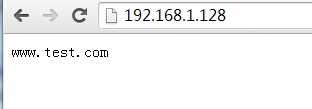

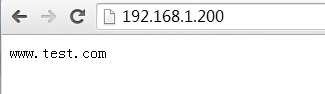

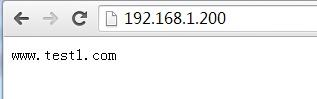

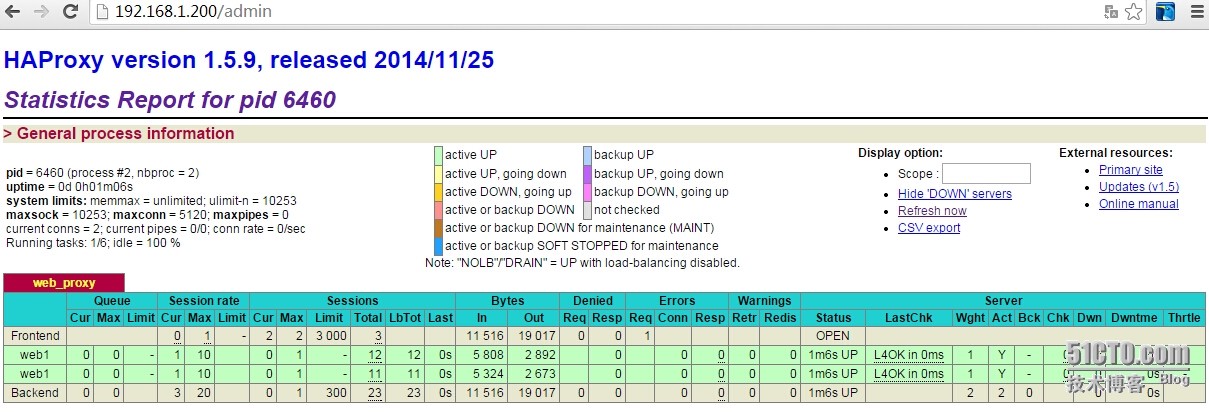

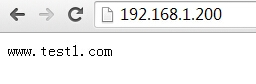
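The VIP check on the master can also be scripted. The sketch below assumes the interface eth0 and the VIP 192.168.1.200 from the Keepalived configuration; the helper name vip_present is hypothetical. It reads the one-line-per-address output of ip -o -4 addr and succeeds if the address is listed.

```shell
# Succeed if the given IPv4 address appears in `ip -o -4 addr` output read from stdin.
vip_present() {
    grep -qF "inet $1/"
}

# Example (run manually on either node):
# ip -o -4 addr show dev eth0 | vip_present 192.168.1.200 && echo "VIP is on this node"
```

Running the example on both nodes should report the VIP on exactly one of them.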

Next, test whether HAProxy balances the load correctly.

Check the built-in statistics page: round-robin works as expected!
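Round-robin can also be checked from a client by requesting the VIP several times and tallying the answers. This is a sketch under two assumptions: curl is installed on the client, and the two NGINX virtual hosts return pages whose first line differs. The names FETCH and probe are illustrative.

```shell
# Command used to fetch a URL; curl is assumed to be available.
FETCH="${FETCH:-curl -s --max-time 3}"

# probe URL COUNT: fetch URL COUNT times and print the first line of each response.
probe() {
    i=0
    while [ "$i" -lt "$2" ]; do
        $FETCH "$1" | head -n 1
        i=$((i + 1))
    done
}

# Example (run manually): count how often each backend answered.
# probe http://192.168.1.200/ 6 | sort | uniq -c
```

With two healthy backends and round-robin balancing, the two distinct first lines should appear roughly equally often.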

Next, test whether a failover takes place when HAProxy on the master dies.

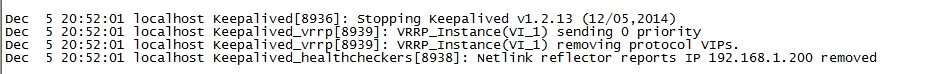

    # killall haproxy

Then look at the Keepalived log: the master's Keepalived has been stopped by the check script, and the VIP starts to move.

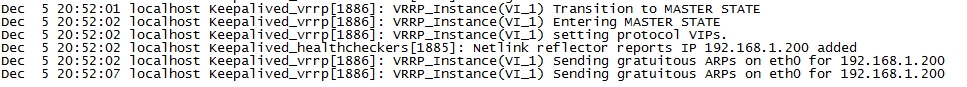

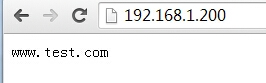

On the backup node you can see that it has been promoted to master and has taken over the VIP.

Finally, test access once more: everything works!

This article comes from the “毛竹之势” blog. Please keep this attribution: http://peaceweb.blog.51cto.com/3226037/1586797

