
Cloudera Hadoop 5.2 Installation

2024-08-07 14:03:01

Environment preparation

1) JDK

2) Local CDH yum repository (omitted) .....

3) Local hosts file

10.20.120.11 sp-kvm01.hz.idc.com

10.20.120.12 sp-kvm02.hz.idc.com

10.20.120.13 sp-kvm03.hz.idc.com

10.20.120.14 sp-kvm04.hz.idc.com

10.20.120.21 hd01.hz.idc.com

10.20.120.22 hd02.hz.idc.com

10.20.120.31 hd-node01.hz.idc.com

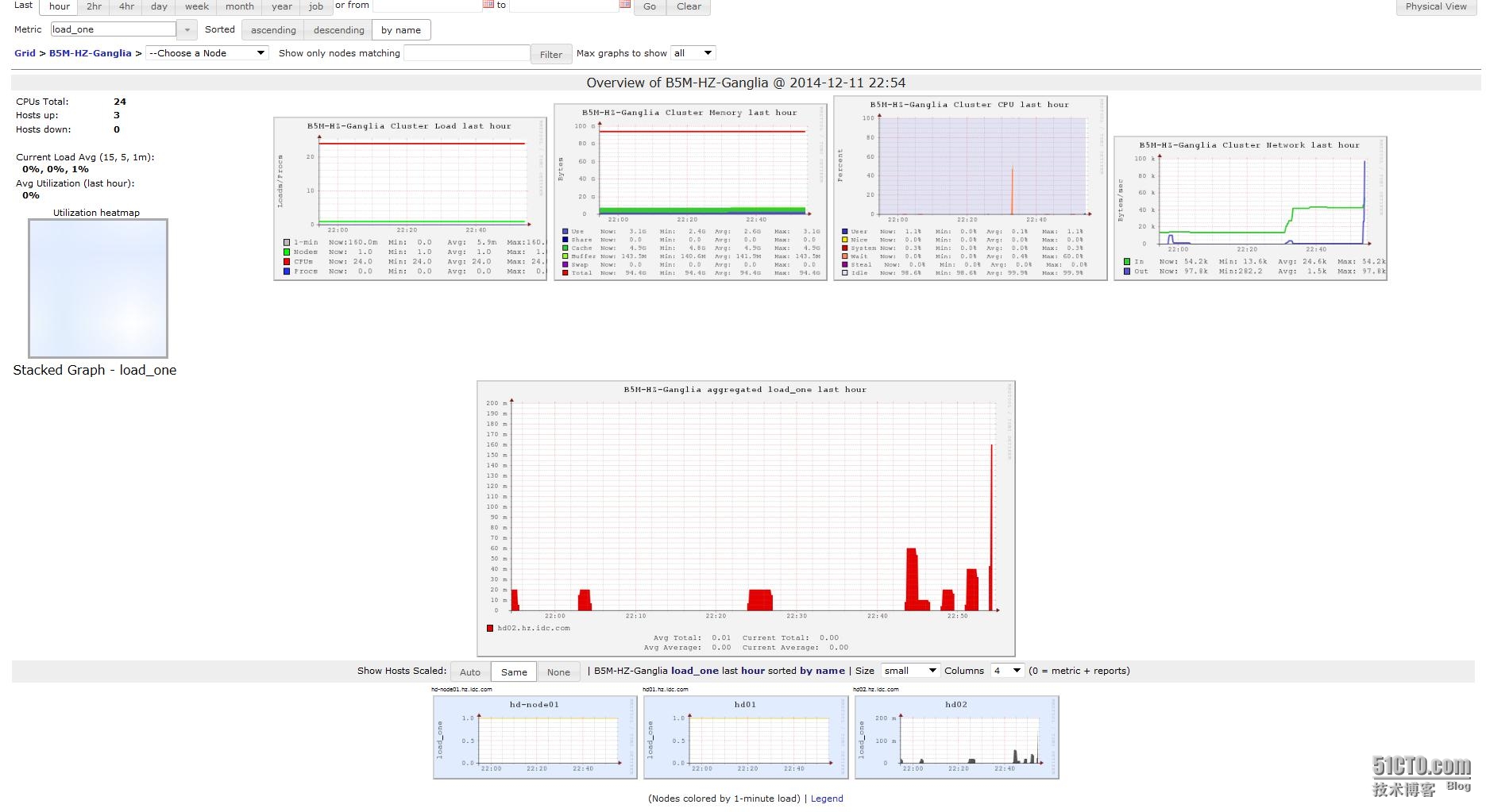
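Before installing anything, it can help to confirm that every node carries the full set of host entries. The following is a minimal sketch, assuming a POSIX shell with awk; the function name `missing_hosts` is hypothetical and not part of any Hadoop tooling. It prints each expected hostname that has no uncommented entry in the given hosts file.

```shell
#!/bin/sh
# Hypothetical helper: print every expected hostname that has no entry in a hosts file.
# Usage: missing_hosts HOSTS_FILE HOSTNAME...
missing_hosts() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # Skip comment lines, then look for the name in any column after the IP address.
        if ! awk -v n="$name" '
                /^[[:space:]]*#/ { next }
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit (found ? 0 : 1) }' "$hosts_file"; then
            echo "$name"
        fi
    done
}
```

For example, `missing_hosts /etc/hosts sp-kvm01.hz.idc.com hd01.hz.idc.com hd-node01.hz.idc.com` prints nothing when all three names are present.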

Ganglia monitoring deployment

Reference documentation .....

http://10.20.120.22/ganglia

Hadoop deployment

ZooKeeper installation

yum install zookeeper zookeeper-server

mkdir /opt/zookeeper

/etc/zookeeper/conf/zoo.cfg

maxClientCnxns=50

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

dataDir=/opt/zookeeper

# the port at which the clients will connect

clientPort=2181

server.1=sp-kvm01.hz.idc.com:2888:3888

server.2=sp-kvm02.hz.idc.com:2888:3888

server.3=sp-kvm03.hz.idc.com:2888:3888

Create the myid file on each node

# echo 1 > /opt/zookeeper/myid # use 2 on the second node, 3 on the third

# chown -R zookeeper:zookeeper /opt/zookeeper

Initialize ZooKeeper

# /etc/init.d/zookeeper-server init --myid=1 # use 2 on the second node, 3 on the third
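The myid value on each node has to match the N of that node's `server.N=` line in zoo.cfg. Rather than typing it by hand, it could be looked up from the file. This is a rough sketch, assuming a POSIX shell with awk; `myid_for_host` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the N of the "server.N=host:2888:3888" line that names HOSTNAME.
# Usage: myid_for_host ZOO_CFG HOSTNAME
myid_for_host() {
    # Split each line on "=" and ":" so that field 1 is "server.N" and field 2 is the host.
    awk -F'[=:]' -v h="$2" '
        $1 ~ /^server\.[0-9]+$/ && $2 == h {
            sub(/^server\./, "", $1)
            print $1
        }' "$1"
}
```

A possible use is `myid_for_host /etc/zookeeper/conf/zoo.cfg "$(hostname -f)"`, which assumes `hostname -f` returns the same fully qualified name that zoo.cfg uses. An empty result means the host is not listed.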

Start

/etc/init.d/zookeeper-server start

Check the status

[ops@sp-kvm01 ~]# zookeeper-server status

JMX enabled by default

Using config: /etc/zookeeper/conf/zoo.cfg

Mode: follower
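In a healthy three-node ensemble, one node reports `Mode: leader` and the other two report `Mode: follower`. To compare nodes quickly, the mode can be pulled out of the status output. This is a small sketch, assuming the output format shown above; `zk_mode` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: read "zookeeper-server status" output on stdin
# and print only the value of the "Mode:" line.
zk_mode() {
    awk -F': *' '$1 == "Mode" { print $2 }'
}
```

It would be used as `zookeeper-server status 2>&1 | zk_mode` on each node.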

Journalnode

#yum -y install hadoop-hdfs-journalnode

#mkdir -p /data/1/dfs/jn && chown -R hdfs:hdfs /data/1/dfs/jn

NameNode

#yum -y install hadoop-hdfs-namenode hadoop-hdfs-zkfc hadoop-client

DataNode

#yum -y install hadoop-hdfs-datanode

#set namenode datanode dir

#mkdir -p /data/1/dfs/nn /data/{1,2}/dfs/dn

#chown -R hdfs:hdfs /data/1/dfs/nn /data/{1,2}/dfs/dn

#chmod 700 /data/1/dfs/nn /data/{1,2}/dfs/dn

#chmod go-rx /data/1/dfs/nn

Configuration file reference (core-site.xml)

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hzcluster</value>

</property>

<property>

<name>fs.trash.interval</name>

<value>120</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>65536</value>

</property>

<property>

<name>io.compression.codecs</name>

<value>org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.BZip2Codec,org.apache.hadoop.io.compress.DeflateCodec,org.apache.hadoop.io.compress.SnappyCodec,org.apache.hadoop.io.compress.Lz4Codec</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>sp-kvm01.hz.idc.com:2181,sp-kvm02.hz.idc.com:2181,sp-kvm03.hz.idc.com:2181</value>

</property>

<property>

<name>ha.zookeeper.session-timeout.ms</name>

<value>10000</value>

<description>ZooKeeper session timeout, in milliseconds</description>

</property>

<property>

<name>hadoop.security.authentication</name>

<value>simple</value>

</property>

<property>

<name>hadoop.rpc.protection</name>

<value>authentication</value>

</property>

<property>

<name>hadoop.security.auth_to_local</name>

<value>DEFAULT</value>

</property>

<!--set httpFS-->

<property>

<name>hadoop.proxyuser.httpfs.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.httpfs.groups</name>

<value>*</value>

</property>

</configuration>
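The `ha.zookeeper.quorum` value should list every member of the ZooKeeper ensemble from zoo.cfg, each with the client port. Building the string from a host list avoids copy-and-paste slips such as repeating one host. This is a minimal sketch in POSIX shell; `zk_quorum` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: join hostnames into a "host:port,host:port,..." quorum string.
# Usage: zk_quorum PORT HOSTNAME...
zk_quorum() {
    port=$1
    shift
    quorum=
    for host in "$@"; do
        # Prepend a comma only when the string already holds an entry.
        quorum="${quorum:+$quorum,}${host}:${port}"
    done
    echo "$quorum"
}
```

Calling `zk_quorum 2181 sp-kvm01.hz.idc.com sp-kvm02.hz.idc.com sp-kvm03.hz.idc.com` yields the three-host value for this cluster.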

#vim /etc/hadoop/conf/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>67108864</value>

</property>

<property>

<name>dfs.permissions.superusergroup</name>

<value>hadoop</value>

</property>

<!-- set NameNode-->

<property>

<name>dfs.namenode.name.dir</name>

<value>/data/1/dfs/nn</value>

</property>

<!-- set DataNode-->

<property>

<name>dfs.datanode.data.dir</name>

<value>/data/2/dfs/dn</value>

</property>

<!-- set nn id -->

<property>

<name>dfs.nameservices</name>

<value>hzcluster</value>

</property>

<!-- set nn RPC address -->

<property>

<name>dfs.ha.namenodes.hzcluster</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.hzcluster.nn1</name>

<value>hd01.hz.idc.com:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.hzcluster.nn2</name>

<value>hd02.hz.idc.com:8020</value>

</property>

<!-- set nn http address -->

<property>

<name>dfs.namenode.http-address.hzcluster.nn1</name>

<value>hd01.hz.idc.com:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.hzcluster.nn2</name>

<value>hd02.hz.idc.com:50070</value>

</property>

<!-- set JournalNodes log dir -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hd01.hz.idc.com:8485;hd02.hz.idc.com:8485;hd-node01.hz.idc.com:8485/hzcluster</value>

</property>

<!-- ===JournalNode local === -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/data/1/dfs/jn</value>

</property>

<!-- ===Client Failover Configuration=== -->

<property>

<name>dfs.client.failover.proxy.provider.hzcluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- ===Fencing Configuration=== -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/var/lib/hadoop-hdfs/.ssh/id_rsa</value>

</property>

<!-- set zookeeper -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>sp-kvm01.hz.idc.com:2181,sp-kvm02.hz.idc.com:2181,sp-kvm03.hz.idc.com:2181</value>

</property>

<!-- set RackAware -->

<property>

<name>topology.script.file.name</name>

<value>/etc/hadoop/conf/RackAware.py</value>

</property>

<property>

<name>dfs.datanode.du.reserved</name>

<value>10737418240</value>

<description>Reserve 10 GB of storage space</description>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>100</value>

<description>Number of NameNode handler threads</description>

</property>

<property>

<name>dfs.datanode.handler.count</name>

<value>100</value>

<description>Number of DataNode handler threads</description>

</property>

<property>

<name>dfs.datanode.max.xcievers</name>

<value>10240</value>

<description>Number of handles the DataNode may open</description>

</property>

<property>

<name>dfs.socket.timeout</name>

<value>3000000</value>

</property>

<property>

<name>dfs.datanode.socket.write.timeout</name>

<value>3000000</value>

</property>

<property>

<name>dfs.balance.bandwidthPerSec</name>

<value>20971520</value>

<description>Maximum bandwidth used by the balancer</description>

</property>

<!--del node-->

<property>

<name>dfs.hosts.exclude</name>

<value>/etc/hadoop/conf/excludes</value>

</property>

</configuration>
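Several values in hdfs-site.xml are raw byte counts, which are easy to mistype. The sketch below recomputes them with shell arithmetic, assuming a shell with 64-bit integers (true for bash and dash on current 64-bit Linux); `mib` and `gib` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helpers: convert a size in MiB or GiB to bytes.
mib() { echo $(( $1 * 1024 * 1024 )); }
gib() { echo $(( $1 * 1024 * 1024 * 1024 )); }

mib 64    # dfs.blocksize                -> 67108864
gib 10    # dfs.datanode.du.reserved     -> 10737418240
mib 20    # dfs.balance.bandwidthPerSec  -> 20971520 (bytes per second)
```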

vim /etc/hadoop/conf/hadoop-metrics2.properties

*.sink.ganglia.class=org.apache.hadoop.metrics2.sink.ganglia.GangliaSink31

*.sink.ganglia.period=10

*.sink.ganglia.slope=jvm.metrics.gcCount=zero,jvm.metrics.memHeapUsedM=both

*.sink.ganglia.dmax=jvm.metrics.threadsBlocked=70,jvm.metrics.memHeapUsedM=40

namenode.sink.ganglia.servers=10.20.120.22:8649

resourcemanager.sink.ganglia.servers=10.20.120.22:8649

datanode.sink.ganglia.servers=10.20.120.22:8649

nodemanager.sink.ganglia.servers=10.20.120.22:8649

maptask.sink.ganglia.servers=10.20.120.22:8649

reducetask.sink.ganglia.servers=10.20.120.22:8649
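The six `*.sink.ganglia.servers` lines differ only in the daemon prefix, so they could be generated from a list when the gmond address changes. This is a small sketch in POSIX shell; `ganglia_sink_lines` is a hypothetical helper, and the output is meant to be reviewed before pasting into hadoop-metrics2.properties.

```shell
#!/bin/sh
# Hypothetical helper: print one "<daemon>.sink.ganglia.servers=<host:port>" line per prefix.
# Usage: ganglia_sink_lines GMOND_ADDRESS DAEMON...
ganglia_sink_lines() {
    addr=$1
    shift
    for daemon in "$@"; do
        echo "${daemon}.sink.ganglia.servers=${addr}"
    done
}
```

For this cluster the call would be `ganglia_sink_lines 10.20.120.22:8649 namenode resourcemanager datanode nodemanager maptask reducetask`.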

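1) Configure sshfence between the NameNodes

2) The NameNode connects to the JournalNodes at startup, so start them first: /etc/init.d/hadoop-hdfs-journalnode start

3) Format the NameNode on the primary #sudo -u hdfs hadoop namenode -format

4) Start the primary NN #service hadoop-hdfs-namenode start

Sync the standby #sudo -u hdfs hadoop namenode -bootstrapStandby

Start the standby #service hadoop-hdfs-namenode start

5) Configure automatic failover

Run this on either NameNode; it creates a znode used for automatic failover.

#hdfs zkfc -formatZK

#Start zkfc (on both NNs) #service hadoop-hdfs-zkfc start

6) Start the DataNodes

Create the /tmp directory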

#sudo -u hdfs hadoop fs -mkdir /tmp

#sudo -u hdfs hadoop fs -chmod -R 1777 /tmp

#hadoop fs -ls /
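The order of the NameNode steps matters, and it differs between the two NameNodes. The sketch below only prints the commands from the steps above for one role and executes nothing. It assumes the JournalNodes are already running and that the standby is bootstrapped only after the primary NameNode has started. `ha_plan` is a hypothetical name, and placing `hdfs zkfc -formatZK` on the primary is an arbitrary choice, since either NameNode works.

```shell
#!/bin/sh
# Hypothetical helper: print, in order, the HA bring-up commands for one NameNode role.
# ROLE is "primary" or "standby"; the commands are printed, not run.
ha_plan() {
    case $1 in
        primary)
            echo "sudo -u hdfs hadoop namenode -format"
            echo "service hadoop-hdfs-namenode start"
            echo "hdfs zkfc -formatZK"
            echo "service hadoop-hdfs-zkfc start"
            ;;
        standby)
            echo "sudo -u hdfs hadoop namenode -bootstrapStandby"
            echo "service hadoop-hdfs-namenode start"
            echo "service hadoop-hdfs-zkfc start"
            ;;
        *)
            echo "usage: ha_plan primary|standby" >&2
            return 1
            ;;
    esac
}
```

Running `ha_plan primary` on hd01 and `ha_plan standby` on hd02 gives a per-node checklist to follow by hand.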

##############################################################

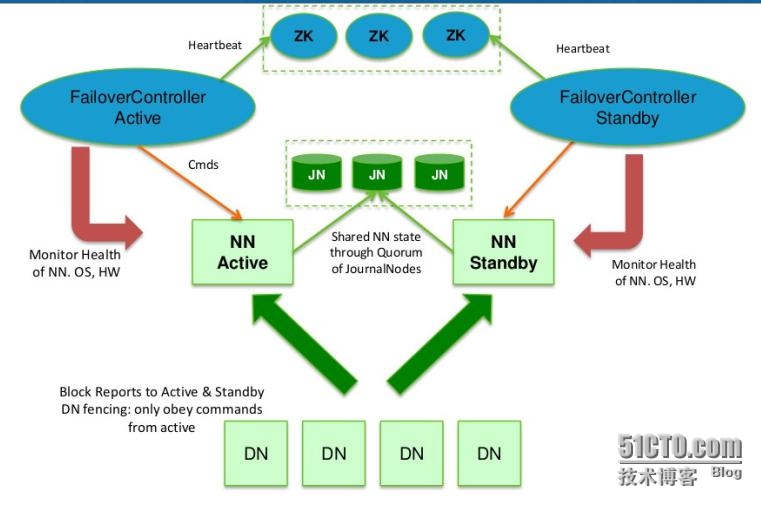

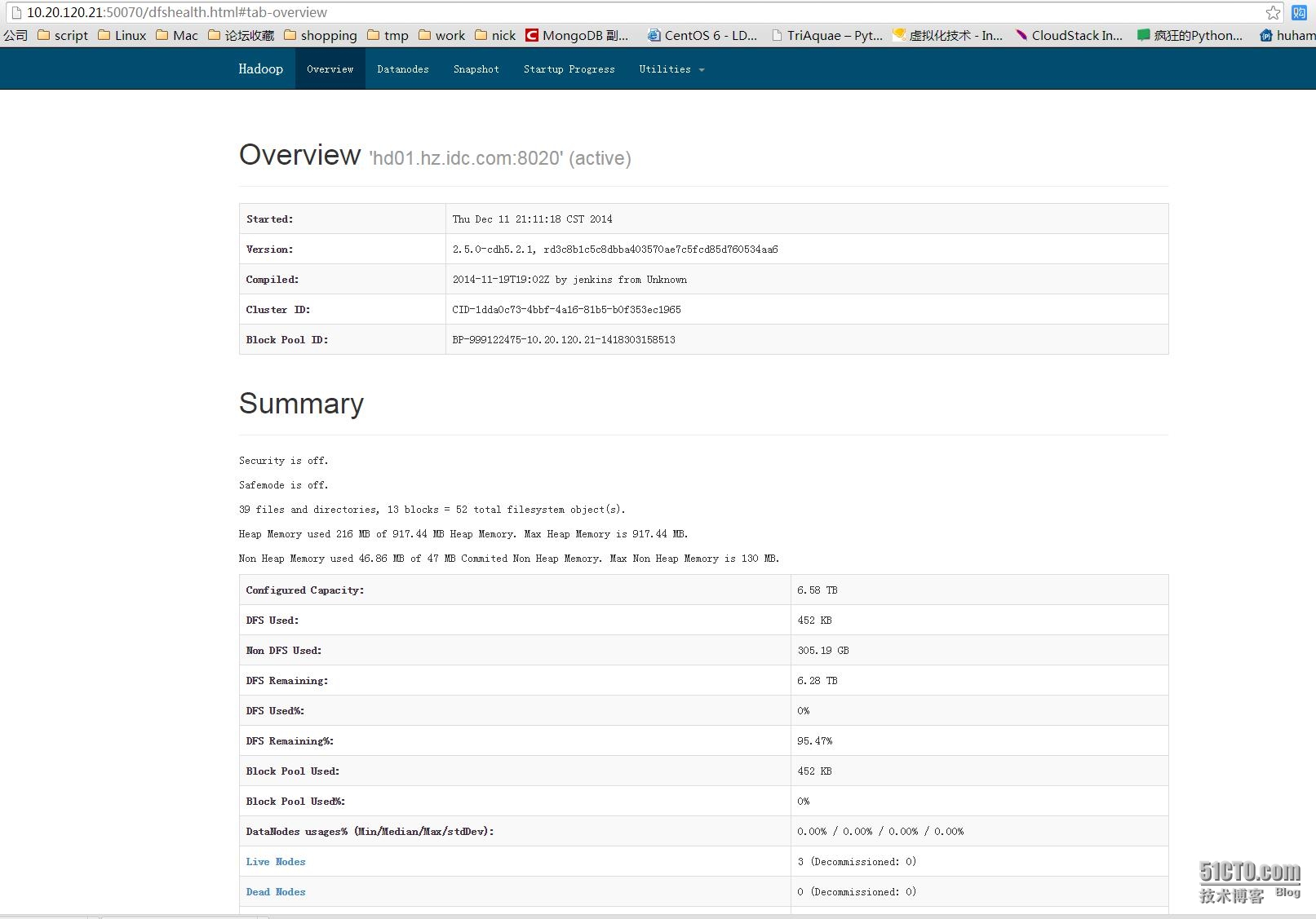

NameNode HA automatic failover test

##############################################################

Check the NameNode state

[root@master01 ~]# sudo -u hdfs hdfs haadmin -getServiceState nn1

active

[root@master01 ~]# sudo -u hdfs hdfs haadmin -getServiceState nn2

standby

Test high availability

# ps -ef | grep namenode

hdfs 11499 1 1 17:27 ? 00:00:11 /usr/java/jdk1.6.0_31/bin/java

# kill -9 11499

Check the state

# sudo -u hdfs hdfs haadmin -getServiceState nn2

active

# /etc/init.d/hadoop-hdfs-namenode start

Starting Hadoop namenode: [ OK ]

# sudo -u hdfs hdfs haadmin -getServiceState nn1

standby

Manual failover

# sudo -u hdfs hdfs haadmin -failover nn2 nn1

# sudo -u hdfs hdfs haadmin -getServiceState nn1

active
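After killing the active NameNode, the standby may take a few seconds to become active, so a single state check can be misleading. This is a rough sketch of a polling loop in POSIX shell; `wait_for_state` is a hypothetical helper, and the attempt count and delay in the usage line are arbitrary.

```shell
#!/bin/sh
# Hypothetical helper: run COMMAND repeatedly until it prints EXPECTED
# or the attempts run out. Returns 0 on a match, 1 otherwise.
# Usage: wait_for_state EXPECTED ATTEMPTS DELAY_SECONDS COMMAND [ARG...]
wait_for_state() {
    expected=$1
    attempts=$2
    delay=$3
    shift 3
    while [ "$attempts" -gt 0 ]; do
        state=$("$@" 2>/dev/null)
        if [ "$state" = "$expected" ]; then
            return 0
        fi
        attempts=$((attempts - 1))
        # Sleep only if another attempt remains.
        if [ "$attempts" -gt 0 ]; then
            sleep "$delay"
        fi
    done
    return 1
}
```

For the test above, a call could look like `wait_for_state active 30 2 sudo -u hdfs hdfs haadmin -getServiceState nn2`.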

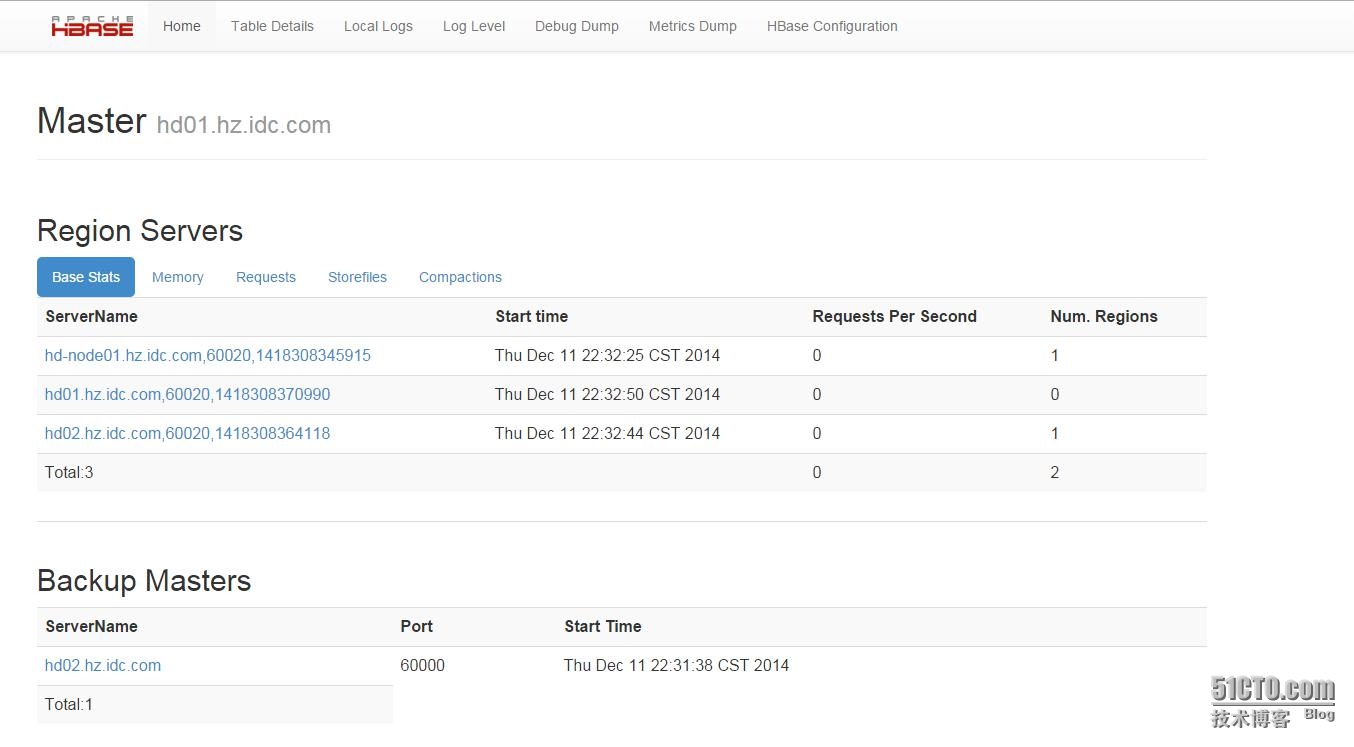

HBase deployment

#yum -y install hbase-master hbase-rest hbase-thrift

RegionServer

#yum -y install hbase-regionserver

Create the HBase directory

# sudo -u hdfs hadoop fs -mkdir /hbase

# sudo -u hdfs hadoop fs -chown hbase /hbase

vim hbase-site.xml

<configuration>

<property>

<name>hbase.rest.port</name>

<value>8080</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://hzcluster/hbase</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>sp-kvm01.hz.idc.com,sp-kvm02.hz.idc.com,sp-kvm03.hz.idc.com</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<!-- set parameter -->

<property>

<name>hbase.client.write.buffer</name>

<value>2097152</value>

</property>

<property>

<name>hbase.client.pause</name>

<value>1000</value>

</property>

<property>

<name>hbase.client.retries.number</name>

<value>10</value>

</property>

<property>

<name>hbase.client.scanner.caching</name>

<value>1</value>

</property>

<property>

<name>hbase.client.keyvalue.maxsize</name>

<value>10485760</value>

</property>

<property>

<name>hbase.rpc.timeout</name>

<value>60000</value>

</property>

<property>

<name>hbase.security.authentication</name>

<value>simple</value>

</property>

<property>

<name>zookeeper.session.timeout</name>

<value>60000</value>

</property>

</configuration>

[root@hd01 conf]# cat regionservers

hd01.hz.idc.com

hd02.hz.idc.com

hd-node01.hz.idc.com

vim hbase-env.sh

# export HBASE_MANAGES_ZK=true

export HBASE_MANAGES_ZK=false

Start the HBase services

On the hadoop-master nodes only

# sudo /etc/init.d/hbase-master start

# sudo /etc/init.d/hbase-thrift start

# sudo /etc/init.d/hbase-rest start

On the hadoop-node hosts only

# sudo /etc/init.d/hbase-regionserver start
