
Python Crawler Framework Scrapy Study Notes 10.2 ------- [Hands-on] Scraping the Details of All Items in a Tmall Shop

Part 2: Extracting the links to item detail pages from the start page

1. Create the project and generate a spider template. This example uses CrawlSpider.

2. Test the regular expression for selecting the links in the scrapy shell.

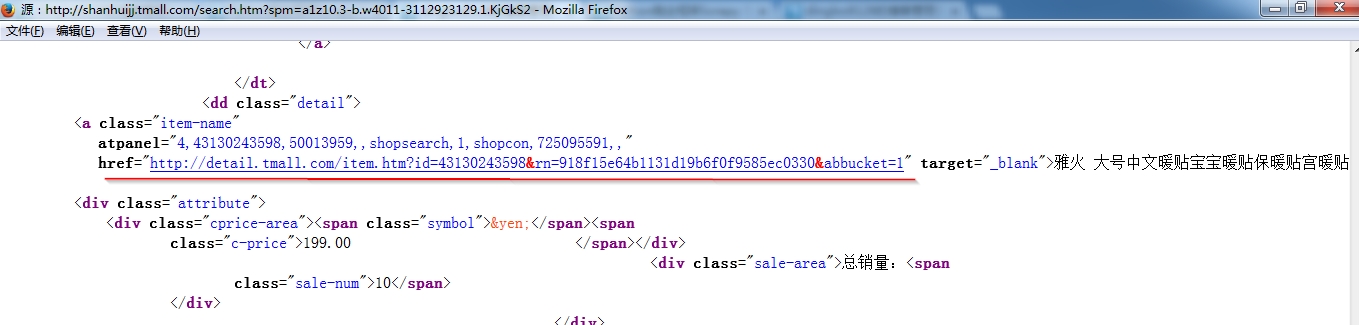

First, use Firefox with Firebug to inspect the page source and locate the links to extract.

Then open the page in the shell:

scrapy shell http://shanhuijj.tmall.com/search.htm?spm=a1z10.3-b.w4011-3112923129.1.KjGkS2

This gives the following regular expression: `http://detail.tmall.com/item.htm\?id=\d+&rn=\w+&abbucket=\d+`
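The pattern can also be checked outside Scrapy. The sketch below (Python 3, standard library only) tests it with `re.search`, which, to my understanding, is how LinkExtractor applies `allow`. The sample href is hypothetical: it reuses the parameter values from the crawl log later in this article, arranged in the order the regex expects. `sort_query` is a hypothetical helper that only imitates the query-sorting part of Scrapy's URL canonicalization.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Pattern from this article. The dots in "detail.tmall.com" and "item.htm" are
# unescaped, so each matches any character; escaping them (\.) is stricter.
ALLOW = re.compile(r"http://detail.tmall.com/item.htm\?id=\d+&rn=\w+&abbucket=\d+")

# Hypothetical href: parameter values from the crawl log, in the regex's order.
RAW = ("http://detail.tmall.com/item.htm?id=10910218037"
       "&rn=732c60a3a253eff86871e55dabc44a61&abbucket=17")


def allowed(url):
    """True if the allow pattern occurs anywhere in the URL (re.search)."""
    return ALLOW.search(url) is not None


def sort_query(url):
    """Hypothetical helper: sort query parameters, as canonicalization does."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query)))
    return urlunsplit(parts._replace(query=query))


print(allowed(RAW))              # True
print(sort_query(RAW))
print(allowed(sort_query(RAW)))  # False
```

The sorted form, `http://detail.tmall.com/item.htm?abbucket=17&id=10910218037&rn=732c60a3a253eff86871e55dabc44a61`, is exactly how the item URL appears in the crawl log, even though the rule did pick it up. A plausible explanation, assuming Scrapy 0.24 behaves as its source suggests, is that LinkExtractor checks `allow` against the href as written in the page and only afterwards canonicalizes it (including sorting the query parameters), since `canonicalize=True` was the default in that version.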

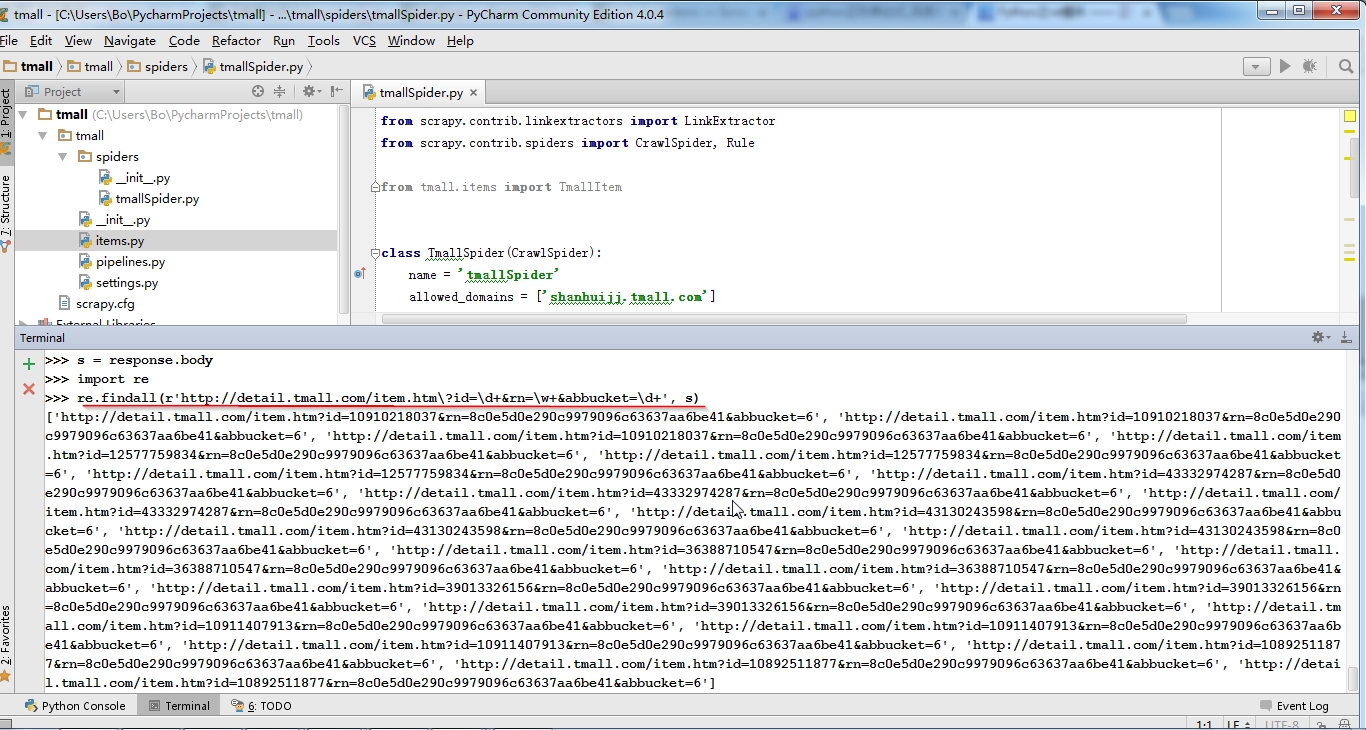

3. Test the regular expression above in the spider

My first version of the spider looked like this, but parse_item was never called:

```python
# -*- coding: utf-8 -*-
import scrapy
# Scrapy 0.24 import paths; from Scrapy 1.0 on these live in
# scrapy.linkextractors and scrapy.spiders instead.
from scrapy.contrib.linkextractors import LinkExtractor
from scrapy.contrib.spiders import CrawlSpider, Rule

from tmall.items import TmallItem


class TmallSpider(CrawlSpider):
    name = 'tmallSpider'
    allowed_domains = ['shanhuijj.tmall.com']
    start_urls = ['http://shanhuijj.tmall.com/search.htm?spm=a1z10.3-b.w4011-3112923129.1.KjGkS2/']

    rules = (
        # Extract item-detail links only from the item list area and pass each
        # page to parse_item; follow=False: links on those pages are not followed.
        Rule(LinkExtractor(allow=r'http://detail.tmall.com/item.htm\?id=\d+&rn=\w+&abbucket=\d+',
                           restrict_xpaths="//div[@class='J_TItems']/div/dl/dd[@class='detail']"),
             callback='parse_item', follow=False),
    )

    def parse_item(self, response):
        self.log("---------------------------------------------------------")
        item = TmallItem()
        item['url'] = response.url
        return item
```

The rule above adds a restrict_xpaths argument, which limits the part of the page in which matching URLs are searched for.
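Here is a rough, standard-library-only imitation of what restrict_xpaths does: links are collected only from the elements the XPath selects, and the allow pattern is then applied to those. ElementTree understands only a small XPath subset, so the expression is written with a leading `.//` instead of `//`; it also requires well-formed markup, which real shop pages rarely are (Scrapy uses lxml for this). The page fragment, every class name in it other than `J_TItems` and `detail`, and the `extract_links` helper are all hypothetical.

```python
import re
import xml.etree.ElementTree as ET

# Allow pattern from the rule above.
ALLOW = re.compile(r"http://detail.tmall.com/item.htm\?id=\d+&rn=\w+&abbucket=\d+")

# restrict_xpaths from the rule, with a leading "." because ElementTree
# only accepts relative paths.
RESTRICT = ".//div[@class='J_TItems']/div/dl/dd[@class='detail']"

# Hypothetical, simplified page: one matching link outside the item list,
# two inside it, but only one of those inside a dd with class="detail".
PAGE = """<html><body>
<div class="hot"><a href="http://detail.tmall.com/item.htm?id=1&amp;rn=x&amp;abbucket=1">ad</a></div>
<div class="J_TItems">
  <div>
    <dl>
      <dd class="detail"><a href="http://detail.tmall.com/item.htm?id=111&amp;rn=abc&amp;abbucket=17">item A</a></dd>
      <dd class="rates"><a href="http://detail.tmall.com/item.htm?id=222&amp;rn=def&amp;abbucket=17">reviews</a></dd>
    </dl>
  </div>
</div>
</body></html>"""


def extract_links(html, restrict=None):
    """Collect hrefs matching ALLOW, optionally only inside `restrict` regions."""
    root = ET.fromstring(html)
    regions = root.findall(restrict) if restrict else [root]
    links = []
    for region in regions:
        for a in region.iter("a"):
            href = a.get("href", "")
            if ALLOW.search(href):
                links.append(href)
    return links


print(len(extract_links(PAGE)))       # 3
print(extract_links(PAGE, RESTRICT))  # ['http://detail.tmall.com/item.htm?id=111&rn=abc&abbucket=17']
```

Without the restriction, the link in the unrelated div and the one in the second dd match the pattern too; with it, only the link inside `dd class="detail"` survives.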

Running the spider produces the following output:

```
C:\Users\Bo\PycharmProjects\tmall>scrapy crawl tmallSpider
2015-01-11 22:22:59+0800 [scrapy] INFO: Scrapy 0.24.4 started (bot: tmall)
2015-01-11 22:22:59+0800 [scrapy] INFO: Optional features available: ssl, http11
2015-01-11 22:22:59+0800 [scrapy] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'tmall.spiders', 'SPIDER_MODULES': ['tmall.spiders'], 'BOT_NAME': 'tmall'}
2015-01-11 22:22:59+0800 [scrapy] INFO: Enabled extensions: LogStats, TelnetConsole, CloseSpider, WebService, CoreStats, SpiderState
2015-01-11 22:22:59+0800 [scrapy] INFO: Enabled downloader middlewares: HttpAuthMiddleware, DownloadTimeoutMiddleware, UserAgentMiddleware, RetryMiddleware, DefaultHeadersMiddleware, MetaRefreshMiddleware, HttpCompressionMiddleware, RedirectMiddleware, CookiesMiddleware, ChunkedTransferMiddleware, DownloaderStats
2015-01-11 22:22:59+0800 [scrapy] INFO: Enabled spider middlewares: HttpErrorMiddleware, OffsiteMiddleware, RefererMiddleware, UrlLengthMiddleware, DepthMiddleware
2015-01-11 22:22:59+0800 [scrapy] INFO: Enabled item pipelines:
2015-01-11 22:22:59+0800 [tmallSpider] INFO: Spider opened
2015-01-11 22:22:59+0800 [tmallSpider] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)
2015-01-11 22:22:59+0800 [scrapy] DEBUG: Telnet console listening on 127.0.0.1:6024
2015-01-11 22:22:59+0800 [scrapy] DEBUG: Web service listening on 127.0.0.1:6081
2015-01-11 22:22:59+0800 [tmallSpider] DEBUG: Redirecting (302) to <GET http://jump.taobao.com/jump?target=http%3a%2f%2fshanhuijj.tmall.com%2fsearch.htm%3fspm%3da1z10.3-b.w4011-3112923129.1.KjGkS2%2f%26tbpm%3d1> from <GET http://shanhuijj.tmall.com/search.htm?spm=a1z10.3-b.w4011-3112923129.1.KjGkS2/>
2015-01-11 22:22:59+0800 [tmallSpider] DEBUG: Redirecting (302) to <GET http://pass.tmall.com/add?_tb_token_=usBqQxmPNGXG&cookie2=1f684ca608c88f56e0df3eec780bd223&t=323bcd0702e9fe03643e52efdb9f44bc&target=http%3a%2f%2fshanhuijj.tmall.com%2fsearch.htm%3fspm%3da1z10.3-b.w4011-3112923129.1.KjGkS2%2f%26tbpm%3d1&pacc=929hsh3NK_FxsF9MJ5b50Q==&opi=183.128.158.212&tmsc=1420986185422946> from <GET http://jump.taobao.com/jump?target=http%3a%2f%2fshanhuijj.tmall.com%2fsearch.htm%3fspm%3da1z10.3-b.w4011-3112923129.1.KjGkS2%2f%26tbpm%3d1>
2015-01-11 22:22:59+0800 [tmallSpider] DEBUG: Redirecting (302) to <GET http://shanhuijj.tmall.com/search.htm?spm=a1z10.3-b.w4011-3112923129.1.KjGkS2/&tbpm=1> from <GET http://pass.tmall.com/add?_tb_token_=usBqQxmPNGXG&cookie2=1f684ca608c88f56e0df3eec780bd223&t=323bcd0702e9fe03643e52efdb9f44bc&target=http%3a%2f%2fshanhuijj.tmall.com%2fsearch.htm%3fspm%3da1z10.3-b.w4011-3112923129.1.KjGkS2%2f%26tbpm%3d1&pacc=929hsh3NK_FxsF9MJ5b50Q==&opi=183.128.158.212&tmsc=1420986185422946>
2015-01-11 22:22:59+0800 [tmallSpider] DEBUG: Redirecting (302) to <GET http://shanhuijj.tmall.com/search.htm?spm=a1z10.3-b.w4011-3112923129.1.KjGkS2/> from <GET http://shanhuijj.tmall.com/search.htm?spm=a1z10.3-b.w4011-3112923129.1.KjGkS2/&tbpm=1>
2015-01-11 22:22:59+0800 [tmallSpider] DEBUG: Crawled (200) <GET http://shanhuijj.tmall.com/search.htm?spm=a1z10.3-b.w4011-3112923129.1.KjGkS2/> (referer: None)
2015-01-11 22:22:59+0800 [tmallSpider] DEBUG: Filtered offsite request to 'detail.tmall.com': <GET http://detail.tmall.com/item.htm?abbucket=17&id=10910218037&rn=732c60a3a253eff86871e55dabc44a61>
2015-01-11 22:22:59+0800 [tmallSpider] INFO: Closing spider (finished)
2015-01-11 22:22:59+0800 [tmallSpider] INFO: Dumping Scrapy stats:
{'downloader/request_bytes': 1864,
 'downloader/request_count': 5,
 'downloader/request_method_count/GET': 5,
 'downloader/response_bytes': 74001,
 'downloader/response_count': 5,
 'downloader/response_status_count/200': 1,
 'downloader/response_status_count/302': 4,
 'finish_reason': 'finished',
 'finish_time': datetime.datetime(2015, 1, 11, 14, 22, 59, 808000),
 'log_count/DEBUG': 8,
 'log_count/INFO': 7,
 'offsite/domains': 1,
 'offsite/filtered': 8,
 'request_depth_max': 1,
 'response_received_count': 1,
 'scheduler/dequeued': 5,
 'scheduler/dequeued/memory': 5,
 'scheduler/enqueued': 5,
 'scheduler/enqueued/memory': 5,
 'start_time': datetime.datetime(2015, 1, 11, 14, 22, 59, 539000)}
2015-01-11 22:22:59+0800 [tmallSpider] INFO: Spider closed (finished)
```

The output contains 'offsite/domains': 1 and 'offsite/filtered': 8, which shows that the rule did extract 8 links. So why was parse_item never called?

For the answer, compare the revised code below with the original and find the difference yourself:

```python
# -*- coding: utf-8 -*-
import scrapy
# Scrapy 0.24 import paths; from Scrapy 1.0 on these live in
# scrapy.linkextractors and scrapy.spiders instead.
from scrapy.contrib.linkextractors import LinkExtractor
from scrapy.contrib.spiders import CrawlSpider, Rule

from tmall.items import TmallItem


class TmallSpider(CrawlSpider):
    name = 'tmallSpider'
    allowed_domains = ['shanhuijj.tmall.com', 'detail.tmall.com']
    start_urls = ['http://shanhuijj.tmall.com/search.htm?spm=a1z10.3-b.w4011-3112923129.1.KjGkS2/']

    rules = (
        # Extract item-detail links only from the item list area and pass each
        # page to parse_item; follow=False: links on those pages are not followed.
        Rule(LinkExtractor(allow=r'http://detail.tmall.com/item.htm\?id=\d+&rn=\w+&abbucket=\d+',
                           restrict_xpaths="//div[@class='J_TItems']/div/dl/dd[@class='detail']"),
             callback='parse_item', follow=False),
    )

    def parse_item(self, response):
        self.log("---------------------------------------------------------")
        item = TmallItem()
        item['url'] = response.url
        return item
```
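The only change is that 'detail.tmall.com' was added to allowed_domains. Every item link points to detail.tmall.com, while the first version allowed only shanhuijj.tmall.com, so OffsiteMiddleware (listed among the enabled spider middlewares in the log) dropped every request the rule produced. That is the "Filtered offsite request" DEBUG line; as far as I know, the middleware logs that message only once per domain, which is why a single line corresponds to 'offsite/filtered': 8. The sketch below approximates the host check with the standard library. `host_regex` and `is_offsite` are hypothetical names, and the pattern follows the idea of Scrapy's check (the host equals an allowed domain or is a subdomain of one) rather than reproducing its exact code.

```python
import re
from urllib.parse import urlparse


def host_regex(allowed_domains):
    """Match a host equal to an allowed domain or any subdomain of it."""
    domains = "|".join(re.escape(d) for d in allowed_domains if d)
    return re.compile(r"^(.*\.)?(%s)$" % domains)


def is_offsite(url, allowed_domains):
    """True if the URL's host is outside allowed_domains."""
    host = urlparse(url).hostname or ""
    return host_regex(allowed_domains).search(host) is None


# Item URL as printed in the crawl log of this article.
ITEM = ("http://detail.tmall.com/item.htm?abbucket=17&id=10910218037"
        "&rn=732c60a3a253eff86871e55dabc44a61")

print(is_offsite(ITEM, ["shanhuijj.tmall.com"]))                      # True: dropped
print(is_offsite(ITEM, ["shanhuijj.tmall.com", "detail.tmall.com"]))  # False: kept
print(is_offsite(ITEM, ["tmall.com"]))                                # False: subdomain
```

Using allowed_domains = ['tmall.com'] would also cover both hosts, at the cost of admitting every other tmall.com subdomain.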
