OpenStack Project [Day 24]: OpenStack Mitaka Deployment

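Preface:

Deploying OpenStack is very simple, provided you have a solid theoretical foundation. I have always believed that in technical work, theory must guide practice. The installation guides scattered across the web will all get you a basic private cloud, but you may have noticed that many of them repeat the same configuration in several places. Why the repetition? Where does a setting belong, and where does it not? Which parameters really need to be set? Many of those authors do not know themselves, so here I want to set the record straight.

Introduction: this case is a basic three-node deployment; I will write up a clustered case later when time permits.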

I. Network:

1. Management network: 172.16.209.0/24

2. Data network: 1.1.1.0/24

II. Operating system: CentOS Linux release 7.2.1511 (Core)

III. Kernel: 3.10.0-327.el7.x86_64

IV. OpenStack release: Mitaka

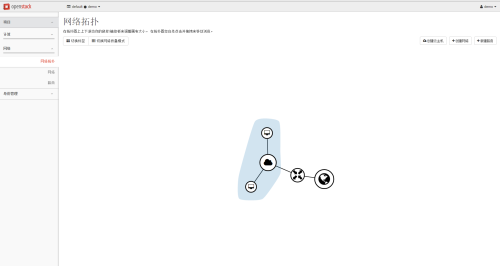

Result: OpenStack Mitaka deployment

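Conventions:

1. When editing a configuration file, never append a comment to the end of a setting line; put the comment on the line above or below it.

2. Always add new settings directly under the relevant section header; do not edit the commented-out sample lines in place.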
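Convention 1 is easy to break by accident, so here is a minimal sketch of a check for it. The function name `find_inline_comments` is hypothetical and not part of any OpenStack tool; it only assumes the usual `key = value` layout of the configuration files used in this post.

```shell
# Hypothetical helper: print (with line numbers) every setting line that
# carries a trailing "#" comment, e.g. "my_ip = 10.0.0.1 #management ip".
find_inline_comments() {
    # First grep: a "=" followed later by whitespace and a "#".
    # Second grep: drop lines that are full-line comments to begin with.
    grep -nE '=[^#]*[[:space:]]#' "$1" | grep -vE '^[0-9]+:[[:space:]]*#'
}
```

For example, `find_inline_comments /etc/nova/nova.conf` prints the offending lines and returns a non-zero status when there are none.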

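PART1: Environment preparation (run on all nodes)

I.

Give every machine a static IP, add name resolution to /etc/hosts on every machine, set each machine's hostname, and disable firewalld and SELinux.

Optional: on the controller node, set up SSH key login to the other nodes (this makes the later steps easier; in a real environment it is also well worth preparing a dedicated management host). Edit /etc/hosts on the controller node and scp it to the other nodes.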

/etc/hosts

    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    172.16.209.115 controller01
    172.16.209.117 compute01
    172.16.209.119 network02

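II. Set up the package source (run on all nodes), that is, configure a yum repository. Choose one of the two methods below to suit your situation; method 1 is recommended.

Method 1: a custom yum repository

I built this repository myself from packages downloaded from the official site. The advantage of a custom repository is strict control over package versions, which keeps every host in the platform consistent and predictable. The procedure is as follows: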

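- Pick a server to act as the yum repository (it can double as the cobbler or PXE host)
- Upload openstack-mitaka-rpms.tar.gz to it
- tar xvf openstack-mitaka-rpms.tar.gz -C /
- Install httpd on this machine, start it, and enable it at boot
- ln -s /mitaka-rpms /var/www/html/

Then configure the yum repository on every machine: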

    [mitaka]
    name=mitaka repo
    baseurl=http://172.16.209.100/mitaka-rpms/
    enabled=1
    gpgcheck=0
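Since the same repository definition has to land on every node, it can help to wrap it in a small function. The sketch below is only an illustration: the function name `write_mitaka_repo` and the default file name /etc/yum.repos.d/mitaka.repo are assumptions, not something the steps above prescribe.

```shell
# Hypothetical helper: write the [mitaka] repository definition to a file.
write_mitaka_repo() {
    # $1: destination file; the default name below is an assumption
    dest=${1:-/etc/yum.repos.d/mitaka.repo}
    cat > "$dest" <<'EOF'
[mitaka]
name=mitaka repo
baseurl=http://172.16.209.100/mitaka-rpms/
enabled=1
gpgcheck=0
EOF
}
```

Calling `write_mitaka_repo` as root on each node and then running `yum makecache` should be enough to pick up the repository.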

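Method 2: install the official repository package

The packages in the custom repository all come from the official site anyway. The official repository is updated frequently, though, and a single package update can introduce version-compatibility problems, which is why method 1 is recommended. For a test environment rather than production, method 2 is slightly more convenient.

On CentOS, run on all nodes:

    yum install centos-release-openstack-mitaka -y

On Red Hat, run on all nodes (remove the EPEL repository first, otherwise it conflicts with this one):

    yum install https://rdoproject.org/repos/rdo-release.rpm -y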

III. Build the yum cache and update the system (run on all nodes)

    yum makecache && yum install vim net-tools -y && yum update -y

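A quick note on update versus upgrade:

yum -y update

Upgrades all installed packages, including the kernel and the CentOS point release.

yum -y upgrade

Equivalent to yum update --obsoletes, so it also replaces packages that newer ones have obsoleted. With the stock CentOS 7 /etc/yum.conf (obsoletes=1) the two commands behave the same in practice; neither one holds the kernel back.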

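IV. Turn off yum automatic updates (run on all nodes)

After a minimal CentOS 7 install, yum downloads updates automatically by default. Many production systems do not want this, and it can be turned off by hand.

    [root@engine cron.weekly]# cd /etc/yum
    [root@engine yum]# ls
    fssnap.d pluginconf.d protected.d vars version-groups.conf yum-cron.conf yum-cron-hourly.conf

Edit yum-cron.conf and change download_updates = yes to no.

PS: if you would rather keep automatic updates on, you can install the priorities plugin to set repository priorities, so that updates come from the official repositories rather than from assorted third-party ones:

    yum install yum-plugin-priorities -y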

V. Pre-install packages (run on all nodes)

    yum install python-openstackclient -y
    yum install openstack-selinux -y

VI. Deploy the time service

Run on all nodes:

    yum install chrony -y

Controller node:

Edit /etc/chrony.conf:

    server ntp.staging.kycloud.lan iburst
    # management network subnet
    allow 172.16.209.0/24

Start the service:

    systemctl enable chronyd.service
    systemctl start chronyd.service

Other nodes:

Edit /etc/chrony.conf (use the controller node's IP):

    server 172.16.209.115 iburst

Start the service:

    systemctl enable chronyd.service
    systemctl start chronyd.service

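If the time zone is not Asia/Shanghai, change it:

    # Keep the hardware clock in local time (1); use 0 to keep it in UTC
    timedatectl set-local-rtc 1
    # Set the system time zone to Shanghai
    timedatectl set-timezone Asia/Shanghai

Leaving distribution differences aside and going one level lower, changing the time zone is simpler than you might expect:

    cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime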

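Verify:

Run on every machine:

    chronyc sources

An asterisk (*) in the S column means the node is synchronized. This may take a few minutes; the clocks must be in sync before you continue.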
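The check for the asterisk can be scripted. The sketch below assumes the usual `chronyc sources` layout, where the first character of a source line is the mode (M) and the second is the state (S); the function name `chrony_synced` is made up for this example.

```shell
# Hypothetical helper: reads `chronyc sources` output on stdin and succeeds
# if any source line has "*" (the currently selected source) in the S column,
# i.e. as its second character.
chrony_synced() {
    grep -Eq '^[=#^]\*'
}
```

A possible use on each node is `chronyc sources | chrony_synced && echo "time is synchronized"`.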

VII. Deploy the MariaDB database

Controller node:

    yum install mariadb mariadb-server python2-PyMySQL -y

Edit /etc/my.cnf.d/openstack.cnf:

    [mysqld]
    # management-network IP of the controller node
    bind-address = 172.16.209.115
    default-storage-engine = innodb
    innodb_file_per_table
    max_connections = 4096
    collation-server = utf8_general_ci
    character-set-server = utf8

Start the service:

    systemctl enable mariadb.service
    systemctl start mariadb.service
    mysql_secure_installation

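VIII. Deploy MongoDB for the Telemetry service

Controller node:

    yum install mongodb-server mongodb -y

Edit /etc/mongod.conf:

    # management-network IP of the controller node
    bind_ip = 172.16.209.115
    smallfiles = true

Start the service:

    systemctl enable mongod.service
    systemctl start mongod.service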

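IX. Deploy the RabbitMQ message queue

To verify, open the management UI at http://172.16.209.104:15672/ (user: guest, password: guest), substituting your own controller's management IP. The UI is only served once the rabbitmq_management plugin has been enabled.

Controller node:

    yum install rabbitmq-server -y

Start the service:

    systemctl enable rabbitmq-server.service
    systemctl start rabbitmq-server.service

Create a RabbitMQ user and password:

    rabbitmqctl add_user openstack che001

Grant permissions to the new openstack user:

    rabbitmqctl set_permissions openstack ".*" ".*" ".*"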

X. Deploy the memcached cache (it caches tokens for the keystone service)

Controller node:

    yum install memcached python-memcached -y

Start the service:

    systemctl enable memcached.service
    systemctl start memcached.service

PART2: Deploy the identity service, keystone

I. Install and configure the service

1. Create the database and the database user

    mysql -u root -p

    CREATE DATABASE keystone;
    GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'che001';
    GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'che001';
    FLUSH PRIVILEGES;
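The same create-and-grant pattern comes back for glance, nova and neutron, so it may be worth generating the statements instead of retyping them. This is a minimal sketch; the function name `gen_db_sql` is hypothetical, and it simply prints the statements shown above for a given database, user and password.

```shell
# Hypothetical helper: print the CREATE DATABASE / GRANT statements for one
# service database. Arguments: database name, user name, password.
gen_db_sql() {
    db=$1
    user=$2
    pass=$3
    printf "CREATE DATABASE %s;\n" "$db"
    # One grant for local connections, one for connections from any host
    for host in localhost %; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$host" "$pass"
    done
    printf "FLUSH PRIVILEGES;\n"
}
```

The output can be reviewed first and then piped into the client, for example `gen_db_sql keystone keystone che001 | mysql -u root -p`.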

2. Install the packages

    yum install openstack-keystone httpd mod_wsgi -y

3. Edit /etc/keystone/keystone.conf

    [DEFAULT]
    # Better to generate the token with: openssl rand -hex 10
    admin_token = che001

    [database]
    connection = mysql+pymysql://keystone:che001@controller01/keystone

    [token]
    # Token providers: UUID, PKI, PKIZ, or Fernet
    # http://blog.csdn.net/miss_yang_cloud/article/details/49633719
    provider = fernet

4. Sync the changes to the database

    su -s /bin/sh -c "keystone-manage db_sync" keystone

5. Initialize the fernet keys

    keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

6. Configure the Apache service

Edit /etc/httpd/conf/httpd.conf:

    ServerName controller01

Edit /etc/httpd/conf.d/wsgi-keystone.conf and add the following:

    Listen 5000
    Listen 35357

    <VirtualHost *:5000>
        WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}
        WSGIProcessGroup keystone-public
        WSGIScriptAlias / /usr/bin/keystone-wsgi-public
        WSGIApplicationGroup %{GLOBAL}
        WSGIPassAuthorization On
        ErrorLogFormat "%{cu}t %M"
        ErrorLog /var/log/httpd/keystone-error.log
        CustomLog /var/log/httpd/keystone-access.log combined

        <Directory /usr/bin>
            Require all granted
        </Directory>
    </VirtualHost>

    <VirtualHost *:35357>
        WSGIDaemonProcess keystone-admin processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}
        WSGIProcessGroup keystone-admin
        WSGIScriptAlias / /usr/bin/keystone-wsgi-admin
        WSGIApplicationGroup %{GLOBAL}
        WSGIPassAuthorization On
        ErrorLogFormat "%{cu}t %M"
        ErrorLog /var/log/httpd/keystone-error.log
        CustomLog /var/log/httpd/keystone-access.log combined

        <Directory /usr/bin>
            Require all granted
        </Directory>
    </VirtualHost>

7. Start the service:

    systemctl enable httpd.service
    # httpd was already started earlier for the HTTP-based custom yum repository, so it needs a restart here
    systemctl restart httpd.service

II. Create the service entity and API endpoints

1. First set the administrator environment variables; they provide the access needed for the creation steps that follow

    export OS_TOKEN=che001
    export OS_URL=http://controller01:35357/v3
    export OS_IDENTITY_API_VERSION=3

2. With that access, create the identity service entity (the catalog entry)

    openstack service create --name keystone --description "OpenStack Identity" identity

3. For the service entity just created, create the three API endpoints used to reach it

    openstack endpoint create --region RegionOne identity public http://controller01:5000/v3
    openstack endpoint create --region RegionOne identity internal http://controller01:5000/v3
    openstack endpoint create --region RegionOne identity admin http://controller01:35357/v3

III. Create the domain, projects (tenants), users, and roles, and tie the four together

Create a shared domain:

    openstack domain create --description "Default Domain" default

Administrator: admin

    openstack project create --domain default --description "Admin Project" admin
    openstack user create --domain default --password-prompt admin
    openstack role create admin
    openstack role add --project admin --user admin admin

Regular user: demo

    openstack project create --domain default --description "Demo Project" demo
    openstack user create --domain default --password-prompt demo
    openstack role create user
    openstack role add --project demo --user demo user

Create a single shared project, service, for the services deployed later

Explanation: every new service needs four operations in keystone: 1. create a project, 2. create a user, 3. create a role, 4. associate them.

All later services share the one project service and all use the administrator role admin, so for each later service only steps 2 and 4 remain to be done in keystone.

    openstack project create --domain default --description "Service Project" service

IV. Verify:

Edit /etc/keystone/keystone-paste.ini and remove admin_token_auth from the three sections [pipeline:public_api], [pipeline:admin_api], and [pipeline:api_v3].

Then unset the temporary variables and request a token as admin:

    unset OS_TOKEN OS_URL

    openstack --os-auth-url http://controller01:35357/v3 --os-project-domain-name default --os-user-domain-name default --os-project-name admin --os-username admin token issue
    Password:
    | Field      | Value |
    | expires    | 2016-08-17T08:29:18.528637Z |
    | id         | gAAAAABXtBJO-mItMcPR15TSELJVB2iwelryjAGGpaCaWTW3YuEnPpUeg799klo0DaTfhFBq69AiFB2CbFF4CE6qgIKnTauOXhkUkoQBL6iwJkpmwneMo5csTBRLAieomo4z2vvvoXfuxg2FhPUTDEbw-DPgponQO-9FY1IAEJv_QV1qRaCRAY0 |
    | project_id | 9783750c34914c04900b606ddaa62920 |
    | user_id    | 8bc9b323a3b948758697cb17da304035 |

V. Create the client environment scripts

Administrator: admin-openrc

    export OS_PROJECT_DOMAIN_NAME=default
    export OS_USER_DOMAIN_NAME=default
    export OS_PROJECT_NAME=admin
    export OS_USERNAME=admin
    export OS_PASSWORD=che001
    export OS_AUTH_URL=http://controller01:35357/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2

Regular user demo: demo-openrc

    export OS_PROJECT_DOMAIN_NAME=default
    export OS_USER_DOMAIN_NAME=default
    export OS_PROJECT_NAME=demo
    export OS_USERNAME=demo
    export OS_PASSWORD=che001
    export OS_AUTH_URL=http://controller01:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2

Result:

    [root@controller01 ~]# source admin-openrc
    [root@controller01 ~]# openstack token issue
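A common slip in the later parts is running openstack commands in a shell where no openrc file has been sourced. The sketch below is one way to guard against that; `require_os_env` is a made-up name, and the list of variables is simply the credential-related subset of the openrc files above.

```shell
# Hypothetical helper: fail (and name the culprits) if any of the
# credential variables from the openrc files is unset or empty.
require_os_env() {
    missing=0
    for name in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME \
                OS_USER_DOMAIN_NAME OS_PROJECT_DOMAIN_NAME; do
        # Indirect lookup of the variable whose name is stored in $name
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

It could be used as `require_os_env && openstack token issue`.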

PART3: Deploy the image service

I. Install and configure the service

1. Create the database and the database user

    mysql -u root -p

    CREATE DATABASE glance;
    GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'che001';
    GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'che001';
    FLUSH PRIVILEGES;

2. Keystone operations:

As mentioned above, all later services are placed in the single project service; for each one you create a user, give it the admin role, and associate the two.

    . admin-openrc

    openstack user create --domain default --password-prompt glance
    openstack role add --project service --user glance admin

Create the service entity

    openstack service create --name glance --description "OpenStack Image" image

Create the endpoints

    openstack endpoint create --region RegionOne image public http://controller01:9292
    openstack endpoint create --region RegionOne image internal http://controller01:9292
    openstack endpoint create --region RegionOne image admin http://controller01:9292
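For services whose public, internal and admin endpoints share one URL (glance here, nova and neutron later), the three commands can be folded into a loop. This is only a sketch: `create_endpoints` and the `OPENSTACK` override variable are names invented for the example, the latter so the loop can be dry-run with `echo`.

```shell
# Hypothetical helper: create the public, internal and admin endpoints for
# a service type that uses the same URL for all three.
# Arguments: service type, endpoint URL.
create_endpoints() {
    service_type=$1
    url=$2
    for iface in public internal admin; do
        # OPENSTACK defaults to the real client; set OPENSTACK=echo to dry-run
        ${OPENSTACK:-openstack} endpoint create --region RegionOne \
            "$service_type" "$iface" "$url"
    done
}
```

Running `OPENSTACK=echo create_endpoints image http://controller01:9292` first prints the three commands without touching keystone; dropping the override executes them.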

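3. Install the package

    yum install openstack-glance -y

4. Initialize the storage that will hold the images. We use local storage for now, but whatever the backend, it should exist before glance starts: glance looks up its storage through the driver at startup, so storage created afterwards is not recognized until glance is restarted. That is why this step has been moved ahead of the configuration.

Create the directory:

    mkdir -p /var/lib/glance/images/
    chown glance. /var/lib/glance/images/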

5. Edit the configuration:

Edit /etc/glance/glance-api.conf:

    [database]
    # This connection is used to initialize the database schema; without it the schema cannot be created.
    # Leaving [database] out of glance-api does not affect VM creation, but it does affect metadata use;
    # the log then shows: ERROR glance.api.v2.metadef_namespaces
    connection = mysql+pymysql://glance:che001@controller01/glance

    [keystone_authtoken]
    auth_url = http://controller01:5000
    memcached_servers = controller01:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = glance
    password = che001

    [paste_deploy]
    flavor = keystone

    [glance_store]
    stores = file,http
    default_store = file
    filesystem_store_datadir = /var/lib/glance/images/

Edit /etc/glance/glance-registry.conf:

    [database]
    # glance-registry uses this connection to look up image metadata
    connection = mysql+pymysql://glance:che001@controller01/glance

Sync the database (this prints some warnings about "future"; they can be ignored):

    su -s /bin/sh -c "glance-manage db_sync" glance

Start the services:

    systemctl enable openstack-glance-api.service openstack-glance-registry.service
    systemctl start openstack-glance-api.service openstack-glance-registry.service

II. Verify:

    . admin-openrc

    wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

(Local mirror: wget http://172.16.209.100/cirros-0.3.4-x86_64-disk.img)

    openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public
    openstack image list

PART4: Deploy the compute service

I. Controller node configuration

1. Create the databases and the database user

    CREATE DATABASE nova_api;
    CREATE DATABASE nova;
    GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'che001';
    GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'che001';
    GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'che001';
    GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'che001';
    FLUSH PRIVILEGES;

2. Keystone operations

    . admin-openrc

    openstack user create --domain default --password-prompt nova
    openstack role add --project service --user nova admin
    openstack service create --name nova --description "OpenStack Compute" compute
    openstack endpoint create --region RegionOne compute public http://controller01:8774/v2.1/%\(tenant_id\)s
    openstack endpoint create --region RegionOne compute internal http://controller01:8774/v2.1/%\(tenant_id\)s
    openstack endpoint create --region RegionOne compute admin http://controller01:8774/v2.1/%\(tenant_id\)s

3. Install the packages:

    yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy \
        openstack-nova-scheduler -y

4. Edit the configuration:

Edit /etc/nova/nova.conf

    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    rpc_backend = rabbit
    auth_strategy = keystone
    # management IP
    my_ip = 172.16.209.115
    use_neutron = True
    firewall_driver = nova.virt.firewall.NoopFirewallDriver

    [api_database]
    connection = mysql+pymysql://nova:che001@controller01/nova_api

    [database]
    connection = mysql+pymysql://nova:che001@controller01/nova

    [oslo_messaging_rabbit]
    rabbit_host = controller01
    rabbit_userid = openstack
    rabbit_password = che001

    [keystone_authtoken]
    auth_url = http://controller01:5000
    memcached_servers = controller01:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = nova
    password = che001

    [vnc]
    # management IP
    vncserver_listen = 172.16.209.115
    # management IP
    vncserver_proxyclient_address = 172.16.209.115

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

5. Sync the databases (this prints some warnings about "future"; they can be ignored):

    su -s /bin/sh -c "nova-manage api_db sync" nova
    su -s /bin/sh -c "nova-manage db sync" nova

6. Start the services

    systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
    systemctl start openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

II. Compute node configuration

1. Install the packages:

    yum install openstack-nova-compute libvirt-daemon-lxc -y

2. Edit the configuration:

Edit /etc/nova/nova.conf

    [DEFAULT]
    rpc_backend = rabbit
    auth_strategy = keystone
    # management-network IP of the compute node
    my_ip = 172.16.209.117
    use_neutron = True
    firewall_driver = nova.virt.firewall.NoopFirewallDriver

    [oslo_messaging_rabbit]
    rabbit_host = controller01
    rabbit_userid = openstack
    rabbit_password = che001

    [vnc]
    enabled = True
    vncserver_listen = 0.0.0.0
    # management-network IP of the compute node
    vncserver_proxyclient_address = 172.16.209.117
    # management-network IP of the controller node
    novncproxy_base_url = http://172.16.209.115:6080/vnc_auto.html

    [glance]
    api_servers = http://controller01:9292

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

3. If you are deploying nova on a machine without hardware virtualization support, confirm that the following command prints 0:

    egrep -c '(vmx|svm)' /proc/cpuinfo

If it does, edit /etc/nova/nova.conf:

    [libvirt]
    virt_type = qemu
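The decision in step 3 can be expressed as a small function. This is a sketch under the assumption that the only two candidates are kvm (nova's libvirt default) and qemu; the name `pick_virt_type` is hypothetical, and the optional argument exists only so the function can be pointed at a copy of cpuinfo.

```shell
# Hypothetical helper: print the virt_type to use on this host.
# Optional argument: a cpuinfo file (defaults to /proc/cpuinfo).
pick_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # vmx = Intel VT-x, svm = AMD-V; either flag means hardware virtualization
    if grep -Eq '(vmx|svm)' "$cpuinfo"; then
        echo kvm
    else
        echo qemu
    fi
}
```

If `pick_virt_type` prints qemu, add the [libvirt] setting shown above; if it prints kvm, the setting can be left at its default.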

4. Start the services

    systemctl enable libvirtd.service openstack-nova-compute.service
    systemctl start libvirtd.service openstack-nova-compute.service

III. Verify

Controller node

    [root@controller01 ~]# source admin-openrc
    [root@controller01 ~]# openstack compute service list
    +----+------------------+--------------+----------+---------+-------+----------------------------+
    | Id | Binary           | Host         | Zone     | Status  | State | Updated At                 |
    +----+------------------+--------------+----------+---------+-------+----------------------------+
    | 1  | nova-consoleauth | controller01 | internal | enabled | up    | 2016-08-17T08:51:37.000000 |
    | 2  | nova-conductor   | controller01 | internal | enabled | up    | 2016-08-17T08:51:29.000000 |
    | 8  | nova-scheduler   | controller01 | internal | enabled | up    | 2016-08-17T08:51:38.000000 |
    | 12 | nova-compute     | compute01    | nova     | enabled | up    | 2016-08-17T08:51:30.000000 |
    +----+------------------+--------------+----------+---------+-------+----------------------------+

PART5: Deploy the networking service

I. Controller node configuration

1. Create the database and the database user

    CREATE DATABASE neutron;
    GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'che001';
    GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'che001';
    FLUSH PRIVILEGES;

2. Keystone operations

    . admin-openrc

    openstack user create --domain default --password-prompt neutron
    openstack role add --project service --user neutron admin
    openstack service create --name neutron --description "OpenStack Networking" network
    openstack endpoint create --region RegionOne network public http://controller01:9696
    openstack endpoint create --region RegionOne network internal http://controller01:9696
    openstack endpoint create --region RegionOne network admin http://controller01:9696

3. Install the packages

    yum install openstack-neutron openstack-neutron-ml2 python-neutronclient which -y

4. Configure the server component

Edit /etc/neutron/neutron.conf and set the following:

    [DEFAULT]
    core_plugin = ml2
    service_plugins = router
    # enable overlapping IP addresses
    allow_overlapping_ips = True
    rpc_backend = rabbit
    #auth_strategy = keystone
    notify_nova_on_port_status_changes = True
    notify_nova_on_port_data_changes = True

    [oslo_messaging_rabbit]
    rabbit_host = controller01
    rabbit_userid = openstack
    rabbit_password = che001

    [database]
    connection = mysql+pymysql://neutron:che001@controller01/neutron

    [keystone_authtoken]
    auth_url = http://controller01:5000
    memcached_servers = controller01:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = che001

    [nova]
    auth_url = http://controller01:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = nova
    password = che001

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

Edit /etc/neutron/plugins/ml2/ml2_conf.ini:

    [ml2]
    type_drivers = flat,vlan,vxlan,gre
    tenant_network_types = vxlan
    mechanism_drivers = openvswitch,l2population
    extension_drivers = port_security

    [ml2_type_flat]
    flat_networks = provider

    [ml2_type_vxlan]
    vni_ranges = 1:1000

    [securitygroup]
    enable_ipset = True

Edit /etc/nova/nova.conf:

    [neutron]
    url = http://controller01:9696
    auth_url = http://controller01:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = che001
    service_metadata_proxy = True
    # must match metadata_proxy_shared_secret in metadata_agent.ini on the network node
    metadata_proxy_shared_secret = che001

5. Create the symlink

    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

6. Sync the database (this prints some warnings about "future"; they can be ignored):

    su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

7. Restart the nova service

    systemctl restart openstack-nova-api.service

8. Start the neutron service

    systemctl enable neutron-server.service
    systemctl start neutron-server.service

II. Network node configuration

1. Edit /etc/sysctl.conf

    net.ipv4.ip_forward=1
    net.ipv4.conf.all.rp_filter=0
    net.ipv4.conf.default.rp_filter=0

2. Run the following command so the settings take effect immediately

    sysctl -p

3. Install the packages

    yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch -y

4. Configure the components

Edit /etc/neutron/neutron.conf:

    [DEFAULT]
    core_plugin = ml2
    service_plugins = router
    allow_overlapping_ips = True
    rpc_backend = rabbit
    auth_strategy = keystone

    [database]
    connection = mysql+pymysql://neutron:che001@controller01/neutron

    [oslo_messaging_rabbit]
    rabbit_host = controller01
    rabbit_userid = openstack
    rabbit_password = che001

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

6. Edit /etc/neutron/plugins/ml2/openvswitch_agent.ini:

    [ovs]
    # data-network IP of the network node (its address on 1.1.1.0/24)
    local_ip = <network node data-network IP>

    [agent]
    tunnel_types=gre,vxlan
    #l2_population=True
    prevent_arp_spoofing=True

7. Configure the L3 agent. Edit /etc/neutron/l3_agent.ini:

    [DEFAULT]
    interface_driver=neutron.agent.linux.interface.OVSInterfaceDriver
    external_network_bridge=br-ex

8. Configure the DHCP agent. Edit /etc/neutron/dhcp_agent.ini:

    [DEFAULT]
    interface_driver=neutron.agent.linux.interface.OVSInterfaceDriver
    dhcp_driver=neutron.agent.linux.dhcp.Dnsmasq
    enable_isolated_metadata=True

9. Configure the metadata agent. Edit /etc/neutron/metadata_agent.ini:

    [DEFAULT]
    nova_metadata_ip=controller01
    metadata_proxy_shared_secret=che001

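10. Start the services (start them first, then create the br-ex bridge)

Network node:

    systemctl start neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service
    systemctl enable neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

11. Create the bridge

Note: if NICs are scarce and you want to use the network node's management NIC as the physical port bound to br-ex, remove the IP from that NIC and create a configuration file for br-ex that takes over the original management IP:

    [root@network01 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0
    DEVICE=eth0
    TYPE=Ethernet
    ONBOOT="yes"
    BOOTPROTO="none"
    NM_CONTROLLED=no

    [root@network01 ~]# cat /etc/sysconfig/network-scripts/ifcfg-br-ex
    DEVICE=br-ex
    TYPE=Ethernet
    ONBOOT="yes"
    BOOTPROTO="none"
    #HWADDR=bc:ee:7b:78:7b:a7
    IPADDR=172.16.209.10
    GATEWAY=172.16.209.1
    NETMASK=255.255.255.0
    DNS1=202.106.0.20
    DNS2=8.8.8.8
    # be sure to include the next line, otherwise the interface may fail to come up
    NM_CONTROLLED=no

    ovs-vsctl add-br br-ex
    # add the physical port to br-ex before restarting the network service
    # (eth2 in this example; use eth0 if you are reusing the management NIC as described above)
    ovs-vsctl add-port br-ex eth2

    # when restarting the network, make sure the physical NIC has no IP or is simply down,
    # and that NM_CONTROLLED=no is set; otherwise the service will not start
    systemctl restart network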

III. Compute node configuration

1. Edit /etc/sysctl.conf

    net.ipv4.conf.all.rp_filter=0
    net.ipv4.conf.default.rp_filter=0

2. Apply the settings

    sysctl -p

3. Install the packages

    yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch -y

4. Edit /etc/neutron/neutron.conf

    [DEFAULT]
    rpc_backend = rabbit
    #auth_strategy = keystone

    [oslo_messaging_rabbit]
    rabbit_host = controller01
    rabbit_userid = openstack
    rabbit_password = che001

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

5. Edit /etc/neutron/plugins/ml2/openvswitch_agent.ini

    [ovs]
    # data-network IP of the compute node
    local_ip = 1.1.1.117
    #bridge_mappings = vlan:br-vlan

    [agent]
    tunnel_types = gre,vxlan
    # With l2_population enabled, the agent receives ARP information about newly created VMs
    # from the SDN controller (usually on the controller node). The ARP entries are pushed to
    # every end device (compute node), which removes a large share of the initial ARP broadcast
    # traffic ("initial" because even without l2pop a VM caches the answer locally after its
    # first ARP broadcast for another VM). See
    # https://assafmuller.com/2014/05/21/ovs-arp-responder-theory-and-practice/
    l2_population = True
    # arp_responder turns on ARP responses in br-tun, making br-tun an ARP proxy: ARP requests
    # from this node for other VMs (not for physical hosts) are answered locally by br-tun
    arp_responder = True
    prevent_arp_spoofing = True

    [securitygroup]
    firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver
    enable_security_group = True

7. Edit /etc/nova/nova.conf

    [neutron]
    url = http://controller01:9696
    auth_url = http://controller01:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = che001

8. Start the services

    systemctl enable neutron-openvswitch-agent.service
    systemctl start neutron-openvswitch-agent.service
    systemctl restart openstack-nova-compute.service

PART6: Deploy the dashboard

On the controller node

1. Install the package

    yum install openstack-dashboard -y

2. Configure /etc/openstack-dashboard/local_settings

    OPENSTACK_HOST = "controller01"

    ALLOWED_HOSTS = ['*', ]

    SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

    CACHES = {
        'default': {
            'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
            'LOCATION': 'controller01:11211',
        }
    }

    # Note: this must be v3, not v3.0
    OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

    OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

    OPENSTACK_API_VERSIONS = {
        "identity": 3,
        "image": 2,
        "volume": 2,
    }

    OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "default"

    OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

    TIME_ZONE = "UTC"

3.启动服务

systemctl enable httpd.service memcached.service systemctl restart httpd.service memcached.service

4.验证;

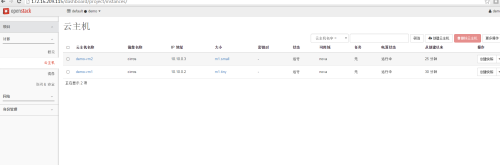

http://172.16.209.115/dashboard

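Summary:

- Only the API layer talks to keystone, so do not scatter keystone settings everywhere.
- When an instance is created, nova-compute is the one that calls the other APIs, so do not configure those calls on the controller node.
- ml2 is neutron's core plugin and only needs to be configured on the controller node.
- The network node only needs the relevant agents configured.
- Besides accepting requests, each component's API does much more, for example validating requests. The controller's nova.conf needs neutron's API and credentials because nova boot has to validate the network the user submitted. The controller's neutron.conf needs nova's API and credentials because deleting a network port requires asking nova-api whether an instance is still using it. The compute node's nova.conf needs the neutron section because nova-compute sends requests to neutron-server to create ports. "Port" here means a port on the switch.
- If you do not see why, or cannot follow what I am saying, study how the OpenStack components communicate and how an instance is created.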

网路故障排查:

网络节点:

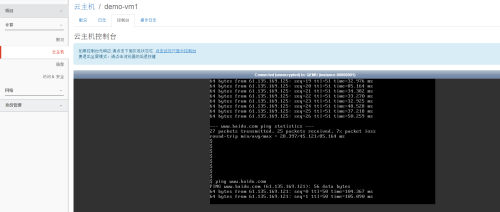

[root@network02 ~]# ip netns show qdhcp-e63ab886-0835-450f-9d88-7ea781636eb8 qdhcp-b25baebb-0a54-4f59-82f3-88374387b1ec qrouter-ff2ddb48-86f7-4b49-8bf4-0335e8dbaa83 [root@network02 ~]# ip netns exec qrouter-ff2ddb48-86f7-4b49-8bf4-0335e8dbaa83 bash [root@network02 ~]# ping -c2 www.baidu.com PING www.a.shifen.com (61.135.169.125) 56(84) bytes of data. 64 bytes from 61.135.169.125: icmp_seq=1 ttl=52 time=33.5 ms 64 bytes from 61.135.169.125: icmp_seq=2 ttl=52 time=25.9 ms

If the ping fails, exit the namespace and rebuild the bridges:

    ovs-vsctl del-br br-ex
    ovs-vsctl del-br br-int
    ovs-vsctl del-br br-tun
    ovs-vsctl add-br br-int
    ovs-vsctl add-br br-ex
    ovs-vsctl add-port br-ex eth0

    systemctl restart neutron-openvswitch-agent.service neutron-l3-agent.service \
        neutron-dhcp-agent.service neutron-metadata-agent.service
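The namespace check at the start of this troubleshooting section can be scripted so that every router namespace is tested in one go. The sketch below only does the filtering step; `router_namespaces` is a hypothetical name, and it assumes router namespaces are named qrouter-<uuid> as in the listing above.

```shell
# Hypothetical helper: read `ip netns show` output on stdin and print only
# the router namespaces.
router_namespaces() {
    # Keep only the first field: newer iproute2 appends "(id N)" to each name
    awk '$1 ~ /^qrouter-/ { print $1 }'
}
```

One way to use it is `ip netns show | router_namespaces | while read -r ns; do ip netns exec "$ns" ping -c2 www.baidu.com; done`.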
