首页 > 代码库 > python之网络爬虫

python之网络爬虫

一、演绎自已的北爱

踏上北漂的航班,开始演奏了我自已的北京爱情故事

二、爬虫1

1、网络爬虫的思路

首先:指定一个url,然后打开这个url地址,读其中的内容。

其次:从读取的内容中过滤关键字;这一步是关键,可以通过查看源代码的方式获取。

最后:下载获取的html的url地址,或者图片的url地址保存到本地

2、针对指定的url来网络爬虫

分析:

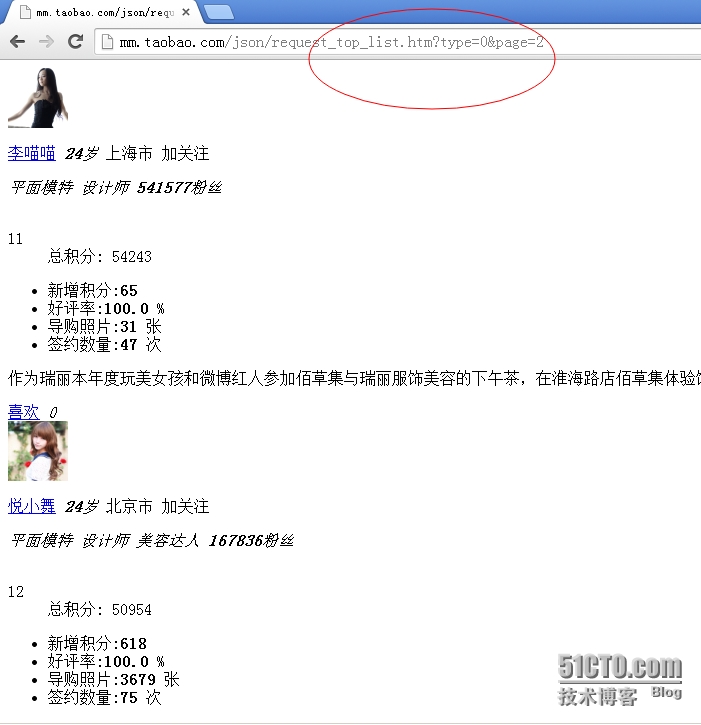

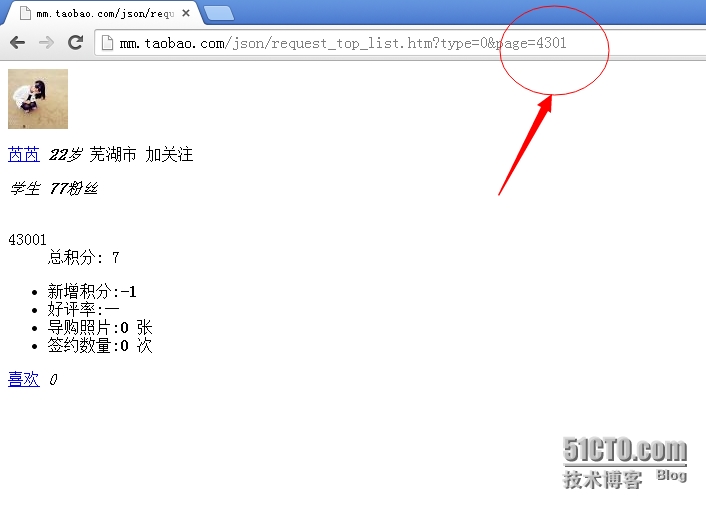

第一步:大约共有4300个下一页。

第二步:一个页面上有10个个人头像

第三步:一个头像内大约有100张左右的个人图片

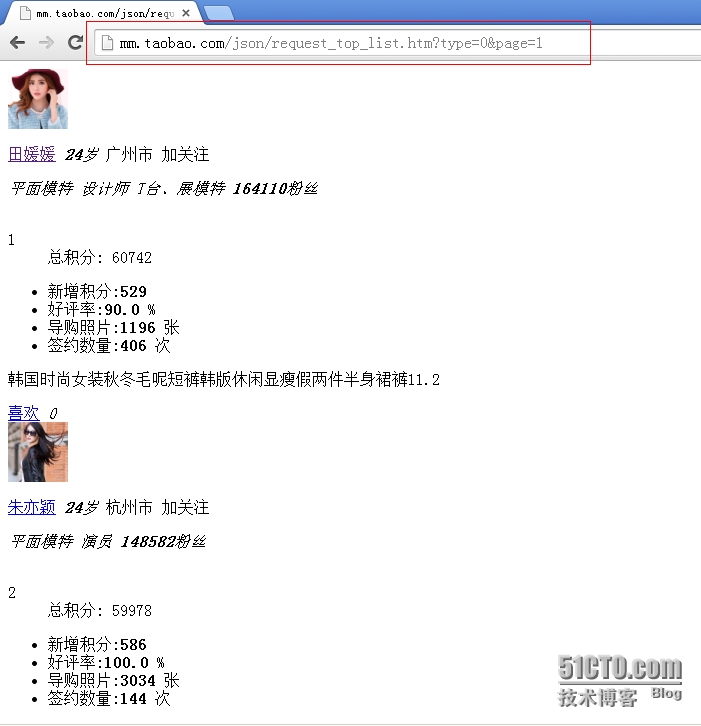

指定的淘宝mm的url为:http://mm.taobao.com/json/request_top_list.htm?type=0&page=1

这个页面默认是没有下一页按钮的,我们可以通过修改其url地址来进行查看下一个页面

最后一页的url地址和页面展示如下图所示:

点击任意一个头像来进入个人的主页,如下图

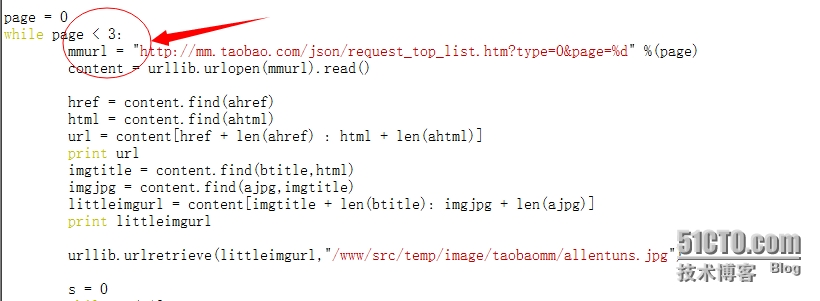

3、定制的脚本

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 | #!/usr/bin/env python#coding:utf-8#Author:Allentuns#Email:zhengyansheng@hytyi.comimport urllibimport osimport sysimport timeahref = ‘<a href="http://www.mamicode.com/‘ahrefs = ‘<a href="http://www.mamicode.com/h‘ahtml = ".htm"atitle = "<img style"ajpg = ".jpg"btitle = ‘<img src="http://www.mamicode.com/‘page = 0while page < 4300: #这个地方可以修改;最大值为4300,我测试的时候写的是3. mmurl = "http://mm.taobao.com/json/request_top_list.htm?type=0&page=%d" %(page) content = urllib.urlopen(mmurl).read() href = content.find(ahref) html = content.find(ahtml) url = content[href + len(ahref) : html + len(ahtml)] print url imgtitle = content.find(btitle,html) imgjpg = content.find(ajpg,imgtitle) littleimgurl = content[imgtitle + len(btitle): imgjpg + len(ajpg)] print littleimgurl urllib.urlretrieve(littleimgurl,"/www/src/temp/image/taobaomm/allentuns.jpg") s = 0 while s < 18: href = content.find(ahrefs,html) html = content.find(ahtml,href) url = content[href + len(ahref): html + len(ajpg)] print s,url imgtitle = content.find(btitle,html) imgjpg = content.find(ajpg,imgtitle) littleimgurl = content[imgtitle : imgjpg + len(ajpg)] littlesrc = littleimgurl.find("src") tureimgurl = littleimgurl[littlesrc + 5:] print s,tureimgurl if url.find("photo") == -1: content01 = urllib.urlopen(url).read() imgtitle = content01.find(atitle) imgjpg = content01.find(ajpg,imgtitle) littleimgurl = content01[imgtitle : imgjpg + len(ajpg)] littlesrc = littleimgurl.find("src") tureimgurl = littleimgurl[littlesrc + 5:] print tureimgurl imgcount = content01.count(atitle) i = 20 try: while i < imgcount: content01 = urllib.urlopen(url).read() imgtitle = content01.find(atitle,imgjpg) imgjpg = content01.find(ajpg,imgtitle) littleimgurl = content01[imgtitle : imgjpg + len(ajpg)] littlesrc = littleimgurl.find("src") tureimgurl = littleimgurl[littlesrc + 5:] print i,tureimgurl time.sleep(1) if tureimgurl.count("<") == 0: imgname = tureimgurl[tureimgurl.index("T"):] urllib.urlretrieve(tureimgurl,"/www/src/temp/image/taobaomm/%s-%s" %(page,imgname)) else: pass i += 1 except IOError: print ‘/nWhy did you do an EOF on me?‘ break except: print ‘/nSome error/exception occurred.‘ s += 1 else: print "---------------{< 20;1 page hava 10 htm and pic }-------------------------}" page = page + 1 print "****************%s page*******************************" %(page)else: print "Download Finshed." |

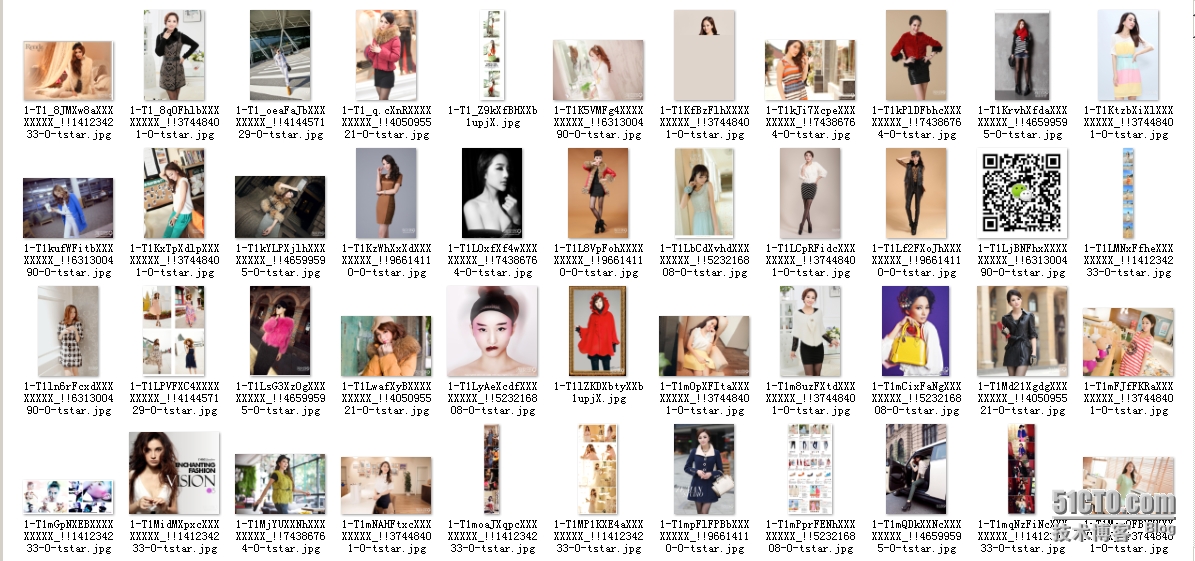

4、图片展示(部分图片)

5、查看下载的图片数量

二、爬虫2

1、首先来分析url

第一步:总共有7个页面;

第二步:每个页面有20篇文章

第三步:查看后总共有317篇文章

2、python脚本

脚本的功能:通过给定的url来将这片博客里面的所有文章下载到本地

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 | #!/usr/bin/env python#coding: utf-8import urllibimport timelist00 = []i = j = 0page = 1while page < 8: str = "http://blog.sina.com.cn/s/articlelist_1191258123_0_%d.html" %(page) content = urllib.urlopen(str).read() title = content.find(r"<a title") href = content.find(r"href="http://www.mamicode.com/,title) html = content.find(r".html",href) url = content[href + 6:html + 5] urlfilename = url[-26:] list00.append(url) print i, url while title != -1 and href != -1 and html != -1 and i < 350: title = content.find(r"<a title",html) href = content.find(r"href="http://www.mamicode.com/,title) html = content.find(r".html",href) url = content[href + 6:html + 5] urlfilename = url[-26:] list00.append(url) i = i + 1 print i, url else: print "Link address Finshed." print "This is %s page" %(page) page = page + 1else: print "spage=",list00[50] print list00[:51] print list00.count("") print "All links address Finshed."x = list00.count(‘‘)a = 0while a < x: y1 = list00.index(‘‘) list00.pop(y1) print a a = a + 1print list00.count(‘‘)listcount = len(list00)while j < listcount: content = urllib.urlopen(list00[j]).read() open(r"/tmp/hanhan/"+list00[j][-26:],‘a+‘).write(content) print "%2s is finshed." %(j) j = j + 1 #time.sleep(1)else: print "Write to file End." |

3、下载文章后的截图

4、从linux下载到windows本地,然后打开查看;如下截图

4、从linux下载到windows本地,然后打开查看;如下截图

python之网络爬虫

声明:以上内容来自用户投稿及互联网公开渠道收集整理发布,本网站不拥有所有权,未作人工编辑处理,也不承担相关法律责任,若内容有误或涉及侵权可进行投诉: 投诉/举报 工作人员会在5个工作日内联系你,一经查实,本站将立刻删除涉嫌侵权内容。