Cloud Computing: OpenStack in Practice (Part 2), with Pitfalls and Fixes

3.6 Deploying the Nova Controller Node

Create the nova user, add it to the service project, and grant it the admin role

[root@node1 ~]# source admin-openrc.sh

[root@node1 ~]# openstack user create --domain default --password=nova nova

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 4ab17ba8c8804d5f888acb243675388b |

| name | nova |

+-----------+----------------------------------+

[root@node1 ~]# openstack role add --project service --user nova admin

Edit the Nova configuration file. The resulting settings are shown below and cover:

1. Database

2. Keystone

3. RabbitMQ

4. Networking

5. Service registration

[root@node1 ~]# grep -n "^[a-Z]" /etc/nova/nova.conf

1 [DEFAULT]

198 my_ip=192.168.8.150 // variable, referenced elsewhere as $my_ip

344 enabled_apis=osapi_compute,metadata // disables the EC2 API

506 auth_strategy=keystone // authenticate through Keystone; note that this option belongs to the [DEFAULT] section

838 network_api_class=nova.network.neutronv2.api.API // use Neutron for networking; the dots reflect the module's directory structure

930 linuxnet_interface_driver=nova.network.linux_net.NeutronLinuxBridgeInterfaceDriver // the class was formerly named LinuxBridgeInterfaceDriver and is now NeutronLinuxBridgeInterfaceDriver

1064 security_group_api=neutron // security groups are handled by Neutron

1240 firewall_driver=nova.virt.firewall.NoopFirewallDriver // disables Nova's own firewall

1277 debug=true

1283 verbose=true

1422 rpc_backend=rabbit // use the RabbitMQ message queue

1719 [database]

1742 connection=mysql://nova:nova@192.168.8.150/nova

1936 [glance]

1943 host=$my_ip // address of the Glance host

2120 [keystone_authtoken]

2125 auth_uri = http://192.168.8.150:5000

2126 auth_url = http://192.168.8.150:35357

2127 auth_plugin = password

2128 project_domain_id = default

2129 user_domain_id = default

2130 project_name = service // use the service project

2131 username = nova

2132 password = nova

2737 [oslo_concurrency]

2752 lock_path=/var/lib/nova/tmp // lock file path

2870 [oslo_messaging_rabbit]

2924 rabbit_host=192.168.8.150 // RabbitMQ host

2928 rabbit_port=5672 // RabbitMQ port

2940 rabbit_userid=openstack // RabbitMQ user

2944 rabbit_password=openstack // RabbitMQ password

3302 [vnc]

3320 vncserver_listen=$my_ip // VNC listen address

3325 vncserver_proxyclient_address=$my_ip // proxy client address

Configuration on this machine:

[root@node1 ~]# grep -n "^[a-Z]" /etc/nova/nova.conf

198:my_ip=192.168.8.150

344:enabled_apis=osapi_compute,metadata

506:auth_strategy=keystone

838:network_api_class=nova.network.neutronv2.api.API

930:linuxnet_interface_driver=nova.network.linux_net.NeutronLinuxBridgeInterfaceDriver

1064:security_group_api=neutron

1240:firewall_driver=nova.virt.firewall.NoopFirewallDriver

1277:debug=true

1283:verbose=true

1422:rpc_backend=rabbit

1742:connection=mysql://nova:nova@192.168.8.150/nova

1943:host=$my_ip

2125:auth_uri = http://192.168.8.150:5000

2126:auth_url = http://192.168.8.150:35357

2127:auth_plugin = password

2128:project_domain_id = default

2129:user_domain_id = default

2130:project_name = service

2131:username = nova

2132:password = nova

2752:lock_path=/var/lib/nova/tmp

2933:rabbit_host=192.168.8.150

2937:rabbit_port=5672

2949:rabbit_userid=openstack

2953:rabbit_password=openstack

3320:vncserver_listen=$my_ip

3325:vncserver_proxyclient_address=$my_ip
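The `grep -n "^[a-Z]"` filter used above drops the section headers (they start with `[`), and the `a-Z` range depends on the locale's collation order. A minimal sketch of an alternative, assuming a hypothetical helper name `show_conf`, that lists the active settings together with the sections they belong to:

```shell
# show_conf FILE: print every section header and every active (uncommented)
# setting of an INI-style file, prefixed with its line number.
# show_conf is a hypothetical helper name, not part of OpenStack.
show_conf() {
    # Match lines that start with "[" (section headers) or an ASCII letter (settings).
    LC_ALL=C grep -nE '^(\[|[A-Za-z])' "$1"
}

# Usage (path taken from the text above):
# show_conf /etc/nova/nova.conf
```

Seeing the headers makes it easier to confirm that an option such as auth_strategy really sits under [DEFAULT] and not under another section.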

Sync the database

[root@node1 ~]# su -s /bin/sh -c "nova-manage db sync" nova

/usr/lib64/python2.7/site-packages/sqlalchemy/engine/default.py:450: Warning: Duplicate index 'block_device_mapping_instance_uuid_virtual_name_device_name_idx' defined on the table 'nova.block_device_mapping'. This is deprecated and will be disallowed in a future release.

cursor.execute(statement, parameters)

/usr/lib64/python2.7/site-packages/sqlalchemy/engine/default.py:450: Warning: Duplicate index 'uniq_instances0uuid' defined on the table 'nova.instances'. This is deprecated and will be disallowed in a future release.

cursor.execute(statement, parameters)

[root@node1 ~]# mysql -uroot -p123456

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 19

Server version: 5.5.50-MariaDB MariaDB Server

Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [nova]> use nova;

Database changed

MariaDB [nova]> show tables;

+--------------------------------------------+

| Tables_in_nova |

+--------------------------------------------+

| agent_builds |

| aggregate_hosts |

| aggregate_metadata |

| aggregates |

| block_device_mapping |

| bw_usage_cache |

| cells |

| certificates |

| compute_nodes |

| console_pools |

| consoles |

| dns_domains |

| fixed_ips |

| floating_ips |

| instance_actions |

| instance_actions_events |

| instance_extra |

| instance_faults |

| instance_group_member |

| instance_group_policy |

| instance_groups |

| instance_id_mappings |

| instance_info_caches |

| instance_metadata |

| instance_system_metadata |

| instance_type_extra_specs |

| instance_type_projects |

| instance_types |

| instances |

| key_pairs |

| migrate_version |

| migrations |

| networks |

| pci_devices |

| project_user_quotas |

| provider_fw_rules |

| quota_classes |

| quota_usages |

| quotas |

| reservations |

| s3_images |

| security_group_default_rules |

| security_group_instance_association |

| security_group_rules |

| security_groups |

| services |

| shadow_agent_builds |

| shadow_aggregate_hosts |

| shadow_aggregate_metadata |

| shadow_aggregates |

| shadow_block_device_mapping |

| shadow_bw_usage_cache |

| shadow_cells |

| shadow_certificates |

| shadow_compute_nodes |

| shadow_console_pools |

| shadow_consoles |

| shadow_dns_domains |

| shadow_fixed_ips |

| shadow_floating_ips |

| shadow_instance_actions |

| shadow_instance_actions_events |

| shadow_instance_extra |

| shadow_instance_faults |

| shadow_instance_group_member |

| shadow_instance_group_policy |

| shadow_instance_groups |

| shadow_instance_id_mappings |

| shadow_instance_info_caches |

| shadow_instance_metadata |

| shadow_instance_system_metadata |

| shadow_instance_type_extra_specs |

| shadow_instance_type_projects |

| shadow_instance_types |

| shadow_instances |

| shadow_key_pairs |

| shadow_migrate_version |

| shadow_migrations |

| shadow_networks |

| shadow_pci_devices |

| shadow_project_user_quotas |

| shadow_provider_fw_rules |

| shadow_quota_classes |

| shadow_quota_usages |

| shadow_quotas |

| shadow_reservations |

| shadow_s3_images |

| shadow_security_group_default_rules |

| shadow_security_group_instance_association |

| shadow_security_group_rules |

| shadow_security_groups |

| shadow_services |

| shadow_snapshot_id_mappings |

| shadow_snapshots |

| shadow_task_log |

| shadow_virtual_interfaces |

| shadow_volume_id_mappings |

| shadow_volume_usage_cache |

| snapshot_id_mappings |

| snapshots |

| tags |

| task_log |

| virtual_interfaces |

| volume_id_mappings |

| volume_usage_cache |

+--------------------------------------------+

105 rows in set (0.01 sec)

Enable and start all Nova services

[root@node1 ~]# systemctl enable openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-api.service to /usr/lib/systemd/system/openstack-nova-api.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-cert.service to /usr/lib/systemd/system/openstack-nova-cert.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-consoleauth.service to /usr/lib/systemd/system/openstack-nova-consoleauth.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-scheduler.service to /usr/lib/systemd/system/openstack-nova-scheduler.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-conductor.service to /usr/lib/systemd/system/openstack-nova-conductor.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-novncproxy.service to /usr/lib/systemd/system/openstack-nova-novncproxy.service.

[root@node1 ~]# systemctl start openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
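The enable and start commands above repeat the same six unit names. A small sketch, assuming a hypothetical helper name `nova_controller_units`, that generates the list once so both commands stay in sync:

```shell
# nova_controller_units: print the systemd unit names used above, one per line.
# The function name is hypothetical; the service list is the one from the text.
nova_controller_units() {
    for svc in api cert consoleauth scheduler conductor novncproxy; do
        echo "openstack-nova-${svc}.service"
    done
}

# Usage (run as root on the controller node):
# systemctl enable $(nova_controller_units)
# systemctl start  $(nova_controller_units)
```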

Register Nova in Keystone, then check that the Nova services on the controller node are configured correctly

[root@node1 ~]# openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | c80323b6a0b743bf95b40ebd031d407d |

| name | nova |

| type | compute |

+-------------+----------------------------------+

[root@node1 ~]# openstack endpoint create --region RegionOne compute public http://192.168.8.150:8774/v2/%\(tenant_id\)s

+--------------+--------------------------------------------+

| Field | Value |

+--------------+--------------------------------------------+

| enabled | True |

| id | ea10c9cdf2064825bc6f634bb84f2030 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | c80323b6a0b743bf95b40ebd031d407d |

| service_name | nova |

| service_type | compute |

| url | http://192.168.8.150:8774/v2/%(tenant_id)s |

+--------------+--------------------------------------------+

[root@node1 ~]# openstack endpoint create --region RegionOne compute internal http://192.168.8.150:8774/v2/%\(tenant_id\)s

+--------------+--------------------------------------------+

| Field | Value |

+--------------+--------------------------------------------+

| enabled | True |

| id | 86c1a8c2b7774239b4cfaa659734c155 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | c80323b6a0b743bf95b40ebd031d407d |

| service_name | nova |

| service_type | compute |

| url | http://192.168.8.150:8774/v2/%(tenant_id)s |

+--------------+--------------------------------------------+

[root@node1 ~]# openstack endpoint create --region RegionOne compute admin http://192.168.8.150:8774/v2/%\(tenant_id\)s

+--------------+--------------------------------------------+

| Field | Value |

+--------------+--------------------------------------------+

| enabled | True |

| id | 0d41fab3fa474a9ba555290396c3dd52 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | c80323b6a0b743bf95b40ebd031d407d |

| service_name | nova |

| service_type | compute |

| url | http://192.168.8.150:8774/v2/%(tenant_id)s |

+--------------+--------------------------------------------+
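The three endpoint commands above differ only in the interface name. The sketch below, assuming a hypothetical helper name `endpoint_cmds`, prints the commands without running them, so they can be reviewed first:

```shell
# endpoint_cmds TYPE URL: print (do not run) the three endpoint-create commands
# for the public, internal and admin interfaces. Hypothetical helper name.
endpoint_cmds() {
    for iface in public internal admin; do
        # Single quotes around the URL keep %(tenant_id)s literal for the shell.
        printf "openstack endpoint create --region RegionOne %s %s '%s'\n" "$1" "$iface" "$2"
    done
}

# Usage: review the output, then pipe it to sh to execute.
# endpoint_cmds compute 'http://192.168.8.150:8774/v2/%(tenant_id)s'
```

Quoting the URL in single quotes is an alternative to the backslash escapes around the parentheses used in the commands above.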

[root@node1 ~]# openstack host list

+-----------+-------------+----------+

| Host Name | Service | Zone |

+-----------+-------------+----------+

| node1 | conductor | internal | // conductor is used to access the database

| node1 | consoleauth | internal | // consoleauth handles console authentication

| node1 | scheduler | internal | // scheduler handles scheduling

| node1 | cert | internal | // cert is used for identity verification

+-----------+-------------+----------+

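3.7 Deploying the Nova Compute Node

Diagram: nova-compute

nova-compute normally runs on compute nodes. It receives requests through the message queue and manages the VM lifecycle.

nova-compute manages KVM through Libvirt, Xen through XenAPI, and so on.

Configure time synchronization

Edit the chrony configuration file:

[root@node2 ~]# vi /etc/chrony.conf

server 192.168.8.150 iburst (keep only this one server line, so that time comes from the controller node)

Enable chrony at boot and start it: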

[root@node2 ~]# systemctl enable chronyd.service

[root@node2 ~]# systemctl start chronyd.service

Set the time zone on CentOS 7

[root@node2 ~]# timedatectl set-timezone Asia/Shanghai

[root@node2 ~]# timedatectl status

Local time: Mon 2016-09-26 21:29:02 CST

Universal time: Mon 2016-09-26 13:29:02 UTC

RTC time: Mon 2016-09-26 13:29:03

Time zone: Asia/Shanghai (CST, +0800)

NTP enabled: yes

NTP synchronized: yes

RTC in local TZ: no

DST active: n/a

[root@node2 ~]# date

Mon Sep 26 21:30:38 CST 2016
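The synchronization state shown above can also be checked in a script. A minimal sketch, assuming a hypothetical helper name `ntp_synced` and the CentOS 7 `timedatectl status` output format shown above (other systemd versions may label this line differently):

```shell
# ntp_synced: read `timedatectl status` output on stdin and succeed only if
# it reports "NTP synchronized: yes". Hypothetical helper name.
ntp_synced() {
    grep -Eq '^[[:space:]]*NTP synchronized:[[:space:]]*yes[[:space:]]*$'
}

# Usage on the compute node:
# timedatectl status | ntp_synced && echo "clock is synchronized"
```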

Begin deploying the compute node:

For the compute node's configuration file, start from the controller node's file and then adjust it

[root@node1 ~]# scp /etc/nova/nova.conf 192.168.8.155:/etc/nova/

The authenticity of host '192.168.8.155 (192.168.8.155)' can't be established.

ECDSA key fingerprint is 48:6c:b4:31:5b:52:e7:d6:1f:e5:c6:bf:43:ca:c2:9b.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.8.155' (ECDSA) to the list of known hosts.

root@192.168.8.155's password:

nova.conf 100% 116KB 116.1KB/s 00:00 (the scp is run on the controller node)
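After copying, node-specific values such as my_ip have to be changed on the compute node. A minimal sketch, assuming a hypothetical helper name `set_conf_value` and a key that appears only once in the file (as my_ip does in the listings here); editing the file by hand with vi works just as well:

```shell
# set_conf_value FILE KEY VALUE: rewrite an existing "KEY=..." line in place.
# Hypothetical helper; it only touches active lines that start with KEY
# followed by "=", and assumes VALUE contains no "|", "&" or backslash.
set_conf_value() {
    tmp=$(mktemp) || return 1
    # Write through a temporary file, then copy back so that the original
    # file keeps its owner and permissions.
    sed "s|^$2[[:space:]]*=.*|$2=$3|" "$1" > "$tmp" && cat "$tmp" > "$1"
    rm -f "$tmp"
}

# Usage on the compute node (IP taken from the text):
# set_conf_value /etc/nova/nova.conf my_ip 192.168.8.155
```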

Filtered result after editing the configuration file

[root@node2 ~]# grep -n '^[a-Z]' /etc/nova/nova.conf

61:rpc_backend=rabbit

124:my_ip=192.168.8.155 // change to this machine's IP

268:enabled_apis=osapi_compute,metadata

425:auth_strategy=keystone

1053:network_api_class=nova.network.neutronv2.api.API

1171:linuxnet_interface_driver=nova.network.linux_net.NeutronLinuxBridgeInterfaceDriver

1331:security_group_api=neutron

1370:debug=true

1374:verbose=True

1760:firewall_driver = nova.virt.firewall.NoopFirewallDriver

1820:novncproxy_base_url=http://192.168.8.150:6080/vnc_auto.html // IP address and port of the novncproxy

1828:vncserver_listen=0.0.0.0 // VNC listens on 0.0.0.0

1832:vncserver_proxyclient_address= $my_ip

1835:vnc_enabled=true // enable VNC

1838:vnc_keymap=en-us // US English keyboard layout

2213:connection=mysql://nova:nova@192.168.8.150/nova

1936 [glance]

1943 host=192.168.8.150 // Glance host address

2546:auth_uri = http://192.168.8.150:5000

2547:auth_url = http://192.168.8.150:35357

2548:auth_plugin = password

2549:project_domain_id = default

2550:user_domain_id = default

2551:project_name = service

2552:username = nova

2553:password = nova

2308 # Libvirt domain type (string value)

2309 # Allowed values: kvm, lxc, qemu, uml, xen, parallels

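2310 virt_type=kvm // use KVM virtual machines; this requires CPU support, which can be checked with grep "vmx" /proc/cpuinfo or egrep -c '(vmx|svm)' /proc/cpuinfo.

If the egrep -c command returns 1 or greater, the compute node supports hardware acceleration and usually needs no further configuration.

If it returns 0, the compute node does not support hardware acceleration, and libvirt must be configured to use QEMU instead of KVM by setting virt_type=qemu.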

3807:lock_path=/var/lib/nova/tmp

3970:rabbit_host=192.168.8.150

3974:rabbit_port=5672

3986:rabbit_userid=openstack

3990:rabbit_password=openstack
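The virt_type decision described in the listing above (kvm when the CPU exposes vmx or svm, qemu otherwise) can be sketched as a small function. The name `pick_virt_type` is hypothetical, and the optional file argument exists only so the logic can be tried against a sample file:

```shell
# pick_virt_type [CPUINFO]: print "kvm" if the CPU flags include vmx (Intel)
# or svm (AMD), otherwise "qemu". Hypothetical helper name; the optional
# argument defaults to /proc/cpuinfo.
pick_virt_type() {
    # grep -c prints the number of matching lines (0 when there are none).
    count=$(grep -Ec '(vmx|svm)' "${1:-/proc/cpuinfo}")
    if [ "${count:-0}" -ge 1 ]; then
        echo kvm
    else
        echo qemu
    fi
}

# Usage on the compute node:
# pick_virt_type
```

Inside a virtual machine without nested virtualization the count is usually 0, which matches the virt_type=qemu setting in the local configuration below.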

Configuration on this machine:

[root@node2 ~]# grep -n '^[a-Z]' /etc/nova/nova.conf

198:my_ip=192.168.8.155

344:enabled_apis=osapi_compute,metadata

506:auth_strategy=keystone

838:network_api_class=nova.network.neutronv2.api.API

930:linuxnet_interface_driver=nova.network.linux_net.NeutronLinuxBridgeInterfaceDriver

1064:security_group_api=neutron

1240:firewall_driver=nova.virt.firewall.NoopFirewallDriver

1277:debug=true

1283:verbose=true

1422:rpc_backend=rabbit

1742:connection=mysql://nova:nova@192.168.8.150/nova

1943:host=192.168.8.150

2125:auth_uri = http://192.168.8.150:5000

2126:auth_url = http://192.168.8.150:35357

2127:auth_plugin = password

2128:project_domain_id = default

2129:user_domain_id = default

2130:project_name = service

2131:username = nova

2132:password = nova

2310:virt_type=qemu

2752:lock_path=/var/lib/nova/tmp

2933:rabbit_host=192.168.8.150

2937:rabbit_port=5672

2949:rabbit_userid=openstack

2953:rabbit_password=openstack

3311:novncproxy_base_url=http://192.168.8.150:6080/vnc_auto.html

3320:vncserver_listen=0.0.0.0

3325:vncserver_proxyclient_address=$my_ip

3329:enabled=true

3333:keymap=en-us

Enable and start libvirt and nova-compute on the compute node

[root@node2 ~]# systemctl enable libvirtd openstack-nova-compute

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-compute.service to /usr/lib/systemd/system/openstack-nova-compute.service.

[root@node2 ~]# systemctl start libvirtd openstack-nova-compute

On the controller node, list the registered hosts; the last entry, compute, is the newly registered host

[root@node1 ~]# openstack host list

+-----------+-------------+----------+

| Host Name | Service | Zone |

+-----------+-------------+----------+

| node1 | conductor | internal |

| node1 | consoleauth | internal |

| node1 | scheduler | internal |

| node1 | cert | internal |

| node2 | compute | nova |

+-----------+-------------+----------+
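The check above (is node2 listed with the compute service?) can be automated by parsing the table. A minimal sketch, assuming a hypothetical helper name `has_compute_host` and the table layout shown above:

```shell
# has_compute_host NAME: read `openstack host list` table output on stdin and
# succeed if NAME is listed with the "compute" service. Hypothetical helper.
has_compute_host() {
    awk -F'|' -v host="$1" '
        # Columns 2 and 3 hold the host name and service; strip the padding.
        { h = $2; s = $3; gsub(/ /, "", h); gsub(/ /, "", s) }
        h == host && s == "compute" { found = 1 }
        END { exit found ? 0 : 1 }
    '
}

# Usage on the controller node:
# openstack host list | has_compute_host node2 && echo "node2 is registered"
```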

On the controller node, verify that Nova can reach Glance and Keystone

Query results on this machine:

[root@node1 ~]# nova image-list

+--------------------------------------+--------+--------+--------+

| ID | Name | Status | Server |

+--------------------------------------+--------+--------+--------+

| 7ecc81e4-2b8f-4834-8686-00add29b70c4 | cirros | ACTIVE | |

+--------------------------------------+--------+--------+--------+

[root@node1 ~]# nova endpoints

WARNING: nova has no endpoint in ! Available endpoints for this service:

+-----------+---------------------------------------------------------------+

| nova | Value |

+-----------+---------------------------------------------------------------+

| id | 100773762e7f483daf1332afeef09382 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.8.150:8774/v2/572d9f4e16574cd6855bee7f0115cda7 |

+-----------+---------------------------------------------------------------+

+-----------+---------------------------------------------------------------+

| nova | Value |

+-----------+---------------------------------------------------------------+

| id | 28b1ad74bcee4564861ec106d1755824 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.8.150:8774/v2/572d9f4e16574cd6855bee7f0115cda7 |

+-----------+---------------------------------------------------------------+

+-----------+---------------------------------------------------------------+

| nova | Value |

+-----------+---------------------------------------------------------------+

| id | fc38ec07e26e46ae8c026a9fc06e77dd |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.8.150:8774/v2/572d9f4e16574cd6855bee7f0115cda7 |

+-----------+---------------------------------------------------------------+

WARNING: glance has no endpoint in ! Available endpoints for this service:

+-----------+----------------------------------+

| glance | Value |

+-----------+----------------------------------+

| id | 5e7489ec5c1f425b85412a5f02b6012b |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.8.150:9292 |

+-----------+----------------------------------+

+-----------+----------------------------------+

| glance | Value |

+-----------+----------------------------------+

| id | 733af08c94d640c0a6db0de7876a6d1b |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.8.150:9292 |

+-----------+----------------------------------+

+-----------+----------------------------------+

| glance | Value |

+-----------+----------------------------------+

| id | cf60f4e48d0c402c870d8239bebb064c |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.8.150:9292 |

+-----------+----------------------------------+

WARNING: keystone has no endpoint in ! Available endpoints for this service:

+-----------+----------------------------------+

| keystone | Value |

+-----------+----------------------------------+

| id | 4cf9cab338934ae19240f3935621da1f |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.8.150:5000/v2.0 |

+-----------+----------------------------------+

+-----------+----------------------------------+

| keystone | Value |

+-----------+----------------------------------+

| id | 54b10b9ff3c9487a87a1c8ae3b770d28 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.8.150:5000/v2.0 |

+-----------+----------------------------------+

+-----------+----------------------------------+

| keystone | Value |

+-----------+----------------------------------+

| id | ab228da361974bf8b6010f3b08271ac7 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.8.150:35357/v2.0 |

+-----------+----------------------------------+

3.8 Deploying the Neutron Service

Register the Neutron service

[root@node1 ~]# source admin-openrc.sh

[root@node1 ~]# openstack service create --name neutron --description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | c208003fbb63477b85b5b20effb5e36b |

| name | neutron |

| type | network |

+-------------+----------------------------------+

[root@node1 ~]# openstack endpoint create --region RegionOne network public http://192.168.8.150:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | ae523c7a0fc44b91a0f4666c78bc0710 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | c208003fbb63477b85b5b20effb5e36b |

| service_name | neutron |

| service_type | network |

| url | http://192.168.8.150:9696 |

+--------------+----------------------------------+

[root@node1 ~]# openstack endpoint create --region RegionOne network internal http://192.168.8.150:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | b01b0cc592dc4d5087f89cf7afb9dec1 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | c208003fbb63477b85b5b20effb5e36b |

| service_name | neutron |

| service_type | network |

| url | http://192.168.8.150:9696 |

+--------------+----------------------------------+

[root@node1 ~]# openstack endpoint create --region RegionOne network admin http://192.168.8.150:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 14bd745d9bb64269bad11ece209bf3f3 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | c208003fbb63477b85b5b20effb5e36b |

| service_name | neutron |

| service_type | network |

| url | http://192.168.8.150:9696 |

+--------------+----------------------------------+

Create the neutron user, add it to the service project, and grant it the admin role

[root@node1 ~]# openstack user create --domain default --password=neutron neutron

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | aa76750cf0414ba59e9b2ae3952994b8 |

| name | neutron |

+-----------+----------------------------------+

[root@node1 ~]# openstack role add --project service --user neutron admin

****Edit the Neutron configuration file****

[root@node1 ~]# grep -n "^[a-Z]" /etc/neutron/neutron.conf

1 [DEFAULT]

3 verbose = True

20:state_path = /var/lib/neutron

60 core_plugin = ml2 // the core plugin is ml2

61 # Example: core_plugin = ml2

78 service_plugins = router // the service plugin is router

93 auth_strategy = keystone

361 notify_nova_on_port_status_changes = True // notify Nova when a port's status changes

365 notify_nova_on_port_data_changes = True

368 nova_url = http://192.168.8.150:8774/v2

574 rpc_backend=rabbit

720 [keystone_authtoken]

726 auth_uri = http://192.168.8.150:5000

727 auth_url = http://192.168.8.150:35357

728 auth_plugin = password

729 project_domain_id = default

730 user_domain_id = default

731 project_name = service

732 username = neutron

733 password = neutron

727 [database]

731 connection = mysql://neutron:neutron@192.168.8.150:3306/neutron

786 [nova]

787 auth_url = http://192.168.8.150:35357

788 auth_plugin = password

789 project_domain_id = default

790 user_domain_id = default

791 region_name = RegionOne

792 project_name = service

793 username = nova

794 password = nova

831 lock_path = $state_path/lock

957 [oslo_messaging_rabbit]

1000 rabbit_host = 192.168.8.150

1004 rabbit_port = 5672

1016 rabbit_userid = openstack

1020 rabbit_password = openstack

Configuration on this machine:

[root@node1 ~]# grep -n "^[a-Z]" /etc/neutron/neutron.conf

3:verbose = True

20:state_path = /var/lib/neutron

60:core_plugin = ml2

78:service_plugins = router

93:auth_strategy = keystone

361:notify_nova_on_port_status_changes = True

365:notify_nova_on_port_data_changes = True

368:nova_url = http://192.168.8.150:8774/v2

574:rpc_backend=rabbit

727:auth_uri = http://192.168.8.150:5000

728:auth_url = http://192.168.8.150:35357

729:auth_plugin = password

730:project_domain_id = default

731:user_domain_id = default

732:project_name = service

733:username = neutron

734:password = neutron

740:connection = mysql://neutron:neutron@192.168.8.150:3306/neutron

787:auth_url = http://192.168.8.150:35357

788:auth_plugin = password

789:project_domain_id = default

790:user_domain_id = default

791:region_name = RegionOne

792:project_name = service

793:username = nova

794:password = nova

831:lock_path = $state_path/lock

1011:rabbit_host = 192.168.8.150

1015:rabbit_port = 5672

1027:rabbit_userid = openstack

1031:rabbit_password = openstack

Edit the ML2 configuration file; ML2 is covered in detail later

[root@node1 ~]# grep "^[a-Z]" /etc/neutron/plugins/ml2/ml2_conf.ini

1 [ml2]

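type_drivers = flat,vlan,gre,vxlan,geneve // the available type drivers

tenant_network_types = vlan,gre,vxlan,geneve // tenant network types

mechanism_drivers = openvswitch,linuxbridge // supported underlying mechanism drivers

extension_drivers = port_security // port security

flat_networks = physnet1 // use a single flat network (the same network as the hosts)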

enable_ipset = True

Configuration on this machine:

[root@node1 ~]# grep -n "^[a-Z]" /etc/neutron/plugins/ml2/ml2_conf.ini

5:type_drivers = flat,vlan,gre,vxlan,geneve

12:tenant_network_types = vlan,gre,vxlan,geneve

18:mechanism_drivers = openvswitch,linuxbridge

28:extension_drivers = port_security

69:flat_networks = physnet1

123:enable_ipset = True

Edit the linuxbridge configuration file

[root@node1 ~]# grep -n "^[a-Z]" /etc/neutron/plugins/ml2/linuxbridge_agent.ini

1 [linux_bridge]

9:physical_interface_mappings = physnet1:eth0 // maps physnet1 to the eth0 NIC

12 [vxlan]

16:enable_vxlan = false // disable VXLAN

48 [agent]

51:prevent_arp_spoofing = True

58 [securitygroup]

60:firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

61:enable_security_group = True

Configuration on this machine:

[root@node1 ~]# grep -n "^[a-Z]" /etc/neutron/plugins/ml2/linuxbridge_agent.ini

9:physical_interface_mappings = physnet1:eth0

16:enable_vxlan = false

56:prevent_arp_spoofing = True

60:firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

66:enable_security_group = True
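The label physnet1 appears in both files: in flat_networks in ml2_conf.ini and in physical_interface_mappings in linuxbridge_agent.ini. A minimal sketch of a consistency check, assuming hypothetical helper names `conf_value` and `physnet_consistent` and a single entry in each option, as in the listings above:

```shell
# conf_value FILE KEY: print the value of the first active "KEY = value" line.
# physnet_consistent ML2_INI LB_INI: succeed if the label in flat_networks
# equals the label before ":" in physical_interface_mappings.
# Both helper names are hypothetical; single-entry values are assumed.
conf_value() {
    sed -n "s/^$2[[:space:]]*=[[:space:]]*//p" "$1" | head -n 1
}

physnet_consistent() {
    flat=$(conf_value "$1" flat_networks)
    mapping=$(conf_value "$2" physical_interface_mappings)
    # ${mapping%%:*} keeps only the part before the first ":".
    [ -n "$flat" ] && [ "$flat" = "${mapping%%:*}" ]
}

# Usage on the controller node (paths taken from the text):
# physnet_consistent /etc/neutron/plugins/ml2/ml2_conf.ini \
#     /etc/neutron/plugins/ml2/linuxbridge_agent.ini && echo "labels match"
```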

Edit the DHCP agent configuration file

[root@node1 ~]# grep -n "^[a-Z]" /etc/neutron/dhcp_agent.ini

27:interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

31:dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq // Dnsmasq is the default DHCP service

55 enable_isolated_metadata = True

Configuration on this machine:

[root@node1 ~]# grep -n "^[a-Z]" /etc/neutron/dhcp_agent.ini

27:interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

34:dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

55:enable_isolated_metadata = True

Edit the metadata_agent.ini configuration file

[root@node1 ~]# grep -n "^[a-Z]" /etc/neutron/metadata_agent.ini

1 [DEFAULT]

4:auth_uri = http://192.168.8.150:5000

5:auth_url = http://192.168.8.150:35357

6:auth_region = RegionOne

7:auth_plugin = password

8:project_domain_id = default

9:user_domain_id = default

10:project_name = service

11:username = neutron

12:password = neutron

29:nova_metadata_ip = 192.168.8.150

53:metadata_proxy_shared_secret = neutron

Comment out lines 21-23

On the controller node, add the Neutron settings to Nova's configuration by adding the following to the [neutron] section

[root@node1 ~]# vi /etc/nova/nova.conf

[neutron]

2575:url = http://192.168.8.150:9696

2636:auth_url = http://192.168.8.150:35357

2639:auth_plugin = password

2666:project_domain_id = default

2691:user_domain_id = default

2610:region_name = RegionOne

2675 project_name = service

2701:username = neutron

2663:password = neutron

2565:service_metadata_proxy = True

2568:metadata_proxy_shared_secret = neutron

Create a symbolic link for the ML2 configuration

[root@node1 ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Sync the Neutron database and check the result

[root@node1 ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Running upgrade for neutron ...

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade -> juno, juno_initial

INFO [alembic.runtime.migration] Running upgrade juno -> 44621190bc02, add_uniqueconstraint_ipavailability_ranges

INFO [alembic.runtime.migration] Running upgrade 44621190bc02 -> 1f71e54a85e7, ml2_network_segments models change for multi-segment network.

INFO [alembic.runtime.migration] Running upgrade 1f71e54a85e7 -> 408cfbf6923c, remove ryu plugin

INFO [alembic.runtime.migration] Running upgrade 408cfbf6923c -> 28c0ffb8ebbd, remove mlnx plugin

INFO [alembic.runtime.migration] Running upgrade 28c0ffb8ebbd -> 57086602ca0a, scrap_nsx_adv_svcs_models

INFO [alembic.runtime.migration] Running upgrade 57086602ca0a -> 38495dc99731, ml2_tunnel_endpoints_table

INFO [alembic.runtime.migration] Running upgrade 38495dc99731 -> 4dbe243cd84d, nsxv

INFO [alembic.runtime.migration] Running upgrade 4dbe243cd84d -> 41662e32bce2, L3 DVR SNAT mapping

INFO [alembic.runtime.migration] Running upgrade 41662e32bce2 -> 2a1ee2fb59e0, Add mac_address unique constraint

INFO [alembic.runtime.migration] Running upgrade 2a1ee2fb59e0 -> 26b54cf9024d, Add index on allocated

INFO [alembic.runtime.migration] Running upgrade 26b54cf9024d -> 14be42f3d0a5, Add default security group table

INFO [alembic.runtime.migration] Running upgrade 14be42f3d0a5 -> 16cdf118d31d, extra_dhcp_options IPv6 support

INFO [alembic.runtime.migration] Running upgrade 16cdf118d31d -> 43763a9618fd, add mtu attributes to network

INFO [alembic.runtime.migration] Running upgrade 43763a9618fd -> bebba223288, Add vlan transparent property to network

INFO [alembic.runtime.migration] Running upgrade bebba223288 -> 4119216b7365, Add index on tenant_id column

INFO [alembic.runtime.migration] Running upgrade 4119216b7365 -> 2d2a8a565438, ML2 hierarchical binding

INFO [alembic.runtime.migration] Running upgrade 2d2a8a565438 -> 2b801560a332, Remove Hyper-V Neutron Plugin

INFO [alembic.runtime.migration] Running upgrade 2b801560a332 -> 57dd745253a6, nuage_kilo_migrate

INFO [alembic.runtime.migration] Running upgrade 57dd745253a6 -> f15b1fb526dd, Cascade Floating IP Floating Port deletion

INFO [alembic.runtime.migration] Running upgrade f15b1fb526dd -> 341ee8a4ccb5, sync with cisco repo

INFO [alembic.runtime.migration] Running upgrade 341ee8a4ccb5 -> 35a0f3365720, add port-security in ml2

INFO [alembic.runtime.migration] Running upgrade 35a0f3365720 -> 1955efc66455, weight_scheduler

INFO [alembic.runtime.migration] Running upgrade 1955efc66455 -> 51c54792158e, Initial operations for subnetpools

INFO [alembic.runtime.migration] Running upgrade 51c54792158e -> 589f9237ca0e, Cisco N1kv ML2 driver tables

INFO [alembic.runtime.migration] Running upgrade 589f9237ca0e -> 20b99fd19d4f, Cisco UCS Manager Mechanism Driver

INFO [alembic.runtime.migration] Running upgrade 20b99fd19d4f -> 034883111f, Remove allow_overlap from subnetpools

INFO [alembic.runtime.migration] Running upgrade 034883111f -> 268fb5e99aa2, Initial operations in support of subnet allocation from a pool

INFO [alembic.runtime.migration] Running upgrade 268fb5e99aa2 -> 28a09af858a8, Initial operations to support basic quotas on prefix space in a subnet pool

INFO [alembic.runtime.migration] Running upgrade 28a09af858a8 -> 20c469a5f920, add index for port

INFO [alembic.runtime.migration] Running upgrade 20c469a5f920 -> kilo, kilo

INFO [alembic.runtime.migration] Running upgrade kilo -> 354db87e3225, nsxv_vdr_metadata.py

INFO [alembic.runtime.migration] Running upgrade 354db87e3225 -> 599c6a226151, neutrodb_ipam

INFO [alembic.runtime.migration] Running upgrade 599c6a226151 -> 52c5312f6baf, Initial operations in support of address scopes

INFO [alembic.runtime.migration] Running upgrade 52c5312f6baf -> 313373c0ffee, Flavor framework

INFO [alembic.runtime.migration] Running upgrade 313373c0ffee -> 8675309a5c4f, network_rbac

INFO [alembic.runtime.migration] Running upgrade kilo -> 30018084ec99, Initial no-op Liberty contract rule.

INFO [alembic.runtime.migration] Running upgrade 30018084ec99, 8675309a5c4f -> 4ffceebfada, network_rbac

INFO [alembic.runtime.migration] Running upgrade 4ffceebfada -> 5498d17be016, Drop legacy OVS and LB plugin tables

INFO [alembic.runtime.migration] Running upgrade 5498d17be016 -> 2a16083502f3, Metaplugin removal

INFO [alembic.runtime.migration] Running upgrade 2a16083502f3 -> 2e5352a0ad4d, Add missing foreign keys

INFO [alembic.runtime.migration] Running upgrade 2e5352a0ad4d -> 11926bcfe72d, add geneve ml2 type driver

INFO [alembic.runtime.migration] Running upgrade 11926bcfe72d -> 4af11ca47297, Drop cisco monolithic tables

INFO [alembic.runtime.migration] Running upgrade 8675309a5c4f -> 45f955889773, quota_usage

INFO [alembic.runtime.migration] Running upgrade 45f955889773 -> 26c371498592, subnetpool hash

INFO [alembic.runtime.migration] Running upgrade 26c371498592 -> 1c844d1677f7, add order to dnsnameservers

INFO [alembic.runtime.migration] Running upgrade 1c844d1677f7 -> 1b4c6e320f79, address scope support in subnetpool

INFO [alembic.runtime.migration] Running upgrade 1b4c6e320f79 -> 48153cb5f051, qos db changes

INFO [alembic.runtime.migration] Running upgrade 48153cb5f051 -> 9859ac9c136, quota_reservations

INFO [alembic.runtime.migration] Running upgrade 9859ac9c136 -> 34af2b5c5a59, Add dns_name to Port

OK

Check the result of the synchronization:

MariaDB [(none)]> use neutron;

Database changed

MariaDB [neutron]> show tables;

+-----------------------------------------+

| Tables_in_neutron |

+-----------------------------------------+

| address_scopes |

| agents |

| alembic_version |

| allowedaddresspairs |

| arista_provisioned_nets |

| arista_provisioned_tenants |

| arista_provisioned_vms |

| brocadenetworks |

| brocadeports |

| cisco_csr_identifier_map |

| cisco_hosting_devices |

| cisco_ml2_apic_contracts |

| cisco_ml2_apic_host_links |

| cisco_ml2_apic_names |

| cisco_ml2_n1kv_network_bindings |

| cisco_ml2_n1kv_network_profiles |

| cisco_ml2_n1kv_policy_profiles |

| cisco_ml2_n1kv_port_bindings |

| cisco_ml2_n1kv_profile_bindings |

| cisco_ml2_n1kv_vlan_allocations |

| cisco_ml2_n1kv_vxlan_allocations |

| cisco_ml2_nexus_nve |

| cisco_ml2_nexusport_bindings |

| cisco_port_mappings |

| cisco_router_mappings |

| consistencyhashes |

| csnat_l3_agent_bindings |

| default_security_group |

| dnsnameservers |

| dvr_host_macs |

| embrane_pool_port |

| externalnetworks |

| extradhcpopts |

| firewall_policies |

| firewall_rules |

| firewalls |

| flavors |

| flavorserviceprofilebindings |

| floatingips |

| ha_router_agent_port_bindings |

| ha_router_networks |

| ha_router_vrid_allocations |

| healthmonitors |

| ikepolicies |

| ipallocationpools |

| ipallocations |

| ipamallocationpools |

| ipamallocations |

| ipamavailabilityranges |

| ipamsubnets |

| ipavailabilityranges |

| ipsec_site_connections |

| ipsecpeercidrs |

| ipsecpolicies |

| lsn |

| lsn_port |

| maclearningstates |

| members |

| meteringlabelrules |

| meteringlabels |

| ml2_brocadenetworks |

| ml2_brocadeports |

| ml2_dvr_port_bindings |

| ml2_flat_allocations |

| ml2_geneve_allocations |

| ml2_geneve_endpoints |

| ml2_gre_allocations |

| ml2_gre_endpoints |

| ml2_network_segments |

| ml2_nexus_vxlan_allocations |

| ml2_nexus_vxlan_mcast_groups |

| ml2_port_binding_levels |

| ml2_port_bindings |

| ml2_ucsm_port_profiles |

| ml2_vlan_allocations |

| ml2_vxlan_allocations |

| ml2_vxlan_endpoints |

| multi_provider_networks |

| networkconnections |

| networkdhcpagentbindings |

| networkgatewaydevicereferences |

| networkgatewaydevices |

| networkgateways |

| networkqueuemappings |

| networkrbacs |

| networks |

| networksecuritybindings |

| neutron_nsx_network_mappings |

| neutron_nsx_port_mappings |

| neutron_nsx_router_mappings |

| neutron_nsx_security_group_mappings |

| nexthops |

| nsxv_edge_dhcp_static_bindings |

| nsxv_edge_vnic_bindings |

| nsxv_firewall_rule_bindings |

| nsxv_internal_edges |

| nsxv_internal_networks |

| nsxv_port_index_mappings |

| nsxv_port_vnic_mappings |

| nsxv_router_bindings |

| nsxv_router_ext_attributes |

| nsxv_rule_mappings |

| nsxv_security_group_section_mappings |

| nsxv_spoofguard_policy_network_mappings |

| nsxv_tz_network_bindings |

| nsxv_vdr_dhcp_bindings |

| nuage_net_partition_router_mapping |

| nuage_net_partitions |

| nuage_provider_net_bindings |

| nuage_subnet_l2dom_mapping |

| ofcfiltermappings |

| ofcnetworkmappings |

| ofcportmappings |

| ofcroutermappings |

| ofctenantmappings |

| packetfilters |

| poolloadbalanceragentbindings |

| poolmonitorassociations |

| pools |

| poolstatisticss |

| portbindingports |

| portinfos |

| portqueuemappings |

| ports |

| portsecuritybindings |

| providerresourceassociations |

| qos_bandwidth_limit_rules |

| qos_network_policy_bindings |

| qos_policies |

| qos_port_policy_bindings |

| qosqueues |

| quotas |

| quotausages |

| reservations |

| resourcedeltas |

| router_extra_attributes |

| routerl3agentbindings |

| routerports |

| routerproviders |

| routerroutes |

| routerrules |

| routers |

| securitygroupportbindings |

| securitygrouprules |

| securitygroups |

| serviceprofiles |

| sessionpersistences |

| subnetpoolprefixes |

| subnetpools |

| subnetroutes |

| subnets |

| tz_network_bindings |

| vcns_router_bindings |

| vips |

| vpnservices |

+-----------------------------------------+

155 rows in set (0.00 sec)

Restart nova-api, then enable and start the Neutron services:

[root@node1 ~]# systemctl restart openstack-nova-api.service

[root@node1 ~]# systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-server.service to /usr/lib/systemd/system/neutron-server.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-linuxbridge-agent.service to /usr/lib/systemd/system/neutron-linuxbridge-agent.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-dhcp-agent.service to /usr/lib/systemd/system/neutron-dhcp-agent.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-metadata-agent.service to /usr/lib/systemd/system/neutron-metadata-agent.service.

[root@node1 ~]# systemctl start neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

Check the Neutron agents:

[root@node1 ~]# neutron agent-list

+--------------------------------------+--------------------+-------+-------+----------------+---------------------------+

| id | agent_type | host | alive | admin_state_up | binary |

+--------------------------------------+--------------------+-------+-------+----------------+---------------------------+

| 46374c4b-098e-477d-8423-ae3787d58881 | Linux bridge agent | node1 | :-) | True | neutron-linuxbridge-agent |

| a6dd32be-341a-49ad-a1ae-6ec6944a9dc1 | Metadata agent | node1 | :-) | True | neutron-metadata-agent |

| c3606b15-fecf-4142-b664-61cbc712c781 | DHCP agent | node1 | :-) | True | neutron-dhcp-agent |

+--------------------------------------+--------------------+-------+-------+----------------+---------------------------+

Next, deploy Neutron on the compute node. The configuration files can be copied over with scp as they are, with no changes needed:

[root@node1 ~]# scp /etc/neutron/neutron.conf 192.168.8.155:/etc/neutron/

root@192.168.8.155's password:

neutron.conf 100% 36KB 35.8KB/s 00:00

[root@node1 ~]# scp /etc/neutron/plugins/ml2/linuxbridge_agent.ini 192.168.8.155:/etc/neutron/plugins/ml2/

root@192.168.8.155's password:

linuxbridge_agent.ini 100% 2808 2.7KB/s 00:00

On the compute node, edit the nova configuration and add the following to the [neutron] section:

[root@node2 ~]# vi /etc/nova/nova.conf

[neutron]

url = http://192.168.8.150:9696

auth_url = http://192.168.8.150:35357

auth_plugin = password

project_domain_id = default

user_domain_id = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

service_metadata_proxy = True

metadata_proxy_shared_secret = neutron
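A missing key in this section is easy to overlook when editing a file of several thousand lines. The following is a minimal sketch, not part of the original procedure, that uses Python's standard configparser to report which of the keys listed above are absent. The function name `missing_neutron_keys` and the `SAMPLE` text (including its `my_ip` value) are illustrative assumptions; on a real node you would pass in the contents of /etc/nova/nova.conf.

```python
import configparser

# Keys that the [neutron] section above sets; the list comes from this walkthrough.
REQUIRED_KEYS = [
    "url", "auth_url", "auth_plugin", "project_domain_id", "user_domain_id",
    "region_name", "project_name", "username", "password",
    "service_metadata_proxy", "metadata_proxy_shared_secret",
]


def missing_neutron_keys(conf_text):
    """Return the required [neutron] keys that are absent from conf_text."""
    # interpolation=None keeps values such as $my_ip or % signs literal;
    # strict=False tolerates repeated options and sections.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    if not parser.has_section("neutron"):
        return list(REQUIRED_KEYS)
    return [key for key in REQUIRED_KEYS if not parser.has_option("neutron", key)]


# Hypothetical sample with one key deliberately left out.
SAMPLE = """
[DEFAULT]
my_ip = 192.168.8.155

[neutron]
url = http://192.168.8.150:9696
auth_url = http://192.168.8.150:35357
auth_plugin = password
project_domain_id = default
user_domain_id = default
region_name = RegionOne
project_name = service
username = neutron
password = neutron
service_metadata_proxy = True
"""

print(missing_neutron_keys(SAMPLE))
```

With the sample above, the only key reported is `metadata_proxy_shared_secret`, the one that was left out.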

Copy ml2_conf.ini to the compute node unchanged, then create the plugin.ini symlink that points to it:

[root@node1 ~]# scp /etc/neutron/plugins/ml2/ml2_conf.ini 192.168.8.155:/etc/neutron/plugins/ml2/

root@192.168.8.155's password:

ml2_conf.ini 100% 4874 4.8KB/s 00:00

[root@node2 ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Restart nova-compute on the compute node:

[root@node2 ~]# systemctl restart openstack-nova-compute.service

Enable and start the Linux bridge agent on the compute node:

[root@node2 ~]# systemctl enable neutron-linuxbridge-agent.service

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-linuxbridge-agent.service to /usr/lib/systemd/system/neutron-linuxbridge-agent.service.

[root@node2 ~]# systemctl start neutron-linuxbridge-agent.service

Check the Neutron agents again. Four entries (three on the control node, one on the compute node) mean the deployment is correct:

[root@node1 ~]# neutron agent-list

+--------------------------------------+--------------------+-------+-------+----------------+---------------------------+

| id | agent_type | host | alive | admin_state_up | binary |

+--------------------------------------+--------------------+-------+-------+----------------+---------------------------+

| 46374c4b-098e-477d-8423-ae3787d58881 | Linux bridge agent | node1 | :-) | True | neutron-linuxbridge-agent |

| a6dd32be-341a-49ad-a1ae-6ec6944a9dc1 | Metadata agent | node1 | :-) | True | neutron-metadata-agent |

| c3606b15-fecf-4142-b664-61cbc712c781 | DHCP agent | node1 | :-) | True | neutron-dhcp-agent |

+--------------------------------------+--------------------+-------+-------+----------------+---------------------------+

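The output of neutron agent-list is clearly wrong: the agent on node2 is missing. The configuration on the node2 compute node had no errors, its services were active, and the network was reachable, which left SELinux and the firewall as suspects. The firewall is not installed by default because the system is a minimal install, but SELinux turned out to be enabled: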

[root@node2 ~]# getenforce

Enforcing

[root@node2 ~]# setenforce 0

[root@node2 ~]# getenforce

Permissive

[root@node2 ~]# systemctl restart openstack-nova-compute.service

[root@node2 ~]# systemctl restart neutron-linuxbridge-agent.service
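Note that `setenforce 0` only lasts until the next reboot; the persistent mode is read from the `SELINUX=` line in /etc/selinux/config. The sketch below is an addition to the original steps: it shows one way to rewrite that line in the file's text. The function name `set_selinux_mode` and the `SAMPLE` content are illustrative assumptions, and it deliberately works on a string so you can inspect the result before writing anything back to disk.

```python
import re


def set_selinux_mode(config_text, mode="permissive"):
    """Return config_text with its SELINUX= line set to the given mode."""
    if mode not in ("enforcing", "permissive", "disabled"):
        raise ValueError("unknown SELinux mode: %s" % mode)
    # Match only the SELINUX= line; SELINUXTYPE= does not match because
    # the pattern requires '=' directly after SELINUX.
    new_text, count = re.subn(r"(?m)^SELINUX=.*$", "SELINUX=" + mode, config_text)
    if count == 0:
        # No such line yet: append one.
        new_text = config_text.rstrip("\n") + "\nSELINUX=" + mode + "\n"
    return new_text


# Hypothetical file content, shaped like a typical /etc/selinux/config.
SAMPLE = (
    "# This file controls the state of SELinux on the system.\n"
    "SELINUX=enforcing\n"
    "SELINUXTYPE=targeted\n"
)

print(set_selinux_mode(SAMPLE))
```

Permissive mode is a troubleshooting convenience for a lab setup like this one; writing a proper SELinux policy would be the stricter alternative.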

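With SELinux temporarily set to permissive mode and the compute service and Linux bridge agent restarted on node2, the agent list on the Neutron server (node1) now looks like this: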

[root@node1 ~]# neutron agent-list

+--------------------------------------+--------------------+-------+-------+----------------+---------------------------+

| id | agent_type | host | alive | admin_state_up | binary |

+--------------------------------------+--------------------+-------+-------+----------------+---------------------------+

| 0d698be2-fbe8-4539-b8c2-b7856c5e4ccb | Linux bridge agent | node2 | :-) | True | neutron-linuxbridge-agent |

| 46374c4b-098e-477d-8423-ae3787d58881 | Linux bridge agent | node1 | :-) | True | neutron-linuxbridge-agent |

| a6dd32be-341a-49ad-a1ae-6ec6944a9dc1 | Metadata agent | node1 | :-) | True | neutron-metadata-agent |

| c3606b15-fecf-4142-b664-61cbc712c781 | DHCP agent | node1 | :-) | True | neutron-dhcp-agent |

+--------------------------------------+--------------------+-------+-------+----------------+---------------------------+

The neutron agent-list output is now correct: four agents in total, three on node1 and one on node2.
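Counting the rows by eye works for two nodes but gets tedious as more are added. The following is a small sketch, assuming the table layout shown above, that counts the agents marked `:-)` per host. The function name `alive_agents_per_host` is hypothetical, and the text would come from capturing the output of `neutron agent-list` yourself.

```python
from collections import Counter


def alive_agents_per_host(table_text):
    """Count agents marked ':-)' per host in `neutron agent-list` table output."""
    counts = Counter()
    header = None
    for line in table_text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip the +---+ borders and blank lines
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if header is None:
            header = cells  # the first | row holds the column names
            continue
        row = dict(zip(header, cells))
        if row.get("alive") == ":-)":
            counts[row["host"]] += 1
    return dict(counts)


# The table from the step above, shortened to the relevant rows.
SAMPLE = """
| id | agent_type | host | alive | admin_state_up | binary |
| 0d698be2-fbe8-4539-b8c2-b7856c5e4ccb | Linux bridge agent | node2 | :-) | True | neutron-linuxbridge-agent |
| 46374c4b-098e-477d-8423-ae3787d58881 | Linux bridge agent | node1 | :-) | True | neutron-linuxbridge-agent |
| a6dd32be-341a-49ad-a1ae-6ec6944a9dc1 | Metadata agent | node1 | :-) | True | neutron-metadata-agent |
| c3606b15-fecf-4142-b664-61cbc712c781 | DHCP agent | node1 | :-) | True | neutron-dhcp-agent |
"""

print(alive_agents_per_host(SAMPLE))
```

For the table above this reports three alive agents on node1 and one on node2, which matches the expected total of four.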

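4. Creating a virtual machine

Network diagram, and creating a real bridged network

Create a single flat network named flat. Its network type is flat, it is shared (--shared), and its provider physical network is physnet1, which is mapped to eth0.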

[root@node1 ~]# source admin-openrc.sh

[root@node1 ~]# neutron net-create flat --shared --provider:physical_network physnet1 --provider:network_type flat

Created a new network:

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | True |

| id | 7e68ff60-b8a0-4506-b47f-9026dea6a682 |

| mtu | 0 |

| name | flat |

| port_security_enabled | True |

| provider:network_type | flat |

| provider:physical_network | physnet1 |

| provider:segmentation_id | |

| router:external | False |

| shared | True |

| status | ACTIVE |

| subnets | |

| tenant_id | 4c1ee18980c64098a1e86fb43a2429bb |

+---------------------------+--------------------------------------+

Create a subnet named flat-subnet on the network from the previous step, and set its DNS server and gateway:

[root@node1 ~]# neutron subnet-create flat 192.168.8.0/24 --name flat-subnet --allocation-pool start=192.168.8.160,end=192.168.8.189 --dns-nameserver 192.168.8.2 --gateway 192.168.8.2

Created a new subnet:

+-------------------+----------------------------------------------------+

| Field | Value |

+-------------------+----------------------------------------------------+

| allocation_pools | {"start": "192.168.8.160", "end": "192.168.8.189"} |

| cidr | 192.168.8.0/24 |

| dns_nameservers | 192.168.8.2 |

| enable_dhcp | True |

| gateway_ip | 192.168.8.2 |

| host_routes | |

| id | 55797b4f-532b-4a1d-8b2b-620a2d45e9a9 |

| ip_version | 4 |

| ipv6_address_mode | |

| ipv6_ra_mode | |

| name | flat-subnet |

| network_id | a2263708-13d2-490d-a4bf-1f55d55f32ef |

| subnetpool_id | |

| tenant_id | 572d9f4e16574cd6855bee7f0115cda7 |

+-------------------+----------------------------------------------------+

View the network and subnet that were created:

[root@node1 ~]# neutron subnet-list

+--------------------------------------+-------------+----------------+----------------------------------------------------+

| id | name | cidr | allocation_pools |

+--------------------------------------+-------------+----------------+----------------------------------------------------+

| 55797b4f-532b-4a1d-8b2b-620a2d45e9a9 | flat-subnet | 192.168.8.0/24 | {"start": "192.168.8.160", "end": "192.168.8.189"} |

+--------------------------------------+-------------+----------------+----------------------------------------------------+
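The allocation pool determines how many instances can receive an address on this subnet. As a quick sanity check, here is a sketch using Python's standard ipaddress module to confirm that the pool lies inside the CIDR and to count its addresses. The helper name `pool_size` is an assumption made for illustration.

```python
import ipaddress


def pool_size(cidr, start, end):
    """Return the number of addresses in an allocation pool inside cidr."""
    network = ipaddress.ip_network(cidr)
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    if first not in network or last not in network:
        raise ValueError("allocation pool lies outside " + cidr)
    if first > last:
        raise ValueError("pool start is after pool end")
    # Both ends are inclusive.
    return int(last) - int(first) + 1


print(pool_size("192.168.8.0/24", "192.168.8.160", "192.168.8.189"))  # 30
```

With the values used in subnet-create above, the pool holds 30 addresses, so at most 30 ports (instances, plus the DHCP port) can be allocated from it.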

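Note: a network must not have more than one DHCP server, so be sure to turn off any other DHCP service on this network before creating the virtual machine.

Now create the virtual machine. To be able to log in to it, first generate a public/private key pair and add the public key to OpenStack: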

[root@node1 ~]# source demo-openrc.sh

[root@node1 ~]# ssh-keygen -q -N ""

Enter file in which to save the key (/root/.ssh/id_rsa):

[root@node1 ~]# nova keypair-add --pub-key .ssh/id_rsa.pub mykey

Message from syslogd@node1 at Oct 22 09:31:31 ...

kernel:BUG: soft lockup - CPU#0 stuck for 25s! [kswapd0:153]

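That failed. Here is what the message means:

[PhD] 北京-林夕 (630995935) 9:57:40

The CPU was unresponsive for a while.

"CPU#0 stuck for 25s!" Did you see that? "soft lockup" means exactly that, a soft lockup.

[PhD] ~~~@懒人一个 (1729294227) 9:58:52

How do I fix it?

[PhD] 北京-林夕 (630995935) 9:59:04

Running an OpenStack platform eats a lot of CPU and memory.

[PhD] ~~~@懒人一个 (1729294227) 9:59:14

True,

I noticed that too.

[PhD] ~~~@懒人一个 (1729294227) 10:00:34

Could it be that there is not enough memory?

[Master] 北京-sky (946731984) 10:01:54

The system just cannot keep up.

[PhD] 北京-林夕 (630995935) 10:01:59

The fix is to give the system more CPU and memory.

[Master] 北京-sky (946731984) 10:02:03

Also, are you running the nova node inside a virtual machine?

If so, the resources are overcommitted.

[PhD] ~~~@懒人一个 (1729294227) 10:02:36

Got it, yes, I am.

OK, thanks.

After increasing the CPU and memory allocation, I tried again and it worked.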

[root@node1 ~]# nova keypair-add --pub-key .ssh/id_rsa.pub mykey

[root@node1 ~]# nova keypair-list

+-------+-------------------------------------------------+

| Name | Fingerprint |

+-------+-------------------------------------------------+

| mykey | cb:f8:66:58:49:84:62:21:c2:89:fb:fd:de:a1:93:19 |

+-------+-------------------------------------------------+

[root@node1 ~]# ls .ssh/

id_rsa id_rsa.pub known_hosts
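The soft lockup earlier was reported against kswapd0, the kernel thread that reclaims memory pages, which fits the diagnosis that the node was short on memory. Before retrying on a small test machine, it can help to look at how much memory is actually available. The following is a rough sketch that parses /proc/meminfo-style text; the function names and the numbers in `SAMPLE` are made up for illustration and are not measurements from this setup.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of {field: kibibytes}."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Name: value" line
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def available_mib(text):
    """Return available memory in MiB, falling back to MemFree if needed."""
    info = parse_meminfo(text)
    # MemAvailable is missing on older kernels; MemFree is a cruder estimate.
    kib = info.get("MemAvailable", info.get("MemFree", 0))
    return kib // 1024


# Illustrative values only.
SAMPLE = (
    "MemTotal:        1883564 kB\n"
    "MemFree:           71500 kB\n"
    "MemAvailable:     102400 kB\n"
)

print(available_mib(SAMPLE))  # 100
```

On a real node you would call `available_mib(open("/proc/meminfo").read())`. How much headroom is enough depends on the services running, so treat any threshold as a judgment call.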

Add rules to the default security group to allow ICMP and open port 22:

[root@node1 ~]# nova secgroup-add-rule default icmp -1 -1 0.0.0.0/0

+-------------+-----------+---------+-----------+--------------+

| IP Protocol | From Port | To Port | IP Range | Source Group |

+-------------+-----------+---------+-----------+--------------+

| icmp | -1 | -1 | 0.0.0.0/0 | |

+-------------+-----------+---------+-----------+--------------+

[root@node1 ~]# nova secgroup-add-rule default tcp 22 22 0.0.0.0/0

+-------------+-----------+---------+-----------+--------------+

| IP Protocol | From Port | To Port | IP Range | Source Group |

+-------------+-----------+---------+-----------+--------------+

| tcp | 22 | 22 | 0.0.0.0/0 | |

+-------------+-----------+---------+-----------+--------------+
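To make the effect of these two rules concrete, here is a deliberately simplified model of how inbound traffic is matched against them. It is an illustration only, not how Neutron implements security groups: it ignores rules that reference a source group, egress rules, and connection tracking. The names `Rule`, `RULES`, and `is_allowed` are hypothetical.

```python
import ipaddress
from collections import namedtuple

Rule = namedtuple("Rule", "protocol from_port to_port cidr")

# The two rules added above. For ICMP, -1/-1 means every type and code.
RULES = [
    Rule("icmp", -1, -1, "0.0.0.0/0"),
    Rule("tcp", 22, 22, "0.0.0.0/0"),
]


def is_allowed(protocol, port, source_ip, rules=RULES):
    """Return True if any rule admits this inbound packet (simplified model)."""
    source = ipaddress.ip_address(source_ip)
    for rule in rules:
        if rule.protocol != protocol:
            continue
        if source not in ipaddress.ip_network(rule.cidr):
            continue
        # -1 is a wildcard; otherwise the port must fall inside the range.
        if rule.from_port == -1 or rule.from_port <= port <= rule.to_port:
            return True
    return False


print(is_allowed("icmp", None, "192.168.8.150"))  # True: ping is permitted
print(is_allowed("tcp", 22, "192.168.8.150"))     # True: SSH is permitted
print(is_allowed("tcp", 80, "192.168.8.150"))     # False: no rule for port 80
```

This is why the later steps can ping the instance and log in over SSH, while any other inbound TCP port stays closed until a rule is added for it.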

Before creating the virtual machine, confirm the flavor (comparable to an EC2 instance type), the image (EC2 AMI), the network (EC2 VPC), and the security group (EC2 security group):

[root@node1 ~]# nova flavor-list

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| 1 | m1.tiny | 512 | 1 | 0 | | 1 | 1.0 | True |

| 2 | m1.small | 2048 | 20 | 0 | | 1 | 1.0 | True |

| 3 | m1.medium | 4096 | 40 | 0 | | 2 | 1.0 | True |

| 4 | m1.large | 8192 | 80 | 0 | | 4 | 1.0 | True |

| 5 | m1.xlarge | 16384 | 160 | 0 | | 8 | 1.0 | True |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

[root@node1 ~]# nova image-list

+--------------------------------------+--------+--------+--------+

| ID | Name | Status | Server |

+--------------------------------------+--------+--------+--------+

| c25c409d-6e7d-4855-9322-41cc1141c350 | cirros | ACTIVE | |

+--------------------------------------+--------+--------+--------+

[root@node1 ~]# neutron net-list

+--------------------------------------+------+-----------------------------------------------------+

| id | name | subnets |

+--------------------------------------+------+-----------------------------------------------------+

| 7e68ff60-b8a0-4506-b47f-9026dea6a682 | flat | 9b95b3da-c7ec-4165-b37a-d3ea86d4ffe6 192.168.0.0/24 |

+--------------------------------------+------+-----------------------------------------------------+

[root@node1 ~]# nova secgroup-list

+--------------------------------------+---------+------------------------+

| Id | Name | Description |

+--------------------------------------+---------+------------------------+

| a67a675a-9dcb-4940-a189-bc9d13134a79 | default | Default security group |

+--------------------------------------+---------+------------------------+

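Create a virtual machine with the m1.tiny flavor and the cirros image (downloaded with wget earlier). The network ID is the one reported by neutron net-list, the security group is the default one, the key pair is the one just created, and the instance is named hello-instance: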

[root@node1 ~]# nova boot --flavor m1.tiny --image cirros --nic net-id=a2263708-13d2-490d-a4bf-1f55d55f32ef --security-group default --key-name mykey hello-instance

+--------------------------------------+-----------------------------------------------+

| Property | Value |

+--------------------------------------+-----------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | NKDqtk6N6sD5 |

| config_drive | |

| created | 2016-11-14T15:57:48Z |

| flavor | m1.tiny (1) |

| hostId | |

| id | eaaa70b1-afe0-4be6-93e6-9b3150f2b899 |

| image | cirros (cca2b796-6c5e-4746-b697-f126604f7063) |

| key_name | mykey |

| metadata | {} |

| name | hello-instance |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | default |

| status | BUILD |

| tenant_id | 7af679b7d62b49908822bbdf6d36428d |

| updated | 2016-11-14T15:57:48Z |

| user_id | 331d3cf73bcc4b03914bb44d4cb85041 |

+--------------------------------------+-----------------------------------------------+
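Each argument to nova boot comes from one of the listings gathered beforehand. When the same launch has to be repeated, it can be convenient to assemble the command from named values rather than retyping it. The sketch below only builds and prints the argument list and does not execute anything; the function name `nova_boot_command` is an assumption, and the network ID is the one used in the command above.

```python
import shlex


def nova_boot_command(name, flavor, image, net_id, security_group, key_name):
    """Build the argv list for the `nova boot` invocation used above."""
    return [
        "nova", "boot",
        "--flavor", flavor,                 # from `nova flavor-list`
        "--image", image,                   # from `nova image-list`
        "--nic", "net-id=" + net_id,        # from `neutron net-list`
        "--security-group", security_group, # from `nova secgroup-list`
        "--key-name", key_name,             # from `nova keypair-list`
        name,
    ]


argv = nova_boot_command(
    name="hello-instance",
    flavor="m1.tiny",
    image="cirros",
    net_id="a2263708-13d2-490d-a4bf-1f55d55f32ef",
    security_group="default",
    key_name="mykey",
)

# Quote each argument so the printed line is safe to paste into a shell.
print(" ".join(shlex.quote(arg) for arg in argv))
```

The list form can be handed to `subprocess.run(argv)` on a node where the nova client is installed and the credentials file has been sourced.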

Check the status of the new virtual machine:

[root@node1 ~]# nova list

+--------------------------------------+----------------+--------+------------+-------------+--------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+----------------+--------+------------+-------------+--------------------+

| 4ed8bb06-1f95-4483-ac76-f99460222fdd | hello-instance | ACTIVE | - | Running | flat=192.168.8.102 |

+--------------------------------------+----------------+--------+------------+-------------+--------------------+

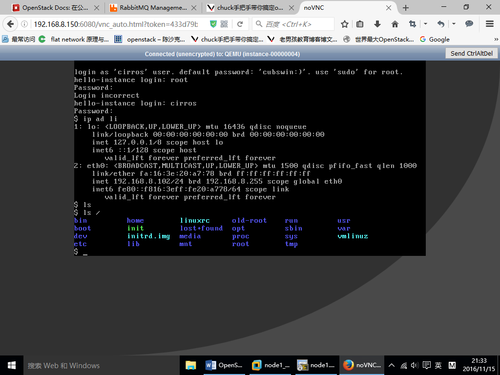

Ping the new virtual machine, then connect to it over SSH:

[root@node1 ~]# ping 192.168.8.102

PING 192.168.8.102 (192.168.8.102) 56(84) bytes of data.

64 bytes from 192.168.8.102: icmp_seq=1 ttl=64 time=3.89 ms

64 bytes from 192.168.8.102: icmp_seq=2 ttl=64 time=0.969 ms

64 bytes from 192.168.8.102: icmp_seq=3 ttl=64 time=1.68 ms

64 bytes from 192.168.8.102: icmp_seq=4 ttl=64 time=1.50 ms

64 bytes from 192.168.8.102: icmp_seq=5 ttl=64 time=1.18 ms

[root@node1 ~]# ssh cirros@192.168.8.102

$ ip ad li

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether fa:16:3e:20:a7:78 brd ff:ff:ff:ff:ff:ff

inet 192.168.8.102/24 brd 192.168.8.255 scope global eth0

inet6 fe80::f816:3eff:fe20:a778/64 scope link

valid_lft forever preferred_lft forever

$ ls /

bin home linuxrc old-root run usr

boot init lost+found opt sbin var

dev initrd.img media proc sys vmlinuz

etc lib mnt root tmp

Generate a noVNC URL to reach the virtual machine's console from a web browser:

[root@node1 ~]# nova get-vnc-console hello-instance novnc

+-------+------------------------------------------------------------------------------------+

| Type | Url |

+-------+------------------------------------------------------------------------------------+

| novnc | http://192.168.8.150:6080/vnc_auto.html?token=433d79b8-b651-454a-818d-41d9cafbca0a |

+-------+------------------------------------------------------------------------------------+
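The returned URL combines the address and port of the noVNC proxy on the control node with a one-time token. If the console does not open, it helps to pull these parts out and check that the host and port are reachable from your browser. The following is a small sketch with the standard urllib.parse module; the function name `describe_console_url` is hypothetical.

```python
from urllib.parse import urlparse, parse_qs


def describe_console_url(url):
    """Split a noVNC console URL into host, port, path, and token."""
    parts = urlparse(url)
    query = parse_qs(parts.query)
    return {
        "host": parts.hostname,
        "port": parts.port,
        "path": parts.path,
        # parse_qs returns a list per key; take the first value if present.
        "token": query.get("token", [None])[0],
    }


URL = ("http://192.168.8.150:6080/vnc_auto.html"
       "?token=433d79b8-b651-454a-818d-41d9cafbca0a")

print(describe_console_url(URL))
```

For the URL above, the host is 192.168.8.150 and the port is 6080, so that port on the control node must be reachable from the machine running the browser.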

5. Dashboard demonstration

5.1 Edit the dashboard configuration file

[root@linux-node1 ~]# vim /etc/openstack-dashboard/local_settings

ALLOWED_HOSTS = ['*', 'localhost']  # which hosts may access the dashboard

OPENSTACK_HOST = "192.168.56.11"  # change to the Keystone address

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"  # the role created earlier in Keystone

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': '192.168.56.11:11211',
    }
}  # enable memcached

TIME_ZONE = "Asia/Shanghai"  # set the time zone

Restart Apache:

[root@linux-node1 ~]# systemctl restart httpd

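5.2 Using the dashboard

5.2.1 Log in to the dashboard

Log in as the Keystone demo user (all instances are visible only with admin privileges).

5.2.2 Delete the previous virtual machine and create a new one

The actions available for an instance are:

Associate floating IP: an elastic IP (EIP)

Attach/detach interface: attach or detach a network interface

Edit instance: change the instance's parameters

Edit security groups: change the security group settings

Console: the noVNC console

View log: view console.log

Pause instance: pause the virtual machine

Suspend instance: save its state

Shelve instance: set the instance aside temporarily

Resize instance: change its flavor (type)

Lock/unlock instance: lock or unlock the instance

Soft reboot instance: a normal reboot, stop then start

Hard reboot instance: similar to a power-cycle

Shut off instance: shut down the instance

Rebuild instance: build the same instance again

Terminate instance: delete the instance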

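5.2.3 Launch instance

It finally works. I hit plenty of pitfalls along the way, but with the help of friendly netizens and friends they were all filled in fairly smoothly. My sincere thanks to everyone. I have no way to repay the kindness other than to write up this hands-on process and share it with other readers; the theory part will follow later. This article drew on the following blog and the official documentation:

老男孩教育 blog: http://blog.oldboyedu.com/openstack/

This article comes from the "云计算与大数据" blog; please keep this attribution: http://linuxzkq.blog.51cto.com/9379412/1876983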
