
Hands-On with the MHA High-Availability Cluster

MHA High-Availability Cluster

===============================================================================

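Overview:

This chapter covers MHA, a high-availability solution for MySQL, including:

What MHA does;

The MHA cluster architecture and how it works;

Hands-on MHA deployment and failover testing on CentOS 7.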

===============================================================================

About MHA

★Features:

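MHA (Master HA) is an open-source MySQL high-availability tool that adds automated master failover to MySQL master-slave replication. When the master fails, MHA promotes the slave holding the most recent data to be the new master; during this process it gathers additional information from the other slaves to avoid data-consistency problems. MHA also supports online master switchover, i.e. swapping the master and slave roles on demand.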
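As a rough illustration of "the slave holding the most recent data", the bash sketch below picks the slave whose received binlog coordinates are furthest ahead. It is not MHA's implementation (MHA is written in Perl and also honors settings such as candidate_master); the input format (one line per slave: host, Master_Log_File and Read_Master_Log_Pos as reported by SHOW SLAVE STATUS) and the function name pick_latest_slave are assumptions made for this example.

```bash
# Hypothetical helper: print the host whose (binlog file, position) pair is highest.
# Input on stdin, one line per slave: "<host> <master_log_file> <read_master_log_pos>"
pick_latest_slave() {
    local best_host="" best_seq=-1 best_pos=-1
    local host file pos file_seq
    while read -r host file pos; do
        [ -z "$host" ] && continue
        # "master-log.000001" -> 1 (take the numeric suffix, force base 10)
        file_seq=$((10#${file##*.}))
        # A later binlog file wins; within the same file, the larger position wins
        if (( file_seq > best_seq || (file_seq == best_seq && pos > best_pos) )); then
            best_host=$host best_seq=$file_seq best_pos=$pos
        fi
    done
    printf '%s\n' "$best_host"
}
```

For example, feeding it "10.1.252.73 master-log.000001 709" and "10.1.252.156 master-log.000001 245" selects 10.1.252.73.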

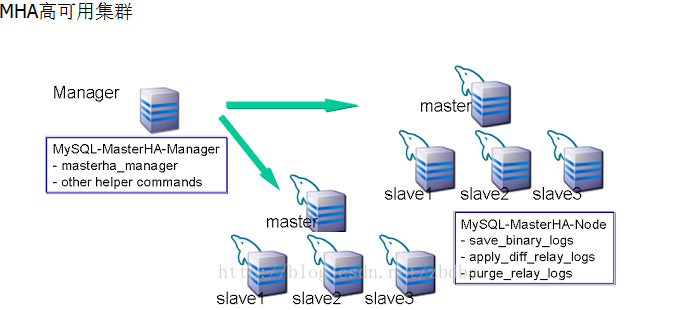

★MHA has two roles: MHA Manager (the management node) and MHA Node (the data node).

☉MHA Manager

Usually deployed on a separate machine, where it can manage several master/slave clusters; each master/slave cluster is called an application.

☉MHA Node

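Runs on every MySQL server (master/slave/manager). It provides scripts that parse and purge logs (for example save_binary_logs, apply_diff_relay_logs and purge_relay_logs), which speed up failover.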

★MHA high-availability cluster architecture diagram

★How MHA works and where it applies:

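MHA Manager can run on a dedicated machine and manage several master-slave clusters, or it can be deployed on one of the slaves. MHA Node runs on every MySQL server. MHA Manager probes the master of each cluster at regular intervals; when the master fails, it automatically promotes the slave with the most recent data to be the new master and repoints all the other slaves to it. The failover can be transparent to applications, provided clients reach the master through an address that moves with it (see the virtual IP item under Further Work).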
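MHA's parameter documentation describes ping_interval as how often the manager pings the master, and the master is treated as dead after three consecutive missed pings. The bash sketch below reproduces only that counting idea; the probe command you pass in (for example mysqladmin ping against the master) and the function name wait_for_master_failure are assumptions, not MHA code.

```bash
# Hypothetical monitor loop: run a probe every <interval> seconds and return once
# <max_failures> probes in a row have failed. Usage:
#   wait_for_master_failure <interval> <max_failures> <probe command...>
wait_for_master_failure() {
    local interval=$1 max_failures=$2
    shift 2
    local failures=0
    while (( failures < max_failures )); do
        if "$@" >/dev/null 2>&1; then
            failures=0                  # a successful probe resets the counter
        else
            failures=$((failures + 1))  # count consecutive failures only
        fi
        # Do not sleep after the final failed probe
        (( failures < max_failures )) && sleep "$interval"
    done
    echo "master considered dead after $failures consecutive failed probes"
}
```

With the ping_interval=1 used later in this article, a call could look like: wait_for_master_failure 1 3 mysqladmin --host=10.1.252.161 --user=mhaadmin --password=mhapass ping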

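During automatic failover, MHA tries to save the binary logs from the crashed master to minimize data loss, but this is not always possible. For example, if the master suffers a hardware failure or cannot be reached over SSH, MHA cannot save its binary logs and fails over anyway, losing the most recent data. Semi-synchronous replication, available since MySQL 5.5, greatly reduces this risk, and MHA can be combined with it: even if only one slave has received the latest binary log events, MHA can apply them to all the other slaves, keeping every node consistent. In addition, a slave can deliberately be kept behind the master, so that data lost through an accidentally dropped database can be recovered from that slave's logs.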

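MHA currently targets mainly one-master, multiple-slave topologies. An MHA setup requires at least three database servers in the replication cluster (one master and two slaves): one acts as the master, one as the standby master, and one as a plain slave. Because of this three-server minimum, Taobao modified MHA to reduce hardware costs; its TMHA variant already supports one master with a single slave.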
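Once the MHA application config exists (/etc/masterha/app1.cnf in this article), the three-server minimum can be checked before starting the manager. This is a hypothetical bash pre-flight check; it assumes the server sections are named [server1], [server2], ... as in this article, which MHA itself does not require.

```bash
# Hypothetical check: count numbered [serverN] sections in an MHA config
# and fail if there are fewer than <min> (default 3: one master + two slaves).
check_min_servers() {
    local conf=$1 min=${2:-3} count
    count=$(grep -c '^\[server[0-9][0-9]*\]' "$conf")
    if (( count < min )); then
        echo "only $count server(s) in $conf, need at least $min" >&2
        return 1
    fi
    echo "$count servers configured"
}
```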

===============================================================================

MHA Deployment and Failover Testing

===============================================================================

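Lab environment

Prepare four virtual machines (CentOS 7): one as the MHA Manager, one as the master, and two as slaves;

The manager host needs the MHA Manager package, and all hosts need the MHA Node package;

The master, slave1 and slave2 form the master-slave replication cluster.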

IP address plan:

| Server | IP address | Hostname |
|---|---|---|
| MHA Manager | 10.1.252.153 | node1.taotao.com |
| MariaDB master | 10.1.252.161 | node2.taotao.com |
| MariaDB slave1 | 10.1.252.73 | node3.taotao.com |
| MariaDB slave2 | 10.1.252.156 | node4.taotao.com |

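I. Prepare the MySQL Replication Environment

MHA has specific requirements for the MySQL replication environment: for example, every node must have binary logging and relay logging enabled, every slave must explicitly enable read-only, and relay_log_purge must be turned off.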
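These requirements can be spot-checked on a slave's option file before MHA is started. The bash helper below is a hypothetical sketch: it only greps the file (treating '-' and '_' in option names as equivalent, as MySQL/MariaDB do), ignores which section a line sits in, and does not query the running server, so SHOW VARIABLES remains the authoritative check.

```bash
# Hypothetical checker: report slave settings MHA expects that are missing from a my.cnf file.
check_mha_slave_cnf() {
    local cnf=$1 missing=0 pattern normalized
    # Drop comments and whitespace, lower-case everything, map '-' to '_'
    normalized=$(sed -e 's/#.*//' -e 's/[[:space:]]//g' "$cnf" | tr 'A-Z-' 'a-z_')
    for pattern in '^log_bin(=|$)' '^relay_log=' '^server_id=' \
                   '^relay_log_purge=(0|off)$' '^read_only=(1|on)$'; do
        if ! grep -Eq "$pattern" <<<"$normalized"; then
            echo "missing or wrong: $pattern"
            missing=1
        fi
    done
    return "$missing"
}
```

Run against the slave configuration used in this article, it reports nothing and returns 0; against the master's file it flags relay_log_purge and read_only, which is expected for a master.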

1. On every node, configure /etc/hosts so that all nodes can resolve each other's hostnames to IP addresses:

    10.1.252.153 node1.taotao.com node1
    10.1.252.161 node2.taotao.com node2
    10.1.252.73  node3.taotao.com node3
    10.1.252.156 node4.taotao.com node4
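Instead of editing each node's /etc/hosts by hand, the entries above could be appended idempotently with a small bash function. This is a sketch; the function name is hypothetical, and the target file is a parameter so it can be tried on a scratch copy first.

```bash
# Hypothetical helper: append the cluster's name mappings to a hosts file,
# skipping lines that are already present, so it is safe to re-run.
add_cluster_hosts() {
    local hosts_file=${1:-/etc/hosts} entry
    local entries=(
        "10.1.252.153 node1.taotao.com node1"
        "10.1.252.161 node2.taotao.com node2"
        "10.1.252.73 node3.taotao.com node3"
        "10.1.252.156 node4.taotao.com node4"
    )
    for entry in "${entries[@]}"; do
        # -x: match the whole line, -F: fixed string (dots are not regex wildcards)
        grep -qxF "$entry" "$hosts_file" 2>/dev/null || printf '%s\n' "$entry" >> "$hosts_file"
    done
}
```

Run it as root on each node with no argument to update /etc/hosts in place.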

2. Synchronize the clocks of all nodes, then verify:

    [root@node1 ~]# date; ansible nodes -m shell -a "date"
    Fri Dec 9 11:30:06 CST 2016
    10.1.252.156 | SUCCESS | rc=0 >>
    Fri Dec 9 11:30:06 CST 2016
    10.1.252.161 | SUCCESS | rc=0 >>
    Fri Dec 9 11:30:07 CST 2016
    10.1.252.73 | SUCCESS | rc=0 >>
    Fri Dec 9 11:30:07 CST 2016
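Reading the dates by eye works for four hosts; a hypothetical bash helper can compute the spread instead. It assumes you collect Unix timestamps (for example with ansible nodes -m shell -a "date +%s"), keeps only the purely numeric lines (so ansible's "| SUCCESS |" headers are skipped), and fails if the spread exceeds a threshold in seconds.

```bash
# Hypothetical check: print the max-min spread of Unix timestamps read from stdin
# and succeed only if it is within <max_allowed> seconds (default 1).
check_clock_skew() {
    local max_allowed=${1:-1} skew
    skew=$(awk '
        /^[0-9]+$/ {
            if (n++ == 0) { min = $1; max = $1 }
            if ($1 + 0 < min + 0) min = $1
            if ($1 + 0 > max + 0) max = $1
        }
        END { print (n ? max - min : 0) }')
    echo "clock skew: ${skew}s"
    (( skew <= max_allowed ))
}
```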

3. Configuration of the initial master (node2):

    [root@node2 ~]# vim /etc/my.cnf
    # the following options belong in the [mysqld] section
    [mysqld]
    skip_name_resolve = ON
    innodb_file_per_table = ON
    log_bin=master-log
    server-id=1
    relay-log=relay-log
    [root@node2 ~]# systemctl start mariadb

Configuration for all slave nodes (node3, node4):

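    [root@node3 ~]# vim /etc/my.cnf
    [mysqld]
    skip_name_resolve = ON
    innodb_file_per_table = ON
    log_bin=master-log
    # server-id must be unique on every node of the replication cluster (e.g. 3 on node4)
    server-id=2
    relay-log=relay-log
    # disable automatic purging of relay logs
    relay-log-purge=0
    # read-only
    read-only=1
    # start the service
    [root@node3 ~]# systemctl start mariadb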
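The article simply numbers the nodes 1, 2, 3. As an alternative convention (not part of the original setup), a unique server-id can be derived from each node's IPv4 address: server_id accepts values up to 4294967295, and distinct addresses always map to distinct numbers. A minimal bash sketch with a hypothetical function name:

```bash
# Hypothetical helper: convert a dotted IPv4 address into a 32-bit integer
# suitable for server-id (distinct addresses give distinct ids).
ip_to_server_id() {
    local IFS=.
    local -a o
    read -r -a o <<<"$1"
    # 10# forces base 10 so an octet such as "073" is not read as octal
    echo $(( (10#${o[0]} << 24) + (10#${o[1]} << 16) + (10#${o[2]} << 8) + 10#${o[3]} ))
}
```

For instance, ip_to_server_id 10.1.252.73 prints 167902281, which would go into node3's my.cnf as server-id=167902281.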

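4. Create an administrative account with all privileges and an account with replication privileges. It is enough to run the GRANT statements on the master: the slaves are later pointed at binlog position 245, which precedes these statements, so they replicate the grants automatically.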

    MariaDB [(none)]> SHOW MASTER STATUS;
    +-------------------+----------+--------------+------------------+
    | File              | Position | Binlog_Do_DB | Binlog_Ignore_DB |
    +-------------------+----------+--------------+------------------+
    | master-log.000001 |      245 |              |                  |
    +-------------------+----------+--------------+------------------+
    1 row in set (0.01 sec)

    # Create an account with replication privileges
    MariaDB [(none)]> GRANT REPLICATION SLAVE,REPLICATION CLIENT ON *.* TO 'repluser'@'10.1.%.%' IDENTIFIED BY 'replpass';
    Query OK, 0 rows affected (0.00 sec)

    MariaDB [(none)]> FLUSH PRIVILEGES;
    Query OK, 0 rows affected (0.00 sec)

    # Create an account with all privileges
    MariaDB [(none)]> GRANT ALL ON *.* TO 'mhaadmin'@'10.1.%.%' IDENTIFIED BY 'mhapass';
    Query OK, 0 rows affected (0.00 sec)

    MariaDB [(none)]> FLUSH PRIVILEGES;
    Query OK, 0 rows affected (0.01 sec)

    MariaDB [(none)]> SHOW MASTER STATUS;
    +-------------------+----------+--------------+------------------+
    | File              | Position | Binlog_Do_DB | Binlog_Ignore_DB |
    +-------------------+----------+--------------+------------------+
    | master-log.000001 |      709 |              |                  |
    +-------------------+----------+--------------+------------------+
    1 row in set (0.00 sec)

5. On each slave, connect to the master, start the IO and SQL threads, and confirm that replication works:

    MariaDB [(none)]> CHANGE MASTER TO   # connect to the master
        -> MASTER_HOST='10.1.252.161',
        -> MASTER_USER='repluser',
        -> MASTER_PASSWORD='replpass',
        -> MASTER_LOG_FILE='master-log.000001',
        -> MASTER_LOG_POS=245;
    Query OK, 0 rows affected (0.07 sec)

    MariaDB [(none)]> START SLAVE;   # start the replication threads
    Query OK, 0 rows affected (0.01 sec)

    MariaDB [(none)]> show slave status\G
    *************************** 1. row ***************************
                   Slave_IO_State: Waiting for master to send event
                      Master_Host: 10.1.252.161
                      Master_User: repluser
                      Master_Port: 3306
                    Connect_Retry: 60
                  Master_Log_File: master-log.000001
              Read_Master_Log_Pos: 709
                   Relay_Log_File: relay-log.000002
                    Relay_Log_Pos: 994
            Relay_Master_Log_File: master-log.000001
                 Slave_IO_Running: Yes
                Slave_SQL_Running: Yes
                  Replicate_Do_DB:
              Replicate_Ignore_DB:
               Replicate_Do_Table:
           Replicate_Ignore_Table:
          Replicate_Wild_Do_Table:
      Replicate_Wild_Ignore_Table:
                       Last_Errno: 0
                       Last_Error:
                     Skip_Counter: 0
              Exec_Master_Log_Pos: 709
                  Relay_Log_Space: 1282
                  Until_Condition: None
                   Until_Log_File:
                    Until_Log_Pos: 0
               Master_SSL_Allowed: No
               Master_SSL_CA_File:
               Master_SSL_CA_Path:
                  Master_SSL_Cert:
                Master_SSL_Cipher:
                   Master_SSL_Key:
            Seconds_Behind_Master: 0
    Master_SSL_Verify_Server_Cert: No
                    Last_IO_Errno: 0
                    Last_IO_Error:
                   Last_SQL_Errno: 0
                   Last_SQL_Error:
      Replicate_Ignore_Server_Ids:
                 Master_Server_Id: 1
    1 row in set (0.00 sec)

    # list the accounts: the grants made on the master have been replicated
    MariaDB [(none)]> SELECT user,host FROM mysql.user;
    +----------+-----------+
    | user     | host      |
    +----------+-----------+
    | mhaadmin | 10.1.%.%  |   # account granted above
    | repluser | 10.1.%.%  |   # account granted above
    | root     | 127.0.0.1 |
    | root     | ::1       |
    |          | centos7   |
    | root     | centos7   |
    |          | localhost |
    | root     | localhost |
    +----------+-----------+
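The key lines in the status report above are Slave_IO_Running and Slave_SQL_Running. A hypothetical bash helper can check them non-interactively, for example with mysql -e 'SHOW SLAVE STATUS\G' | slave_threads_ok; the function name and output format are assumptions for this sketch.

```bash
# Hypothetical check: read SHOW SLAVE STATUS\G output on stdin, print both thread
# states, and exit 0 only if both replication threads report "Yes".
slave_threads_ok() {
    awk -F': ' '
        { gsub(/^[ \t]+/, "", $1) }            # strip the right-alignment padding
        $1 == "Slave_IO_Running"  { io  = $2 }
        $1 == "Slave_SQL_Running" { sql = $2 }
        END {
            printf "IO=%s SQL=%s\n", io, sql
            exit !(io == "Yes" && sql == "Yes")
        }'
}
```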

Master-slave replication is now configured; next, install and configure MHA.

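II. Install and Configure MHA

1. Set up SSH mutual trust: on the manager, generate a key pair and authorize it for logins to the local host, then copy the private key and authorized_keys to the other nodes: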

    # Generate a key pair
    [root@node1 ~]# ssh-keygen -t rsa -P ''
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    /root/.ssh/id_rsa already exists.
    Overwrite (y/n)? y
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    7a:0f:4e:4f:95:68:aa:0b:c2:cf:0b:e8:f1:12:ff:ac root@node1.taotao.com
    The key's randomart image is:
    (randomart omitted)
    [root@node1 ~]# cat .ssh/id_rsa.pub > .ssh/authorized_keys
    [root@node1 ~]# chmod go= .ssh/authorized_keys
    [root@node1 ~]# ll .ssh
    total 16
    -rw-------. 1 root root  403 Dec 9 13:18 authorized_keys
    -rw-------  1 root root 1675 Dec 9 13:17 id_rsa
    -rw-r--r--  1 root root  403 Dec 9 13:17 id_rsa.pub
    -rw-r--r--  1 root root 1749 Dec 9 11:20 known_hosts
    # Copy the private key and authorized_keys to the other nodes to establish mutual trust
    [root@node1 ~]# scp -p .ssh/id_rsa .ssh/authorized_keys root@node2.taotao.com:/root/.ssh/
    [root@node1 ~]# scp -p .ssh/id_rsa .ssh/authorized_keys root@node3.taotao.com:/root/.ssh/
    [root@node1 ~]# scp -p .ssh/id_rsa .ssh/authorized_keys root@node4.taotao.com:/root/.ssh/

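2. Install the mha4mysql-manager and mha4mysql-node packages on the manager node (node1); the other nodes only need mha4mysql-node. On CentOS 7 the el6 packages can be used directly, and the MHA Manager and MHA Node versions do not have to match.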

    [root@node1 MHA]# ls
    mha4mysql-manager-0.56-0.el6.noarch.rpm  mha4mysql-node-0.56-0.el6.noarch.rpm
    # Manager node (node1)
    [root@node1 MHA]# yum install ./mha4mysql-node-0.56-0.el6.noarch.rpm -y
    [root@node1 MHA]# yum install ./mha4mysql-manager-0.56-0.el6.noarch.rpm -y
    # Remaining nodes (node2, node3, node4)
    [root@node2 MHA]# yum install ./mha4mysql-node-0.56-0.el6.noarch.rpm -y

3. Initialize MHA. The manager needs a dedicated configuration file for each master/slave cluster it monitors; create the MHA directory and the configuration file by hand:

    [root@node1 ~]# mkdir /etc/masterha
    [root@node1 ~]# vim /etc/masterha/app1.cnf
    [server default]
    user=mhaadmin
    password=mhapass
    manager_workdir=/data/masterha/app1
    manager_log=/data/masterha/app1/manager.log
    remote_workdir=/data/masterha/app1
    ssh_user=root
    repl_user=repluser
    repl_password=replpass
    ping_interval=1

    [server1]
    hostname=10.1.252.161
    ssh_port=22
    candidate_master=1

    [server2]
    hostname=10.1.252.73
    ssh_port=22
    candidate_master=1

    [server3]
    hostname=10.1.252.156
    ssh_port=22
    candidate_master=1
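When hosts are added or replaced, regenerating this file keeps the server sections consistent. The bash function below is a hypothetical generator that mirrors the keys written by hand above; the environment variable names for credentials (MHA_USER, MHA_PASS, REPL_USER, REPL_PASS) are assumptions introduced for this sketch, with the article's values as defaults.

```bash
# Hypothetical generator for an MHA application config.
# Usage: gen_mha_app_conf <app_name> <host1> [host2 ...] > /etc/masterha/<app_name>.cnf
gen_mha_app_conf() {
    local app=$1; shift
    local i=1 host
    cat <<EOF
[server default]
user=${MHA_USER:-mhaadmin}
password=${MHA_PASS:-mhapass}
manager_workdir=/data/masterha/$app
manager_log=/data/masterha/$app/manager.log
remote_workdir=/data/masterha/$app
ssh_user=root
repl_user=${REPL_USER:-repluser}
repl_password=${REPL_PASS:-replpass}
ping_interval=1
EOF
    # One numbered [serverN] section per host, in the order given
    for host in "$@"; do
        printf '\n[server%d]\nhostname=%s\nssh_port=22\ncandidate_master=1\n' "$i" "$host"
        i=$((i + 1))
    done
}
```

For example, gen_mha_app_conf app1 10.1.252.161 10.1.252.73 10.1.252.156 reproduces the layout above; rerun the SSH and replication checks after regenerating.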

4. Check that SSH mutual trust works between all nodes:

    [root@node1 ~]# masterha_check_ssh --conf=/etc/masterha/app1.cnf
    Fri Dec 9 15:42:30 2016 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.
    Fri Dec 9 15:42:30 2016 - [info] Reading application default configuration from /etc/masterha/app1.cnf..
    Fri Dec 9 15:42:30 2016 - [info] Reading server configuration from /etc/masterha/app1.cnf..
    Fri Dec 9 15:42:30 2016 - [info] Starting SSH connection tests..
    Fri Dec 9 15:42:31 2016 - [debug]
    Fri Dec 9 15:42:30 2016 - [debug]  Connecting via SSH from root@10.1.252.161(10.1.252.161:22) to root@10.1.252.73(10.1.252.73:22)..
    Fri Dec 9 15:42:31 2016 - [debug]   ok.
    Fri Dec 9 15:42:31 2016 - [debug]  Connecting via SSH from root@10.1.252.161(10.1.252.161:22) to root@10.1.252.156(10.1.252.156:22)..
    Fri Dec 9 15:42:31 2016 - [debug]   ok.
    Fri Dec 9 15:42:32 2016 - [debug]
    Fri Dec 9 15:42:31 2016 - [debug]  Connecting via SSH from root@10.1.252.73(10.1.252.73:22) to root@10.1.252.161(10.1.252.161:22)..
    Fri Dec 9 15:42:31 2016 - [debug]   ok.
    Fri Dec 9 15:42:31 2016 - [debug]  Connecting via SSH from root@10.1.252.73(10.1.252.73:22) to root@10.1.252.156(10.1.252.156:22)..
    Fri Dec 9 15:42:32 2016 - [debug]   ok.
    Fri Dec 9 15:42:32 2016 - [debug]
    Fri Dec 9 15:42:31 2016 - [debug]  Connecting via SSH from root@10.1.252.156(10.1.252.156:22) to root@10.1.252.161(10.1.252.161:22)..
    Fri Dec 9 15:42:32 2016 - [debug]   ok.
    Fri Dec 9 15:42:32 2016 - [debug]  Connecting via SSH from root@10.1.252.156(10.1.252.156:22) to root@10.1.252.73(10.1.252.73:22)..
    Fri Dec 9 15:42:32 2016 - [debug]   ok.
    Fri Dec 9 15:42:32 2016 - [info] All SSH connection tests passed successfully.   # SSH trust between all nodes is OK

Then check the connection settings of the managed MySQL replication cluster:

    [root@node1 ~]# masterha_check_repl --conf=/etc/masterha/app1.cnf
    Fri Dec 9 15:46:00 2016 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.
    Fri Dec 9 15:46:00 2016 - [info] Reading application default configuration from /etc/masterha/app1.cnf..
    Fri Dec 9 15:46:00 2016 - [info] Reading server configuration from /etc/masterha/app1.cnf..
    Fri Dec 9 15:46:00 2016 - [info] MHA::MasterMonitor version 0.56.
    Creating directory /data/masterha/app1.. done.
    Fri Dec 9 15:46:00 2016 - [info] GTID failover mode = 0
    Fri Dec 9 15:46:00 2016 - [info] Dead Servers:
    Fri Dec 9 15:46:00 2016 - [info] Alive Servers:
    Fri Dec 9 15:46:00 2016 - [info]   10.1.252.161(10.1.252.161:3306)
    Fri Dec 9 15:46:00 2016 - [info]   10.1.252.73(10.1.252.73:3306)
    Fri Dec 9 15:46:00 2016 - [info]   10.1.252.156(10.1.252.156:3306)
    Fri Dec 9 15:46:00 2016 - [info] Alive Slaves:
    Fri Dec 9 15:46:00 2016 - [info]   10.1.252.73(10.1.252.73:3306)  Version=5.5.44-MariaDB-log (oldest major version between slaves) log-bin:enabled
    Fri Dec 9 15:46:00 2016 - [info]     Replicating from 10.1.252.161(10.1.252.161:3306)
    Fri Dec 9 15:46:00 2016 - [info]     Primary candidate for the new Master (candidate_master is set)
    Fri Dec 9 15:46:00 2016 - [info]   10.1.252.156(10.1.252.156:3306)  Version=5.5.44-MariaDB-log (oldest major version between slaves) log-bin:enabled
    Fri Dec 9 15:46:00 2016 - [info]     Replicating from 10.1.252.161(10.1.252.161:3306)
    Fri Dec 9 15:46:00 2016 - [info]     Primary candidate for the new Master (candidate_master is set)
    Fri Dec 9 15:46:00 2016 - [info] Current Alive Master: 10.1.252.161(10.1.252.161:3306)
    Fri Dec 9 15:46:00 2016 - [info] Checking slave configurations..
    Fri Dec 9 15:46:00 2016 - [warning]  relay_log_purge=0 is not set on slave 10.1.252.73(10.1.252.73:3306).
    Fri Dec 9 15:46:00 2016 - [warning]  relay_log_purge=0 is not set on slave 10.1.252.156(10.1.252.156:3306).
    Fri Dec 9 15:46:00 2016 - [info] Checking replication filtering settings..
    Fri Dec 9 15:46:00 2016 - [info]  binlog_do_db= , binlog_ignore_db=
    Fri Dec 9 15:46:00 2016 - [info]  Replication filtering check ok.
    Fri Dec 9 15:46:00 2016 - [info] GTID (with auto-pos) is not supported
    Fri Dec 9 15:46:00 2016 - [info] Starting SSH connection tests..
    Fri Dec 9 15:46:02 2016 - [info] All SSH connection tests passed successfully.
    Fri Dec 9 15:46:02 2016 - [info] Checking MHA Node version..
    Fri Dec 9 15:46:03 2016 - [info]  Version check ok.
    Fri Dec 9 15:46:03 2016 - [info] Checking SSH publickey authentication settings on the current master..
    Fri Dec 9 15:46:03 2016 - [info] HealthCheck: SSH to 10.1.252.161 is reachable.
    Fri Dec 9 15:46:04 2016 - [info] Master MHA Node version is 0.56.
    Fri Dec 9 15:46:04 2016 - [info] Checking recovery script configurations on 10.1.252.161(10.1.252.161:3306)..
    Fri Dec 9 15:46:04 2016 - [info]   Executing command: save_binary_logs --command=test --start_pos=4 --binlog_dir=/var/lib/mysql,/var/log/mysql --output_file=/data/masterha/app1/save_binary_logs_test --manager_version=0.56 --start_file=master-log.000001
    Fri Dec 9 15:46:04 2016 - [info]   Connecting to root@10.1.252.161(10.1.252.161:22)..
      Creating /data/masterha/app1 if not exists..
      Creating directory /data/masterha/app1.. done.
       ok.
      Checking output directory is accessible or not..
       ok.
      Binlog found at /var/lib/mysql, up to master-log.000001
    Fri Dec 9 15:46:04 2016 - [info] Binlog setting check done.
    Fri Dec 9 15:46:04 2016 - [info] Checking SSH publickey authentication and checking recovery script configurations on all alive slave servers..
    Fri Dec 9 15:46:04 2016 - [info]   Executing command : apply_diff_relay_logs --command=test --slave_user='mhaadmin' --slave_host=10.1.252.73 --slave_ip=10.1.252.73 --slave_port=3306 --workdir=/data/masterha/app1 --target_version=5.5.44-MariaDB-log --manager_version=0.56 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx
    Fri Dec 9 15:46:04 2016 - [info]   Connecting to root@10.1.252.73(10.1.252.73:22)..
      Creating directory /data/masterha/app1.. done.
      Checking slave recovery environment settings..
        Opening /var/lib/mysql/relay-log.info ... ok.
        Relay log found at /var/lib/mysql, up to relay-log.000002
        Temporary relay log file is /var/lib/mysql/relay-log.000002
        Testing mysql connection and privileges.. done.
        Testing mysqlbinlog output.. done.
        Cleaning up test file(s).. done.
    Fri Dec 9 15:46:05 2016 - [info]   Executing command : apply_diff_relay_logs --command=test --slave_user='mhaadmin' --slave_host=10.1.252.156 --slave_ip=10.1.252.156 --slave_port=3306 --workdir=/data/masterha/app1 --target_version=5.5.44-MariaDB-log --manager_version=0.56 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx
    Fri Dec 9 15:46:05 2016 - [info]   Connecting to root@10.1.252.156(10.1.252.156:22)..
      Creating directory /data/masterha/app1.. done.
      Checking slave recovery environment settings..
        Opening /var/lib/mysql/relay-log.info ... ok.
        Relay log found at /var/lib/mysql, up to relay-log.000002
        Temporary relay log file is /var/lib/mysql/relay-log.000002
        Testing mysql connection and privileges.. done.
        Testing mysqlbinlog output.. done.
        Cleaning up test file(s).. done.
    Fri Dec 9 15:46:05 2016 - [info] Slaves settings check done.
    Fri Dec 9 15:46:05 2016 - [info]
    10.1.252.161(10.1.252.161:3306) (current master)
     +--10.1.252.73(10.1.252.73:3306)
     +--10.1.252.156(10.1.252.156:3306)

    Fri Dec 9 15:46:05 2016 - [info] Checking replication health on 10.1.252.73..
    Fri Dec 9 15:46:05 2016 - [info]  ok.
    Fri Dec 9 15:46:05 2016 - [info] Checking replication health on 10.1.252.156..
    Fri Dec 9 15:46:05 2016 - [info]  ok.
    Fri Dec 9 15:46:05 2016 - [warning] master_ip_failover_script is not defined.   # you must provide this script yourself
    Fri Dec 9 15:46:05 2016 - [warning] shutdown_script is not defined.
    Fri Dec 9 15:46:05 2016 - [info] Got exit code 0 (Not master dead).

    MySQL Replication Health is OK.   # the replication cluster's connection settings are OK

5. Start MHA. Normally it runs in the background; while debugging, you can run it in the foreground.

    # Foreground (useful while debugging)
    [root@node1 ~]# masterha_manager --conf=/etc/masterha/app1.cnf
    Fri Dec 9 16:00:43 2016 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.
    Fri Dec 9 16:00:43 2016 - [info] Reading application default configuration from /etc/masterha/app1.cnf..
    Fri Dec 9 16:00:43 2016 - [info] Reading server configuration from /etc/masterha/app1.cnf..
    # Background, with the output redirected to the log file
    [root@node1 ~]# nohup masterha_manager --conf=/etc/masterha/app1.cnf > /data/masterha/app1/manager.log 2>&1 &

Once it has started, check the status of the master with:

    [root@node1 ~]# masterha_check_status --conf=/etc/masterha/app1.cnf
    app1 (pid:4222) is running(0:PING_OK), master:10.1.252.161

The line app1 (pid:4222) is running(0:PING_OK) means the MHA service is running normally; otherwise the output looks like app1 is stopped(2:NOT_RUNNING).
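For monitoring or cron jobs, the status line can be reduced to a machine-friendly "<state> <master>" pair. This hypothetical bash parser relies only on the two message formats shown in this article (running with PING_OK, and stopped with NOT_RUNNING); other states are matched by the same pattern, but they are not shown here.

```bash
# Hypothetical parser for masterha_check_status output.
# Prints "<STATE> <master_ip>", using "-" when no master is reported.
parse_mha_status() {
    local line=$1 state=UNKNOWN master=""
    local status_re='\(([0-9]+):([A-Z_]+)\)'   # e.g. "(0:PING_OK)"
    local master_re='master:([0-9.]+)'          # e.g. "master:10.1.252.161"
    if [[ $line =~ $status_re ]]; then
        state=${BASH_REMATCH[2]}
    fi
    if [[ $line =~ $master_re ]]; then
        master=${BASH_REMATCH[1]}
    fi
    printf '%s %s\n' "$state" "${master:--}"
}
```

Usage: parse_mha_status "$(masterha_check_status --conf=/etc/masterha/app1.cnf)"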

To stop MHA, use the masterha_stop command:

    [root@node1 ~]# masterha_stop --conf=/etc/masterha/app1.cnf
    Stopped app1 successfully.

III. Test Failover

1. Stop MariaDB on the master node (killing the processes with -9 simulates a crash):

    [root@node2 ~]# killall -9 mysqld mysqld_safe
    [root@node2 ~]# ss -tnl   # port 3306 is no longer listening
    State    Recv-Q Send-Q   Local Address:Port    Peer Address:Port
    LISTEN   0      128      *:22                  *:*
    LISTEN   0      128      127.0.0.1:631         *:*
    LISTEN   0      100      127.0.0.1:25          *:*
    LISTEN   0      128      127.0.0.1:6010        *:*
    LISTEN   0      128      :::80                 :::*
    LISTEN   0      128      :::22                 :::*
    LISTEN   0      128      ::1:631               :::*
    LISTEN   0      100      ::1:25                :::*
    LISTEN   0      128      ::1:6010              :::*

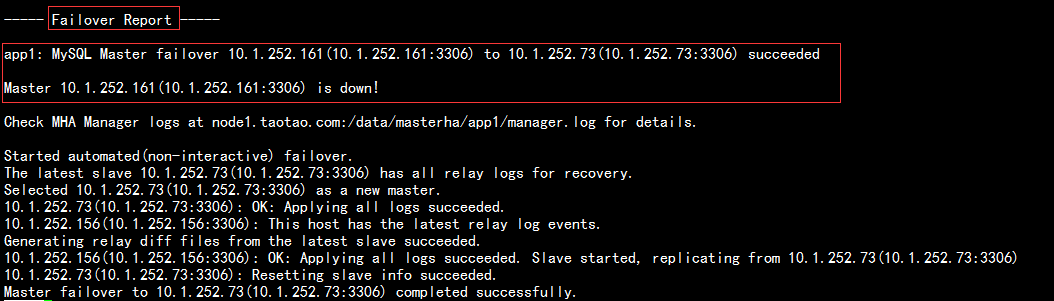

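2. Check the log on the manager node. The messages in /data/masterha/app1/manager.log show that the failure of 10.1.252.161 was detected and a failover was performed automatically, promoting 10.1.252.73 to master.

Note: once the failover completes, the manager stops automatically, so masterha_check_status now reports an error: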

    [root@node1 ~]# masterha_check_status --conf=/etc/masterha/app1.cnf
    app1 is stopped(2:NOT_RUNNING).

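3. Provide a new slave to repair the replication cluster

After the original master fails, prepare a new MySQL node. Restore its data from a backup of the current master, then configure it as a slave of the new master in the same way the slaves were configured earlier. If the replacement is a brand-new host, either give it the IP address of the old master or update the corresponding address in app1.cnf. Then start the manager again and check its status: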

    # MHA cannot be started unless this check succeeds
    [root@node1 ~]# masterha_check_repl --conf=/etc/masterha/app1.cnf
    [root@node1 ~]# nohup masterha_manager --conf=/etc/masterha/app1.cnf > /data/masterha/app1/manager.log 2>&1 &
    [1] 5473
    [root@node1 ~]# masterha_check_status --conf=/etc/masterha/app1.cnf
    app1 (pid:5473) is running(0:PING_OK), master:10.1.252.73
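Because the manager should only be started once masterha_check_repl passes, the two steps can be wrapped together. This is a hypothetical bash helper: CHECK_CMD, MANAGER_CMD and MANAGER_OUT are overridable variables introduced here (for example to test with stub commands), and success is detected from the "MySQL Replication Health is OK." line shown in the check output earlier.

```bash
# Hypothetical wrapper: run the replication check, then start the manager in the
# background only if the check reported a healthy cluster.
start_mha_if_healthy() {
    local conf=${1:-/etc/masterha/app1.cnf}
    local check_cmd=${CHECK_CMD:-masterha_check_repl}
    local manager_cmd=${MANAGER_CMD:-masterha_manager}
    local log=${MANAGER_OUT:-/data/masterha/app1/manager.log}
    if ! "$check_cmd" --conf="$conf" 2>&1 | grep -q 'MySQL Replication Health is OK'; then
        echo "replication check failed; not starting $manager_cmd" >&2
        return 1
    fi
    # Same invocation as in the article: detached, output appended to the log file
    nohup "$manager_cmd" --conf="$conf" > "$log" 2>&1 &
    echo "started $manager_cmd with pid $!"
}
```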

That completes the deployment of the MHA high-availability cluster and the failover test.

IV. Further Work

Real-world deployments need the following additional work:

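Provide an additional detection mechanism (for example MHA's secondary_check_script) so that the manager does not misjudge the master's state;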

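Serve clients through a virtual IP address on the master, so that client requests still reach the right node after the master changes (this is the job of master_ip_failover_script, flagged as undefined by masterha_check_repl);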

During failover, perform STONITH on the old master to avoid split-brain; this can be done by specifying shutdown_script;

Perform an online master switchover when needed (masterha_master_switch).
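For the virtual IP item, MHA ships a Perl sample of master_ip_failover_script; the bash sketch below shows the same idea under stated assumptions. The VIP 10.1.252.100/16, the interface eth0 and the DRY_RUN switch are hypothetical, and only the arguments used here (--command, --orig_master_host, --new_master_host, --ssh_user) are parsed; anything else MHA passes is ignored.

```bash
#!/usr/bin/env bash
# Hypothetical master_ip_failover_script in bash. VIP and IFACE are lab assumptions.
VIP=${VIP:-10.1.252.100/16}
IFACE=${IFACE:-eth0}

# With DRY_RUN set, print the command instead of executing it
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

master_ip_failover() {
    local command="" orig_host="" new_host="" ssh_user=root arg
    for arg in "$@"; do
        case $arg in
            --command=*)          command=${arg#*=} ;;
            --orig_master_host=*) orig_host=${arg#*=} ;;
            --new_master_host=*)  new_host=${arg#*=} ;;
            --ssh_user=*)         ssh_user=${arg#*=} ;;
            *) ;;                 # ignore the other arguments MHA passes
        esac
    done
    case $command in
        stop|stopssh)
            # Drop the VIP from the old master; it may be unreachable, so ignore errors
            run ssh -o ConnectTimeout=5 "$ssh_user@$orig_host" \
                "ip addr del $VIP dev $IFACE" || true ;;
        start)
            # Bring the VIP up on the new master and announce it with gratuitous ARP
            run ssh -o ConnectTimeout=5 "$ssh_user@$new_host" \
                "ip addr add $VIP dev $IFACE && arping -q -U -c 3 -I $IFACE ${VIP%/*}" ;;
        status)
            return 0 ;;
        *)
            echo "usage: $0 --command=start|stop|stopssh|status ..." >&2
            return 1 ;;
    esac
}

# Run only when executed directly with arguments (as MHA does), not when sourced
if [[ ${BASH_SOURCE[0]} == "$0" && $# -gt 0 ]]; then
    master_ip_failover "$@"
    exit $?
fi
```

To use it, save it under a path of your choice, make it executable, and set master_ip_failover_script to that path in the [server default] section of app1.cnf. The VIP also has to be brought up by hand on the initial master (node2) before clients use it.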

This article is from the “逐梦小涛” blog; please retain the source: http://1992tao.blog.51cto.com/11606804/1881275
