OpenStack Icehouse Setup, Part 3: Nova

Installing Nova

[root@linux-node1 src]# cd ~

[root@linux-node1 ~]# cd /usr/local/src/nova-2014.1

[root@linux-node1 nova-2014.1]# python setup.py install

[root@linux-node1 nova]# pwd

/usr/local/src/nova-2014.1/etc/nova

[root@linux-node1 nova]# ll

total 48

-rw-rw-r-- 1 1004 1004 3120 Apr 17 17:47 api-paste.ini

-rw-rw-r-- 1 1004 1004 723 Apr 17 17:47 cells.json

-rw-rw-r-- 1 1004 1004 1346 Apr 17 17:47 logging_sample.conf

-rw-rw-r-- 1 1004 1004 16750 Apr 17 17:47 policy.json

-rw-rw-r-- 1 1004 1004 124 Apr 17 17:47 README-nova.conf.txt

-rw-rw-r-- 1 1004 1004 73 Apr 17 17:47 release.sample

-rw-rw-r-- 1 1004 1004 934 Apr 17 17:47 rootwrap.conf

drwxrwxr-x 2 1004 1004 4096 Apr 17 17:56 rootwrap.d

[root@linux-node1 nova]# mkdir /etc/nova

[root@linux-node1 nova]# mkdir /var/log/nova

[root@linux-node1 nova]# mkdir /var/lib/nova

[root@linux-node1 nova]# mkdir /var/run/nova

[root@linux-node1 nova]# mkdir /var/lib/nova/instances

[root@linux-node1 nova]# cp -r * /etc/nova/

[root@linux-node1 nova]# cd /etc/nova/
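
The directory creation above can be made repeatable with mkdir -p, which does not fail when a directory already exists. The following is only a sketch: the function name make_nova_dirs and the NOVA_ROOT prefix are illustrative and not part of Nova. Passing an empty prefix (as root) would target the real paths.

```shell
# make_nova_dirs [PREFIX]: create the directories used in this guide.
# PREFIX is a hypothetical root used so the sketch can be tried safely;
# with an empty PREFIX the real /etc/nova, /var/log/nova, ... are created.
make_nova_dirs() {
    root="${1:-}"
    for d in /etc/nova /var/log/nova /var/lib/nova /var/run/nova /var/lib/nova/instances; do
        mkdir -p "$root$d" || return 1
    done
}

# Try it in a scratch directory first.
NOVA_ROOT=$(mktemp -d)
make_nova_dirs "$NOVA_ROOT"
ls "$NOVA_ROOT/var/lib/nova"
```

Running the function a second time is harmless, which plain mkdir is not.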

Upload a Nova configuration file; see the attachment nova20148191418.conf.

[root@linux-node1 nova]# ll

total 48

-rw-r--r-- 1 root root 3120 Aug 19 05:25 api-paste.ini

-rw-r--r-- 1 root root 723 Aug 19 05:25 cells.json

-rw-r--r-- 1 root root 1346 Aug 19 05:25 logging_sample.conf

-rw-r--r-- 1 root root 16750 Aug 19 05:25 policy.json

-rw-r--r-- 1 root root 124 Aug 19 05:25 README-nova.conf.txt

-rw-r--r-- 1 root root 73 Aug 19 05:25 release.sample

-rw-r--r-- 1 root root 934 Aug 19 05:25 rootwrap.conf

drwxr-xr-x 2 root root 4096 Aug 19 05:25 rootwrap.d

[root@linux-node1 nova]# rz

z waiting to receive.**B0100000023be50

[root@linux-node1 nova]# ll

total 148

-rw-r--r-- 1 root root 3120 Aug 19 05:25 api-paste.ini

-rw-r--r-- 1 root root 723 Aug 19 05:25 cells.json

-rw-r--r-- 1 root root 1346 Aug 19 05:25 logging_sample.conf

-rw-r--r-- 1 root root 99091 Aug 19 2014 nova.conf

-rw-r--r-- 1 root root 16750 Aug 19 05:25 policy.json

-rw-r--r-- 1 root root 124 Aug 19 05:25 README-nova.conf.txt

-rw-r--r-- 1 root root 73 Aug 19 05:25 release.sample

-rw-r--r-- 1 root root 934 Aug 19 05:25 rootwrap.conf

drwxr-xr-x 2 root root 4096 Aug 19 05:25 rootwrap.d

[root@linux-node1 nova]# mv logging_sample.conf logging.conf

[root@linux-node1 ~]# keystone service-create --name=nova --type=compute --description="Openstack Compute"

+-------------+----------------------------------+

| Property    | Value                            |

+-------------+----------------------------------+

| description | Openstack Compute                |

| enabled     | True                             |

| id          | 95b294a2be074f548baedbd5744c518f |

| name        | nova                             |

| type        | compute                          |

+-------------+----------------------------------+

Replace the IP address throughout the configuration file (a vim substitution):

:%s/192.168.1.1/192.168.33.11/g
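
The same substitution can be done outside vim with sed. This is a minimal sketch, not the author's method: replace_ip is a hypothetical helper, and it is demonstrated on a scratch file rather than on /etc/nova/nova.conf. Unlike the vim command above, it escapes the dots so that they match only a literal ".".

```shell
# replace_ip FILE OLD NEW: rewrite every occurrence of OLD with NEW in FILE.
# Dots in OLD are escaped so they match literally rather than "any character".
replace_ip() {
    file=$1
    old=$(printf '%s' "$2" | sed 's/\./\\./g')
    tmp=$(mktemp) || return 1
    # Write through a temporary file, then copy back to keep FILE's permissions.
    sed "s/$old/$3/g" "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demonstration on a scratch file.
demo=$(mktemp)
printf 'rabbit_host=192.168.1.1\nauth_uri=http://192.168.1.1:5000\n' > "$demo"
replace_ip "$demo" 192.168.1.1 192.168.33.11
cat "$demo"
```

Note that the pattern is not anchored, so it would also rewrite the front of a longer address such as 192.168.1.10; check the file afterwards if such addresses are present.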

connection=mysql://nova:nova@192.168.33.11/nova

rabbit_host=192.168.33.11

# The RabbitMQ broker port where a single node is used.

# (integer value)

rabbit_port=5672

# RabbitMQ HA cluster host:port pairs. (list value)

rabbit_hosts=$rabbit_host:$rabbit_port

# Connect over SSL for RabbitMQ. (boolean value)

rabbit_use_ssl=false

# The RabbitMQ userid. (string value)

rabbit_userid=guest

# The RabbitMQ password. (string value)

rabbit_password=guest

# The strategy to use for auth: noauth or keystone. (string

# value)

auth_strategy=keystone

# Host providing the admin Identity API endpoint (string

# value)

auth_host=192.168.33.11

# Port of the admin Identity API endpoint (integer value)

auth_port=35357

# Protocol of the admin Identity API endpoint(http or https)

# (string value)

auth_protocol=http

# Complete public Identity API endpoint (string value)

auth_uri=http://192.168.33.11:5000

# API version of the admin Identity API endpoint (string

# value)

auth_version=v2.0

# Keystone account username (string value)

admin_user=admin

# Keystone account password (string value)

admin_password=admin

# Keystone service account tenant name to validate user tokens

# (string value)

admin_tenant_name=admin

[root@linux-node1 nova]# grep '^[a-z]' /etc/nova/nova.conf

rabbit_host=192.168.33.11

rabbit_port=5672

rabbit_hosts=$rabbit_host:$rabbit_port

rabbit_use_ssl=false

rabbit_userid=guest

rabbit_password=guest

rabbit_virtual_host=/

rpc_backend=rabbit

my_ip=192.168.33.11

state_path=/var/lib/nova

auth_strategy=keystone

instances_path=$state_path/instances

network_api_class=nova.network.neutronv2.api.API

linuxnet_interface_driver=nova.network.linux_net.LinuxBridgeInterfaceDriver

neutron_url=http://192.168.33.11:9696

neutron_admin_username=neutron

neutron_admin_password=neutron

neutron_admin_tenant_name=service

neutron_admin_auth_url=http://192.168.33.11:5000/v2.0

neutron_auth_strategy=keystone

security_group_api=neutron

lock_path=/var/lib/nova/tmp

debug=true

resize_fs_using_block_device=false

compute_driver=libvirt.LibvirtDriver

use_cow_images=false

vnc_enabled=false

volume_api_class=nova.volume.cinder.API

connection=mysql://nova:nova@192.168.33.11/nova

auth_host=192.168.33.11

auth_port=35357

auth_protocol=http

auth_uri=http://192.168.33.11:5000

auth_version=v2.0

admin_user=admin

admin_password=admin

admin_tenant_name=admin

virt_type=qemu

vif_driver=nova.virt.libvirt.vif.NeutronLinuxBridgeVIFDriver
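
To check a single setting instead of reading the whole grep output, a small lookup function helps. The sketch below uses a hypothetical helper named conf_get and a scratch file in place of /etc/nova/nova.conf. Like the grep above, it ignores section headers, so when a key appears in more than one section only the first active line is reported.

```shell
# conf_get FILE KEY: print the value of the first active "KEY=value" line.
# Commented-out lines do not match because their first field starts with "#".
conf_get() {
    awk -F= -v key="$2" '$1 == key { print substr($0, length(key) + 2); exit }' "$1"
}

# Demonstration on a scratch file with a few lines in the nova.conf style.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# The RabbitMQ broker address
#rabbit_host=localhost
rabbit_host=192.168.33.11
connection=mysql://nova:nova@192.168.33.11/nova
EOF
conf_get "$sample" rabbit_host
conf_get "$sample" connection
```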

Sync the Nova database

[root@linux-node1 ~]# nova-manage db sync

Check the database:

[root@linux-node1 ~]# mysql -h 192.168.33.11 -unova -pnova -e"use nova;show tables;"

+--------------------------------------------+

| Tables_in_nova |

+--------------------------------------------+

| agent_builds |

| aggregate_hosts |

| aggregate_metadata |

| aggregates |

| block_device_mapping |

| bw_usage_cache |

| cells |

| certificates |

| compute_nodes |

| console_pools |

| consoles |

| dns_domains |

| fixed_ips |

| floating_ips |

| instance_actions |

| instance_actions_events |

| instance_faults |

| instance_group_member |

| instance_group_metadata |

| instance_group_policy |

| instance_groups |

| instance_id_mappings |

| instance_info_caches |

| instance_metadata |

| instance_system_metadata |

| instance_type_extra_specs |

| instance_type_projects |

| instance_types |

| instances |

| iscsi_targets |

| key_pairs |

| migrate_version |

| migrations |

| networks |

| pci_devices |

| project_user_quotas |

| provider_fw_rules |

| quota_classes |

| quota_usages |

| quotas |

| reservations |

| s3_images |

| security_group_default_rules |

| security_group_instance_association |

| security_group_rules |

| security_groups |

| services |

| shadow_agent_builds |

| shadow_aggregate_hosts |

| shadow_aggregate_metadata |

| shadow_aggregates |

| shadow_block_device_mapping |

| shadow_bw_usage_cache |

| shadow_cells |

| shadow_certificates |

| shadow_compute_nodes |

| shadow_console_pools |

| shadow_consoles |

| shadow_dns_domains |

| shadow_fixed_ips |

| shadow_floating_ips |

| shadow_instance_actions |

| shadow_instance_actions_events |

| shadow_instance_faults |

| shadow_instance_group_member |

| shadow_instance_group_metadata |

| shadow_instance_group_policy |

| shadow_instance_groups |

| shadow_instance_id_mappings |

| shadow_instance_info_caches |

| shadow_instance_metadata |

| shadow_instance_system_metadata |

| shadow_instance_type_extra_specs |

| shadow_instance_type_projects |

| shadow_instance_types |

| shadow_instances |

| shadow_iscsi_targets |

| shadow_key_pairs |

| shadow_migrate_version |

| shadow_migrations |

| shadow_networks |

| shadow_pci_devices |

| shadow_project_user_quotas |

| shadow_provider_fw_rules |

| shadow_quota_classes |

| shadow_quota_usages |

| shadow_quotas |

| shadow_reservations |

| shadow_s3_images |

| shadow_security_group_default_rules |

| shadow_security_group_instance_association |

| shadow_security_group_rules |

| shadow_security_groups |

| shadow_services |

| shadow_snapshot_id_mappings |

| shadow_snapshots |

| shadow_task_log |

| shadow_virtual_interfaces |

| shadow_volume_id_mappings |

| shadow_volume_usage_cache |

| shadow_volumes |

| snapshot_id_mappings |

| snapshots |

| task_log |

| virtual_interfaces |

| volume_id_mappings |

| volume_usage_cache |

| volumes |

+--------------------------------------------+

The tables exist, which confirms that the database sync worked.
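
Rather than reading the table list by eye, a few key tables can be checked with a script. The following is a sketch under assumptions: check_tables is a hypothetical helper, and which tables count as essential is a choice made here for illustration. The demonstration uses a canned listing so that it runs without a database.

```shell
# check_tables NAME...: read table names on stdin (one per line), print each
# expected NAME that is absent, and return non-zero if any is missing.
check_tables() {
    listing=$(cat)
    status=0
    for name in "$@"; do
        if ! printf '%s\n' "$listing" | grep -qxF -- "$name"; then
            echo "missing: $name"
            status=1
        fi
    done
    return "$status"
}

# Real use (needs the database from this guide; -N drops the header row):
#   mysql -h 192.168.33.11 -unova -pnova -N -e 'show tables' nova | check_tables instances services
# Self-contained demonstration with a canned listing:
printf 'instances\nservices\ncompute_nodes\n' | check_tables instances services migrations || echo "schema incomplete"
```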

[root@linux-node1 ~]# keystone endpoint-create \

> --service-id=95b294a2be074f548baedbd5744c518f \

> --publicurl=http://192.168.33.11:8774/v2/%\(tenant_id\)s \

> --internalurl=http://192.168.33.11:8774/v2/%\(tenant_id\)s \

> --adminurl=http://192.168.33.11:8774/v2/%\(tenant_id\)s

+-------------+--------------------------------------------+

| Property    | Value                                      |

+-------------+--------------------------------------------+

| adminurl    | http://192.168.33.11:8774/v2/%(tenant_id)s |

| id          | 1ad88c16010d41da93288d7b4fe78332           |

| internalurl | http://192.168.33.11:8774/v2/%(tenant_id)s |

| publicurl   | http://192.168.33.11:8774/v2/%(tenant_id)s |

| region      | regionOne                                  |

| service_id  | 95b294a2be074f548baedbd5744c518f           |

+-------------+--------------------------------------------+

The same command on a single line, for copying:

keystone endpoint-create --service-id=95b294a2be074f548baedbd5744c518f --publicurl=http://192.168.33.11:8774/v2/%\(tenant_id\)s --internalurl=http://192.168.33.11:8774/v2/%\(tenant_id\)s --adminurl=http://192.168.33.11:8774/v2/%\(tenant_id\)s
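
The backslashes in the command are there only to stop the shell from interpreting the parentheses in %(tenant_id)s; Keystone receives the URL without them, as the output table shows. The sketch below builds the URL once with single quotes and confirms that it equals the escaped form. The function name nova_endpoint_url is illustrative.

```shell
# nova_endpoint_url HOST: print the Compute v2 endpoint template for HOST.
# Inside single quotes the parentheses need no backslashes; %% is printf's
# way of producing one literal percent sign.
nova_endpoint_url() {
    printf 'http://%s:8774/v2/%%(tenant_id)s\n' "$1"
}

url=$(nova_endpoint_url 192.168.33.11)
echo "$url"

# The backslash-escaped spelling from the transcript yields the same string.
escaped=http://192.168.33.11:8774/v2/%\(tenant_id\)s
[ "$url" = "$escaped" ] && echo "identical"
```

With the URL in a variable, the three options can be written as --publicurl="$url" --internalurl="$url" --adminurl="$url".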

[root@linux-node1 ~]# cd init.d

[root@linux-node1 init.d]# cp openstack-nova-api /etc/init.d/

[root@linux-node1 init.d]# cp openstack-nova-cert /etc/init.d/

[root@linux-node1 init.d]# cp openstack-nova-conductor /etc/init.d/

[root@linux-node1 init.d]# cp openstack-nova-scheduler /etc/init.d/

[root@linux-node1 init.d]# cp openstack-nova-consoleauth /etc/init.d/

[root@linux-node1 init.d]# cp openstack-nova-novncproxy /etc/init.d/

[root@linux-node1 init.d]# chmod +x /etc/init.d/openstack-*

[root@linux-node1 init.d]# chkconfig --add openstack-nova-api

[root@linux-node1 init.d]# chkconfig --add openstack-nova-cert

[root@linux-node1 init.d]# chkconfig --add openstack-nova-conductor

[root@linux-node1 init.d]# chkconfig --add openstack-nova-scheduler

[root@linux-node1 init.d]# chkconfig --add openstack-nova-consoleauth

[root@linux-node1 init.d]# chkconfig --add openstack-nova-novncproxy

[root@linux-node1 init.d]# chkconfig openstack-nova-api on

[root@linux-node1 init.d]# chkconfig openstack-nova-cert on

[root@linux-node1 init.d]# chkconfig openstack-nova-conductor on

[root@linux-node1 init.d]# chkconfig openstack-nova-scheduler on

[root@linux-node1 init.d]# chkconfig openstack-nova-consoleauth on

[root@linux-node1 init.d]# chkconfig openstack-nova-novncproxy on
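
The eighteen copy and chkconfig commands above follow one pattern per service, so they can be generated by a loop. This sketch only prints the commands (a dry run) so it can be inspected safely. The function name register_nova_services is illustrative, and piping its output to sh as root from the init.d directory would be the step that actually applies them.

```shell
# The six Nova services installed on the controller in this guide.
NOVA_SERVICES="api cert conductor scheduler consoleauth novncproxy"

# register_nova_services: print, without running, the commands that install
# each init script and enable it at boot.
register_nova_services() {
    for svc in $NOVA_SERVICES; do
        echo "cp openstack-nova-$svc /etc/init.d/"
        echo "chmod +x /etc/init.d/openstack-nova-$svc"
        echo "chkconfig --add openstack-nova-$svc"
        echo "chkconfig openstack-nova-$svc on"
    done
}

register_nova_services
```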

[root@linux-node1 init.d]# for i in {api,cert,conductor,scheduler,consoleauth,novncproxy};do /etc/init.d/openstack-nova-$i start;done

Starting openstack-nova-api: [ OK ]

Starting openstack-nova-cert: [ OK ]

Starting openstack-nova-conductor: [ OK ]

Starting openstack-nova-scheduler: [ OK ]

Starting openstack-nova-consoleauth: [ OK ]

Starting openstack-nova-novncproxy: [ OK ]

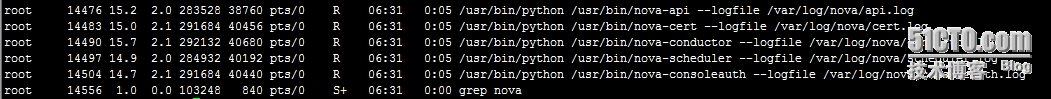

[root@linux-node1 init.d]# ps aux|grep nova

Although the init script reported success, openstack-nova-novncproxy is not running, so let's find out why.
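
Because the init scripts report OK even when a daemon exits right away, it is worth comparing the process list against the expected services. A minimal sketch follows. The helper stopped_nova_services is hypothetical, and it does a plain substring match, so an unrelated process whose command line mentions a service name would hide a failure.

```shell
# stopped_nova_services: read a process listing on stdin and print every
# expected service whose "nova-<name>" string does not appear in it.
stopped_nova_services() {
    listing=$(cat)
    for svc in api cert conductor scheduler consoleauth novncproxy; do
        case "$listing" in
            *"nova-$svc"*) ;;
            *) echo "openstack-nova-$svc" ;;
        esac
    done
}

# Real use: ps aux | stopped_nova_services
# Demonstration with a canned listing in which only two daemons are up:
printf '%s\n' '/usr/bin/python /usr/bin/nova-api' '/usr/bin/python /usr/bin/nova-cert' | stopped_nova_services
```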

Start it manually to see the error:

[root@linux-node1 ~]# nova-novncproxy --config-file=/etc/nova/nova.conf

Can not find novnc html/js/css files at /usr/share/novnc.

This happens because the noVNC package is not installed.

[root@linux-node1 ~]# wget https://github.com/kanaka/noVNC/archive/v0.4.tar.gz

[root@linux-node1 ~]# ll

total 13620

-rw-------. 1 root root 1149 Jul 22 19:08 anaconda-ks.cfg

-rw-r--r-- 1 root root 13167616 Mar 18 09:04 cirros-0.3.2-x86_64-disk.img

drwxr-xr-x 2 root root 4096 May 4 16:08 init.d

-rw-r--r-- 1 root root 14344 Jul 16 13:03 init.d.zip

-rw-r--r--. 1 root root 23833 Jul 22 19:08 install.log

-rw-r--r--. 1 root root 7688 Jul 22 19:07 install.log.syslog

-rw-r--r-- 1 root root 129 Aug 18 22:09 keystone-admin

-rw-r--r-- 1 root root 711551 Aug 19 06:37 v0.4.tar.gz

[root@linux-node1 ~]# tar zxf v0.4.tar.gz

[root@linux-node1 ~]# ls

anaconda-ks.cfg cirros-0.3.2-x86_64-disk.img init.d init.d.zip install.log install.log.syslog keystone-admin noVNC-0.4 v0.4.tar.gz

[root@linux-node1 ~]# mv noVNC-0.4/ /usr/share/novnc
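
Before restarting the proxy, it can help to confirm that the directory named in the error message now holds the noVNC files. The sketch below is an assumption-laden check: check_novnc_dir is a hypothetical helper, and looking for vnc_auto.html is a guess at the noVNC 0.4 layout, not something nova-novncproxy is confirmed to test for. It is demonstrated on a scratch directory.

```shell
# check_novnc_dir DIR: succeed only if DIR exists and contains vnc_auto.html.
# The file name is an assumption about what an unpacked noVNC tree provides.
check_novnc_dir() {
    [ -d "$1" ] && [ -f "$1/vnc_auto.html" ]
}

# Demonstration: an empty directory fails, one with the page passes.
scratch=$(mktemp -d)
check_novnc_dir "$scratch" || echo "noVNC files not found in $scratch"
touch "$scratch/vnc_auto.html"
check_novnc_dir "$scratch" && echo "noVNC files found in $scratch"
```

On the real host the call would be check_novnc_dir /usr/share/novnc.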

[root@linux-node1 ~]# /etc/init.d/openstack-nova-novncproxy start

Starting openstack-nova-novncproxy: [ OK ]

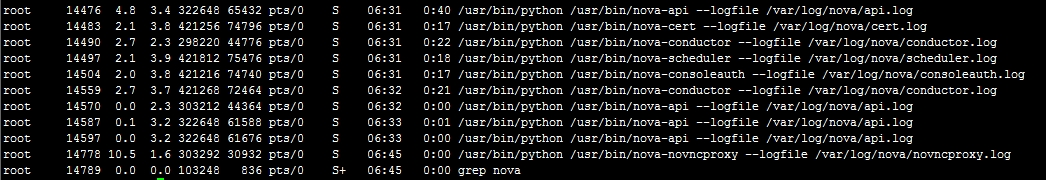

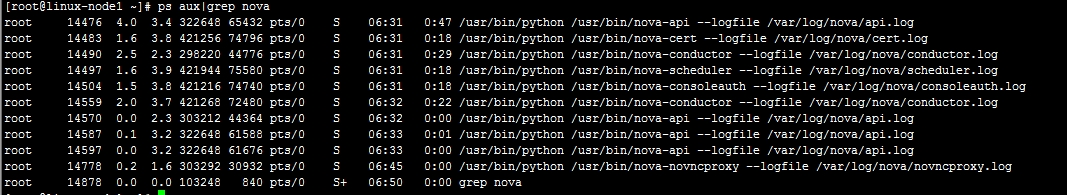

[root@linux-node1 ~]# ps aux|grep nova

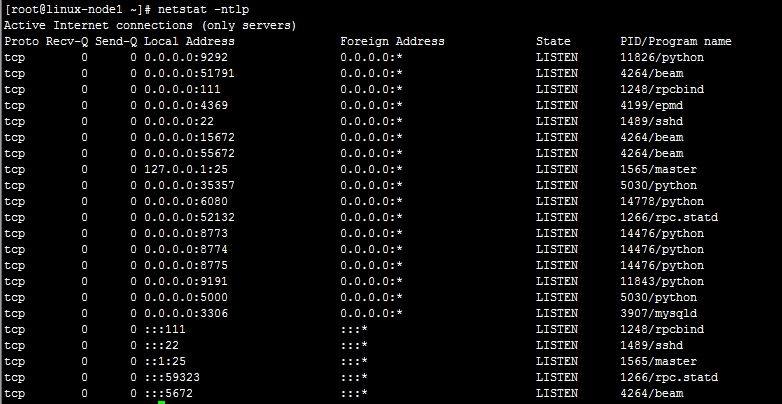

[root@linux-node1 ~]# lsof -i:6080

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

nova-novn 14778 root 3u IPv4 188276 0t0 TCP *:6080 (LISTEN)

[root@linux-node1 ~]# ps aux|grep 14778

root 14778 0.2 1.6 303292 30932 pts/0 S 06:45 0:00 /usr/bin/python /usr/bin/nova-novncproxy --logfile /var/log/nova/novncproxy.log

root 14851 0.0 0.0 103248 840 pts/0 S+ 06:49 0:00 grep 14778

Installing Horizon

[root@linux-node1 ~]# cd /usr/local/src/horizon-2014.1

[root@linux-node1 horizon-2014.1]# python setup.py install

[root@linux-node1 horizon-2014.1]# cd openstack_dashboard/local

[root@linux-node1 local]# ls

enabled __init__.py local_settings.py.example

[root@linux-node1 local]# mv local_settings.py.example local_settings.py

[root@linux-node1 local]# vim local_settings.py

OPENSTACK_HOST = "192.168.33.11"

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v2.0" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "_member_"

[root@linux-node1 local]# cd ../..

[root@linux-node1 horizon-2014.1]# cd ../

[root@linux-node1 src]# pwd

/usr/local/src

[root@linux-node1 src]# yum install httpd mod_wsgi

[root@linux-node1 src]# cd ~

[root@linux-node1 ~]# mv /usr/local/src/horizon-2014.1 /var/www/

[root@linux-node1 ~]# id apache

uid=48(apache) gid=48(apache) groups=48(apache)

[root@linux-node1 ~]# chown -R apache:apache /var/www/horizon-2014.1/

Configure Apache

[root@linux-node1 ~]# cd /etc/httpd/conf.d/

[root@linux-node1 conf.d]# ls

README welcome.conf wsgi.conf

[root@linux-node1 conf.d]# rz

z waiting to receive.**B0100000023be50

[root@linux-node1 conf.d]# ll

total 16

-rw-r--r-- 1 root root 955 Aug 19 2014 horizon.conf

-rw-r--r-- 1 root root 392 Jul 23 22:18 README

-rw-r--r-- 1 root root 299 Jul 18 14:27 welcome.conf

-rw-r--r-- 1 root root 43 Jun 11 19:00 wsgi.conf

[root@linux-node1 conf.d]# vim horizon.conf

<VirtualHost *:80>

ServerAdmin admin@unixhot.com

ServerName 192.168.33.11

DocumentRoot /var/www/horizon-2014.1/

ErrorLog /var/log/httpd/horizon_error.log

LogLevel info

CustomLog /var/log/httpd/horizon_access.log combined

WSGIScriptAlias / /var/www/horizon-2014.1/openstack_dashboard/wsgi/django.wsgi

WSGIDaemonProcess horizon user=apache group=apache processes=3 threads=10 home=/var/www/horizon-2014.1

WSGIApplicationGroup horizon

SetEnv APACHE_RUN_USER apache

SetEnv APACHE_RUN_GROUP apache

WSGIProcessGroup horizon

Alias /media /var/www/horizon-2014.1/openstack_dashboard/static

<Directory /var/www/horizon-2014.1/>

Options FollowSymLinks MultiViews

AllowOverride None

Order allow,deny

Allow from all

</Directory>

</VirtualHost>

WSGISocketPrefix /var/run/horizon

[root@linux-node1 conf.d]# mkdir /var/run/horizon

[root@linux-node1 conf.d]# pwd

/etc/httpd/conf.d

Start Apache

[root@linux-node1 ~]# /etc/init.d/httpd start

Starting httpd: [ OK ]

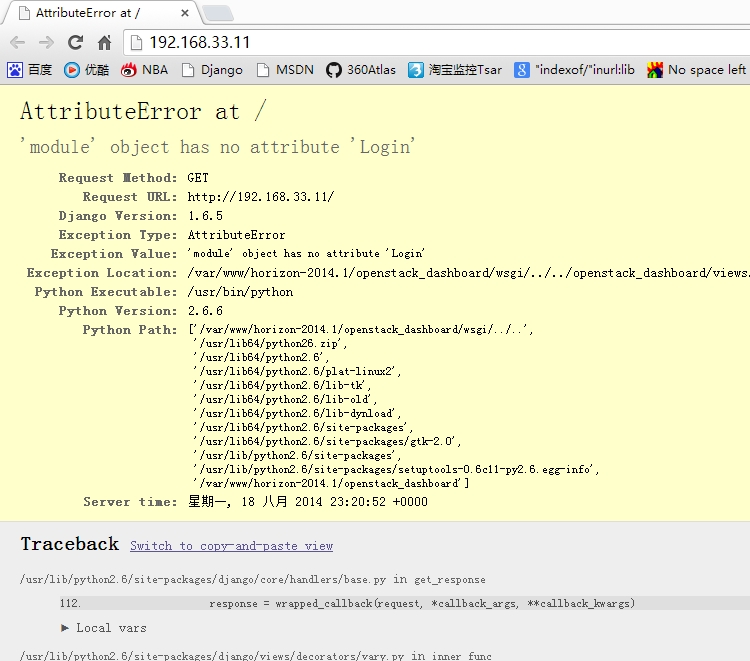

Opening the dashboard in a browser at this point produces an error:

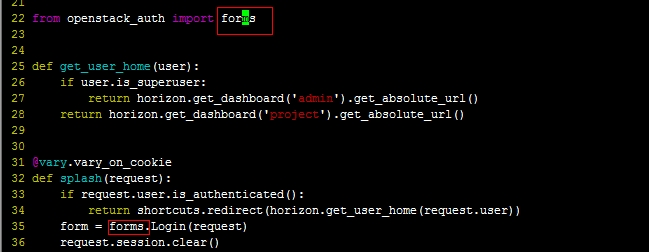

[root@linux-node1 ~]# vim /var/www/horizon-2014.1/openstack_dashboard/views.py +35

@vary.vary_on_cookie

def splash(request):

    if request.user.is_authenticated():

        return shortcuts.redirect(horizon.get_user_home(request.user))

    form = forms.Login(request)

The view refers to forms, so that module has to be imported near the top of the same file:

[root@linux-node1 ~]# vim /var/www/horizon-2014.1/openstack_dashboard/views.py +22

from openstack_auth import forms

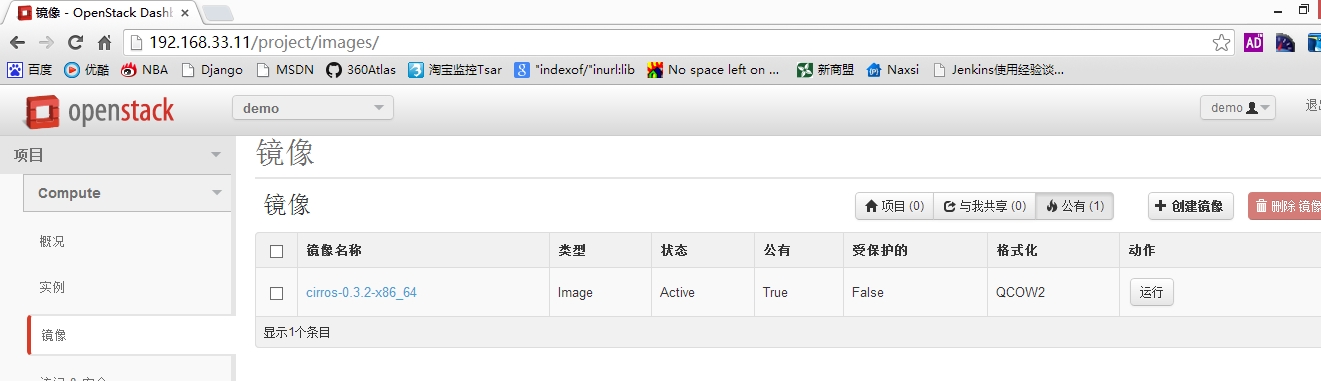

Enter http://192.168.33.11/ in the browser address bar.

We can now log in as the demo user.

This article comes from the "8055082" blog; reposting is not permitted.