
Chapter 4: Tokenization

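4.1 Core analyzer classes

1. Analyzer

Lucene's built-in analyzers include KeywordAnalyzer, SimpleAnalyzer, StopAnalyzer, WhitespaceAnalyzer and StandardAnalyzer.

What each one does:

KeywordAnalyzer emits the whole input as a single token, unchanged;

SimpleAnalyzer splits on non-letter characters and lowercases the result; it works poorly for Chinese;

StandardAnalyzer splits Chinese text into single characters;

StopAnalyzer behaves much like SimpleAnalyzer and additionally removes stop words;

WhitespaceAnalyzer splits on whitespace only.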
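To make the differences concrete, here is a small plain-Java sketch that imitates three of these analyzers on one sample string. It does not call Lucene: the class name AnalyzerSketch and its methods are made up for illustration, and the real analyzers handle more cases than this model does.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Plain-Java model of three built-in analyzers; hypothetical names, no Lucene calls.
public class AnalyzerSketch {

    // KeywordAnalyzer-like: the whole input is one token.
    public static List<String> keyword(String text) {
        return Collections.singletonList(text);
    }

    // WhitespaceAnalyzer-like: split on runs of whitespace only.
    public static List<String> whitespace(String text) {
        List<String> out = new ArrayList<String>();
        for (String s : text.trim().split("\\s+")) {
            if (!s.isEmpty()) {
                out.add(s);
            }
        }
        return out;
    }

    // SimpleAnalyzer-like: maximal runs of letters, lowercased.
    public static List<String> simple(String text) {
        List<String> out = new ArrayList<String>();
        StringBuilder cur = new StringBuilder();
        for (int i = 0; i < text.length(); i++) {
            char c = text.charAt(i);
            if (Character.isLetter(c)) {
                cur.append(Character.toLowerCase(c));
            } else if (cur.length() > 0) {
                // a non-letter ends the current token
                out.add(cur.toString());
                cur.setLength(0);
            }
        }
        if (cur.length() > 0) {
            out.add(cur.toString());
        }
        return out;
    }

    public static void main(String[] args) {
        String text = "Hello, Lucene 3.5 World";
        System.out.println("keyword:    " + keyword(text));
        System.out.println("whitespace: " + whitespace(text));
        System.out.println("simple:     " + simple(text));
    }
}
```

Because Character.isLetter is also true for Chinese characters, simple() returns an unpunctuated Chinese sentence as one long token, which is one way to see why a letter-based analyzer is a poor fit for Chinese.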

2. TokenStream

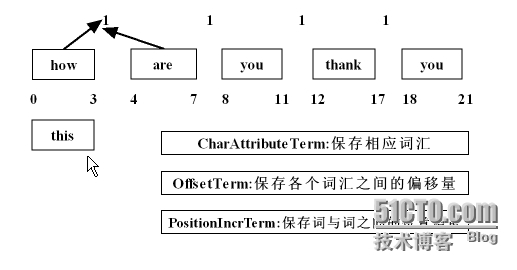

分词器做好处理之后得到的一个流,这个流中存储了分词的各种信息,可以通过TokenStream有效的获取到分词单元信息

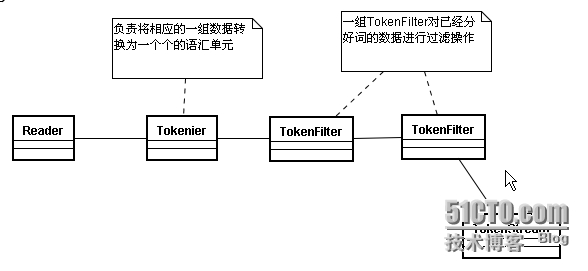

生成的流程

在这个流中所需要存储的数据

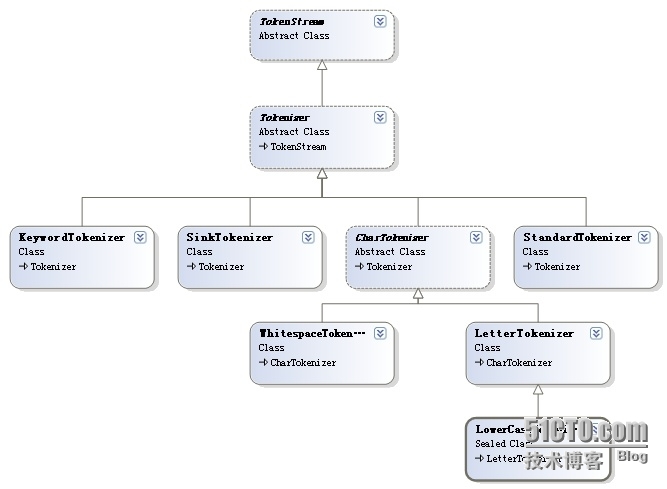
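As a rough illustration of that data, the sketch below models each token as its text plus start and end offsets and a position increment. It is a plain-Java model with hypothetical names (TokenInfoSketch, Token); Lucene itself exposes these values through attribute classes rather than through one token object.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of the per-token data in a token stream; not Lucene code.
public class TokenInfoSketch {

    // Hypothetical value class holding what the stream stores for one token.
    public static final class Token {
        public final String term;   // the token text
        public final int start;     // offset of the first character
        public final int end;       // offset one past the last character
        public final int posInc;    // distance from the previous token

        Token(String term, int start, int end, int posInc) {
            this.term = term;
            this.start = start;
            this.end = end;
            this.posInc = posInc;
        }
    }

    // Whitespace tokenization that records offsets; each token advances one position.
    public static List<Token> tokenize(String text) {
        List<Token> out = new ArrayList<Token>();
        int i = 0;
        int n = text.length();
        while (i < n) {
            while (i < n && Character.isWhitespace(text.charAt(i))) {
                i++;
            }
            int start = i;
            while (i < n && !Character.isWhitespace(text.charAt(i))) {
                i++;
            }
            if (i > start) {
                out.add(new Token(text.substring(start, i), start, i, 1));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        for (Token t : tokenize("how are you")) {
            System.out.println(t.posInc + ":" + t.term + "[" + t.start + "-" + t.end + "]");
        }
    }
}
```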

3. Tokenizer

主要负责接收字符流Reader,将Reader进行分词操作。有如下一些实现类

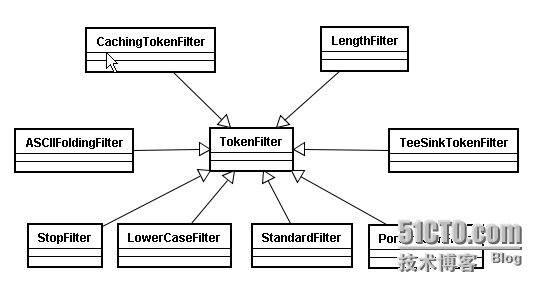

4. TokenFilter

将分词的语汇单元,进行各种各样过滤

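5. Extension: an overview of the TokenFilter classes

(1) TokenFilter

A TokenStream whose input is another TokenStream. Subclasses must override incrementToken().

(2) LowerCaseFilter

Converts the text of each token to lowercase.

(3) FilteringTokenFilter

An abstract base class for TokenFilters that may remove tokens. Subclasses implement accept(), which returns a boolean: true if the current token should be kept. incrementToken() calls accept() to decide whether to return the current token to the caller.

(4) StopFilter

Removes stop words from a token stream.

    protected boolean accept() {
        // keep the token only if it is not a stop word
        return !stopWords.contains(termAtt.buffer(), 0, termAtt.length());
    }

(5) TypeTokenFilter

Removes tokens of the specified types from a token stream.

    protected boolean accept() {
        return useWhiteList == stopTypes.contains(typeAttribute.type());
    }

(6) LetterTokenizer

A tokenizer that divides text at non-letters. That is, it defines tokens as maximal strings of adjacent letters.

(7) The order of TokenFilters

If StopFilter runs before LowerCaseFilter, a stop word such as "The" is not removed, because it does not match the lowercase "the" in the stop-word set. Convert everything to lowercase first and filter stop words afterwards: only once "The" has become "the" can it match the entry in the stop-word set. Without this case normalization, the stop-word set would need every capitalization of every word, which is far too many combinations.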
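The effect of the ordering can be reproduced with a small plain-Java model. This is only a sketch under stated assumptions: the stop-word set is case-sensitive and contains lowercase entries, and the class and method names (FilterOrderSketch, lowerCase, removeStopWords) are hypothetical stand-ins for the Lucene filters, not Lucene API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Plain-Java model of filter ordering; hypothetical names, no Lucene calls.
public class FilterOrderSketch {

    // Assumed case-sensitive stop-word set with lowercase entries only.
    static final Set<String> STOP_WORDS =
            new HashSet<String>(Arrays.asList("the", "a", "an"));

    // Stand-in for LowerCaseFilter.
    public static List<String> lowerCase(List<String> tokens) {
        List<String> out = new ArrayList<String>();
        for (String t : tokens) {
            out.add(t.toLowerCase(Locale.ROOT));
        }
        return out;
    }

    // Stand-in for StopFilter: drops tokens that exactly match a stop word.
    public static List<String> removeStopWords(List<String> tokens) {
        List<String> out = new ArrayList<String>();
        for (String t : tokens) {
            if (!STOP_WORDS.contains(t)) {
                out.add(t);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> tokens = Arrays.asList("The", "quick", "fox");
        // Wrong order: "The" passes the stop filter, then becomes "the".
        System.out.println("stop then lowercase: " + lowerCase(removeStopWords(tokens)));
        // Right order: "The" becomes "the" first and is then removed.
        System.out.println("lowercase then stop: " + removeStopWords(lowerCase(tokens)));
    }
}
```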

The analyzer below chains the components in the correct order: LetterTokenizer, then LowerCaseFilter, then StopFilter.

    import java.io.Reader;
    import java.util.Set;

    import org.apache.lucene.analysis.Analyzer;
    import org.apache.lucene.analysis.LetterTokenizer;
    import org.apache.lucene.analysis.LowerCaseFilter;
    import org.apache.lucene.analysis.StopAnalyzer;
    import org.apache.lucene.analysis.StopFilter;
    import org.apache.lucene.analysis.TokenStream;
    import org.apache.lucene.util.Version;

    public class MyStopAnalyzer extends Analyzer {

        private Set<Object> words;

        // With no custom words, use only the default English stop words.
        public MyStopAnalyzer() {
            this(new String[0]);
        }

        public MyStopAnalyzer(String[] words) {
            // true = ignore case when matching the custom stop words
            this.words = StopFilter.makeStopSet(Version.LUCENE_35, words, true);
            this.words.addAll(StopAnalyzer.ENGLISH_STOP_WORDS_SET);
        }

        @Override
        public TokenStream tokenStream(String fieldName, Reader reader) {
            // split on non-letters -> lowercase -> remove stop words
            return new StopFilter(Version.LUCENE_35,
                    new LowerCaseFilter(Version.LUCENE_35,
                            new LetterTokenizer(Version.LUCENE_35, reader)),
                    this.words);
        }
    }

4.2 Attribute

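    import java.io.StringReader;

    import org.apache.lucene.analysis.Analyzer;
    import org.apache.lucene.analysis.TokenStream;
    import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
    import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;
    import org.apache.lucene.analysis.tokenattributes.PositionIncrementAttribute;
    import org.apache.lucene.analysis.tokenattributes.TypeAttribute;

    public class AnalyzerUtils {

        public static void displayAllTokenInfo(String str, Analyzer a) {
            try {
                TokenStream stream = a.tokenStream("content", new StringReader(str));
                // position increment: the distance between this token and the previous one
                PositionIncrementAttribute pia =
                        stream.addAttribute(PositionIncrementAttribute.class);
                // start and end character offsets of each token
                OffsetAttribute oa =
                        stream.addAttribute(OffsetAttribute.class);
                // the text of each token
                CharTermAttribute cta =
                        stream.addAttribute(CharTermAttribute.class);
                // the type assigned to each token by the tokenizer
                TypeAttribute ta =
                        stream.addAttribute(TypeAttribute.class);
                while (stream.incrementToken()) {
                    System.out.print(pia.getPositionIncrement() + ":");
                    System.out.print(cta + "[" + oa.startOffset() + "-" + oa.endOffset() + "]-->" + ta.type() + "\n");
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

4.3 Custom analyzers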

1. A custom stop-word analyzer

    package com.mzsx.analyzer;

    import java.io.Reader;
    import java.util.Set;

    import org.apache.lucene.analysis.Analyzer;
    import org.apache.lucene.analysis.LetterTokenizer;
    import org.apache.lucene.analysis.LowerCaseFilter;
    import org.apache.lucene.analysis.StopAnalyzer;
    import org.apache.lucene.analysis.StopFilter;
    import org.apache.lucene.analysis.TokenStream;
    import org.apache.lucene.util.Version;

    public class MyStopAnalyzer extends Analyzer {

        private Set<Object> words;

        // With no custom words, use only the default English stop words.
        public MyStopAnalyzer() {
            this(new String[0]);
        }

        public MyStopAnalyzer(String[] words) {
            // true = ignore case when matching the custom stop words
            this.words = StopFilter.makeStopSet(Version.LUCENE_35, words, true);
            this.words.addAll(StopAnalyzer.ENGLISH_STOP_WORDS_SET);
        }

        @Override
        public TokenStream tokenStream(String fieldName, Reader reader) {
            // split on non-letters -> lowercase -> remove stop words
            return new StopFilter(Version.LUCENE_35,
                    new LowerCaseFilter(Version.LUCENE_35,
                            new LetterTokenizer(Version.LUCENE_35, reader)),
                    this.words);
        }
    }

    // Test code

    @Test
    public void myStopAnalyzer() {
        Analyzer a1 = new MyStopAnalyzer(new String[]{"I", "you", "hate"});
        Analyzer a2 = new MyStopAnalyzer();
        String txt = "how are you thank you I hate you";
        AnalyzerUtils.displayAllTokenInfo(txt, a1);
        //AnalyzerUtils.displayToken(txt,a2);
    }

2. A simple implementation of synonym indexing
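The idea behind the classes that follow is that each synonym is emitted as an extra token with a position increment of 0, so it occupies the same position as the original word and a query for either term finds the document. Here is a plain-Java model of that idea, assuming a hypothetical SynonymPositionSketch class and an "increment:term" string format chosen only for display:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model of synonym injection; hypothetical names, no Lucene calls.
public class SynonymPositionSketch {

    // Emits each token with increment 1, followed by its synonyms with increment 0.
    public static List<String> expand(List<String> tokens, Map<String, String[]> synonyms) {
        List<String> out = new ArrayList<String>();
        for (String token : tokens) {
            out.add("1:" + token);
            String[] same = synonyms.get(token);
            if (same != null) {
                for (String s : same) {
                    // increment 0: same position as the original token
                    out.add("0:" + s);
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        Map<String, String[]> synonyms = new HashMap<String, String[]>();
        synonyms.put("china", new String[]{"chinese"});
        System.out.println(expand(Arrays.asList("welcome", "to", "china"), synonyms));
    }
}
```

This model emits synonyms in array order. A filter that keeps pending synonyms on a stack, as the one in this section does, returns them in reverse order, which does not matter for matching because they all share one position.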

    package com.mzsx.analyzer;

    public interface SamewordContext {
        // returns the synonyms of name, or null if it has none
        public String[] getSamewords(String name);
    }

    package com.mzsx.analyzer;

    import java.util.HashMap;
    import java.util.Map;

    public class SimpleSamewordContext implements SamewordContext {

        // word -> its synonyms
        Map<String, String[]> maps = new HashMap<String, String[]>();

        public SimpleSamewordContext() {
            maps.put("中国", new String[]{"天朝", "大陆"});
            maps.put("我", new String[]{"咱", "俺"});
            maps.put("china", new String[]{"chinese"});
        }

        @Override
        public String[] getSamewords(String name) {
            return maps.get(name);
        }
    }

    package com.mzsx.analyzer;

    import java.io.IOException;
    import java.util.Stack;

    import org.apache.lucene.analysis.TokenFilter;
    import org.apache.lucene.analysis.TokenStream;
    import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
    import org.apache.lucene.analysis.tokenattributes.PositionIncrementAttribute;
    import org.apache.lucene.util.AttributeSource;

    public class MySameTokenFilter extends TokenFilter {

        private CharTermAttribute cta = null;
        private PositionIncrementAttribute pia = null;
        // state captured for the most recent token that has synonyms
        private AttributeSource.State current;
        // synonyms still waiting to be emitted
        private Stack<String> sames = null;
        private SamewordContext samewordContext;

        protected MySameTokenFilter(TokenStream input, SamewordContext samewordContext) {
            super(input);
            cta = this.addAttribute(CharTermAttribute.class);
            pia = this.addAttribute(PositionIncrementAttribute.class);
            sames = new Stack<String>();
            this.samewordContext = samewordContext;
        }

        // final, as the TokenStream contract asks of concrete subclasses
        @Override
        public final boolean incrementToken() throws IOException {
            if (sames.size() > 0) {
                // pop the next pending synonym off the stack
                String str = sames.pop();
                // restore the state captured for the original token
                restoreState(current);
                cta.setEmpty();
                cta.append(str);
                // position increment 0: the synonym shares the original token's position
                pia.setPositionIncrement(0);
                return true;
            }
            if (!this.input.incrementToken()) return false;
            if (addSames(cta.toString())) {
                // the token has synonyms, so save the current state first
                current = captureState();
            }
            return true;
        }

        // pushes the synonyms of name onto the stack; returns true if there were any
        private boolean addSames(String name) {
            String[] sws = samewordContext.getSamewords(name);
            if (sws != null) {
                for (String str : sws) {
                    sames.push(str);
                }
                return true;
            }
            return false;
        }
    }

    package com.mzsx.analyzer;

    import java.io.Reader;

    import org.apache.lucene.analysis.Analyzer;
    import org.apache.lucene.analysis.TokenStream;

    import com.chenlb.mmseg4j.Dictionary;
    import com.chenlb.mmseg4j.MaxWordSeg;
    import com.chenlb.mmseg4j.analysis.MMSegTokenizer;

    public class MySameAnalyzer extends Analyzer {

        private SamewordContext samewordContext;

        public MySameAnalyzer(SamewordContext swc) {
            samewordContext = swc;
        }

        @Override
        public TokenStream tokenStream(String fieldName, Reader reader) {
            // mmseg4j dictionary directory used for Chinese word segmentation
            Dictionary dic = Dictionary.getInstance("D:/luceneIndex/dic");
            // segment with mmseg4j, then inject synonyms
            return new MySameTokenFilter(
                    new MMSegTokenizer(new MaxWordSeg(dic), reader), samewordContext);
        }
    }

    // Test code

    @Test
    public void testSameAnalyzer() {
        try {
            Analyzer a2 = new MySameAnalyzer(new SimpleSamewordContext());
            String txt = "我来自中国海南儋州第一中学,welcome to china !";
            Directory dir = new RAMDirectory();
            IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(Version.LUCENE_35, a2));
            Document doc = new Document();
            doc.add(new Field("content", txt, Field.Store.YES, Field.Index.ANALYZED));
            writer.addDocument(doc);
            writer.close();
            IndexSearcher searcher = new IndexSearcher(IndexReader.open(dir));
            // "咱" occurs only in the synonym map, not in the text itself
            TopDocs tds = searcher.search(new TermQuery(new Term("content", "咱")), 10);
            Document d = searcher.doc(tds.scoreDocs[0].doc);
            System.out.println("Original text: " + d.get("content"));
            AnalyzerUtils.displayAllTokenInfo(txt, a2);
        } catch (CorruptIndexException e) {
            e.printStackTrace();
        } catch (LockObtainFailedException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

This article is from the "梦朝思夕" blog; please keep this attribution: http://qiangmzsx.blog.51cto.com/2052549/1549902
