
Deploying Hadoop 2.2.0 on Red Hat 6.2

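Today we will set up a Hadoop 2.2.0 cluster hands-on. The environment is RedHat 6.2, currently a mainstream server operating system. All the installation media used here were downloaded from the Internet, so prepare them before you start.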

I. Environment planning

| Role | Hostname | IP address |
|---|---|---|
| NameNode | master | 192.168.200.2 |
| DataNode | slave1 | 192.168.200.3 |
| DataNode | slave2 | 192.168.200.4 |
| DataNode | slave3 | 192.168.200.5 |
| DataNode | slave4 | 192.168.200.6 |

| Software | Version |
|---|---|
| Operating system | RedHat 6.2 (64-bit) |
| Hadoop | Hadoop 2.2.0 |
| JDK | JDK 1.7 (Linux) |

II. Basic environment configuration

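1. Install the operating system and perform basic configuration

After planning the role of each server, install the operating system on it and configure its network.

(Details omitted here.)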

(1) After the operating system is installed, stop the firewall (iptables) and disable SELinux on all nodes.

service iptables stop

chkconfig iptables off

cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these two values:

# targeted - Targeted processes are protected,

# mls - Multi Level Security protection.

SELINUXTYPE=targeted
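The SELINUX=disabled setting above only takes effect after a reboot. As a sketch (assuming GNU sed, as shipped with RedHat), the same edit can be scripted so it is applied identically on every node; the function name disable_selinux_config is hypothetical. Running setenforce 0 switches the running system to permissive mode in the meantime.

```bash
#!/bin/bash
# Sketch (hypothetical helper): set SELINUX=disabled in an SELinux config file.
# Only the active "SELINUX=" line is rewritten; "SELINUXTYPE=" and the comment
# lines do not match the pattern and are left unchanged.
disable_selinux_config() {
    local cfg="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage as root on every node:
#   service iptables stop && chkconfig iptables off
#   disable_selinux_config /etc/selinux/config
#   setenforce 0    # permissive until the reboot makes "disabled" effective
```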

(2) Copy the Hadoop and JDK packages to the servers.

[root@master home]# ls

jdk-7u67-linux-x64.rpm hadoop-2.2.0.tar.gz

(3) Set the hostname and network configuration on each server (slave2 is shown here)

cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=slave2

cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

HWADDR=f2:85:cd:9a:30:0d

NM_CONTROLLED=yes

ONBOOT=yes

IPADDR=192.168.200.4

BOOTPROTO=none

NETMASK=255.255.255.0

TYPE=Ethernet

GATEWAY=192.168.200.254

IPV6INIT=no

USERCTL=no

(4) Configure the /etc/hosts file on every server

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.200.2 master

192.168.200.3 slave1

192.168.200.4 slave2

192.168.200.5 slave3

192.168.200.6 slave4
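Because every node needs the same entries, a small helper can print only the entries that are still missing, so the file can be appended to without creating duplicates. A sketch assuming bash; the function name missing_host_entries and the CLUSTER_HOSTS variable are hypothetical, with the addresses taken from the planning table.

```bash
#!/bin/bash
# Sketch: print the cluster entries that are still missing from a hosts file.
# CLUSTER_HOSTS (hypothetical) mirrors the planning table in section I.
CLUSTER_HOSTS="192.168.200.2 master
192.168.200.3 slave1
192.168.200.4 slave2
192.168.200.5 slave3
192.168.200.6 slave4"

missing_host_entries() {
    local hosts_file="${1:-/etc/hosts}"
    printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
        [ -n "$name" ] || continue
        # -w matches whole words only, so "slave1" is not found inside "slave10"
        if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name"
        fi
    done
}

# Usage as root on every node:
#   missing_host_entries /etc/hosts >> /etc/hosts
```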

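2. Create the user

We usually do not run Hadoop as root, so create a dedicated user for running and managing it.

The master and every slave node must have the same user and group, i.e. create the hdtest user and group on all servers in the cluster.

Create the user with the following commands: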

useradd hdtest

passwd hdtest

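Copy hadoop-2.2.0.tar.gz into the hdtest user's home directory, change its owner and group to hdtest, and extract it there as /home/hdtest/hadoop-2.2.0 (the HADOOP_HOME used below).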
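As a sketch, the unpack-and-chown step can be done as root in one go. The function name install_hadoop_tarball and the tarball location /home/hadoop-2.2.0.tar.gz are assumptions; doing it on master is enough, because the configured directory is copied to the slaves in section III.

```bash
#!/bin/bash
# Sketch (hypothetical helper): unpack a Hadoop tarball into a directory and
# give the unpacked tree to the given user and group.
install_hadoop_tarball() {
    local tarball="$1" dest="$2" user="$3" group="$4"
    local top
    # Assumes the archive unpacks to a directory named like the file,
    # e.g. hadoop-2.2.0.tar.gz -> hadoop-2.2.0/
    top=$(basename "$tarball" .tar.gz)
    mkdir -p "$dest" || return 1
    tar -xzf "$tarball" -C "$dest" || return 1
    [ -d "$dest/$top" ] || { echo "expected $dest/$top after extraction" >&2; return 1; }
    chown -R "$user:$group" "$dest/$top"
}

# Usage as root on master:
#   install_hadoop_tarball /home/hadoop-2.2.0.tar.gz /home/hdtest hdtest hdtest
```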

3. Install the JDK

This guide uses JDK 1.7: download jdk1.7-linux from the official website, copy it to every server and install it there.

Install it as root:

rpm -ivh jdk-7u67-linux-x64.rpm

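4. Configure environment variables

Hadoop is installed as the hdtest user in this guide, so the environment must be configured for hdtest.

Set the environment variables on the master and on every slave node.

4.1 Configure the Java environment variables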

[root@master ~]# find / -name java

………………

/usr/java/jdk1.7.0_67/bin/java

……………………

[root@master home]# su - hdtest

[hdtest@master ~]$ cat .bash_profile

# .bash_profile

…………

PATH=$PATH:$HOME/bin

export PATH

export JAVA_HOME=/usr/java/jdk1.7.0_67

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:./

export HADOOP_HOME=/home/hdtest/hadoop-2.2.0

export PATH=$PATH:$HADOOP_HOME/bin/

export JAVA_LIBRARY_PATH=/home/hdtest/hadoop-2.2.0/lib/native
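After editing the file, run source ~/.bash_profile (or log in again). The check below is a hypothetical helper, not part of Hadoop, that confirms both variables point at real installations. On the slaves the HADOOP_HOME check will only pass once the Hadoop directory has been copied over in section III.

```bash
#!/bin/bash
# Sketch (hypothetical helper): confirm that JAVA_HOME and HADOOP_HOME from
# ~/.bash_profile point at real installations. Prints each problem to stderr
# and returns non-zero if any check fails.
check_hadoop_env() {
    local rc=0
    if [ ! -x "${JAVA_HOME:-}/bin/java" ]; then
        echo "JAVA_HOME has no bin/java: '${JAVA_HOME:-}'" >&2
        rc=1
    fi
    if [ ! -x "${HADOOP_HOME:-}/bin/hadoop" ]; then
        echo "HADOOP_HOME has no bin/hadoop: '${HADOOP_HOME:-}'" >&2
        rc=1
    fi
    return "$rc"
}

# Usage as hdtest:
#   source ~/.bash_profile && check_hadoop_env && java -version
```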

4.2 Configure passwordless SSH trust

Generate an SSH key pair as the hdtest user on every node:

[hdtest@master .ssh]$ ssh-keygen -t rsa

[hdtest@slave1 .ssh]$ ssh-keygen -t rsa

[hdtest@slave2 .ssh]$ ssh-keygen -t rsa

[hdtest@slave3 .ssh]$ ssh-keygen -t rsa

[hdtest@slave4 .ssh]$ ssh-keygen -t rsa

[hdtest@slave2 .ssh]$ ll

total 16

-rw------- 1 hdtest hdtest 1675 Sep 4 14:53 id_rsa

-rw-r--r-- 1 hdtest hdtest 395 Sep 4 14:53 id_rsa.pub

-rw-r--r-- 1 hdtest hdtest 783 Sep 4 14:58 known_hosts

Copy the public key generated on each node to one machine (here, master) and merge them there.

[hdtest@slave1 .ssh]$ scp id_rsa.pub 192.168.200.2:/home/hdtest/.ssh/slave1.pub

[hdtest@slave2 .ssh]$ scp id_rsa.pub 192.168.200.2:/home/hdtest/.ssh/slave2.pub

[hdtest@slave3 .ssh]$ scp id_rsa.pub 192.168.200.2:/home/hdtest/.ssh/slave3.pub

[hdtest@slave4 .ssh]$ scp id_rsa.pub 192.168.200.2:/home/hdtest/.ssh/slave4.pub

[hdtest@master .ssh]$ cat *.pub >>authorized_keys

Copy the authorized_keys file created on master to every other machine.

scp authorized_keys slave1:/home/hdtest/.ssh/

scp authorized_keys slave2:/home/hdtest/.ssh/

scp authorized_keys slave3:/home/hdtest/.ssh/

scp authorized_keys slave4:/home/hdtest/.ssh/

Set the file permissions on all nodes:

[hdtest@master ~]$ chmod 700 .ssh/

[hdtest@master .ssh]$ chmod 600 authorized_keys

Once these steps are done, test the connections:

[hdtest@master .ssh]$ ssh slave1

Last login: Thu Sep 4 15:58:39 2014 from master

[hdtest@slave1 ~]$ ssh slave3

Last login: Thu Sep 4 15:58:42 2014 from master

[hdtest@slave3 ~]$

If you can log in to every server over SSH without typing a password, the configuration is complete.
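When a node still asks for a password, over-permissive modes on ~/.ssh or authorized_keys are a common cause. A sketch of a check for the 700/600 modes set above, assuming GNU stat (as on RedHat); check_ssh_perms is a hypothetical name. The usage loop relies on ssh -o BatchMode=yes, which makes ssh fail instead of prompting, so any host that still needs a password (or whose host key is not yet in known_hosts) is reported.

```bash
#!/bin/bash
# Sketch (hypothetical helper): verify ~/.ssh is mode 700 and authorized_keys
# is mode 600. Prints the first problem found and returns non-zero.
check_ssh_perms() {
    local dir="${1:-$HOME/.ssh}"
    local d_mode k_mode
    d_mode=$(stat -c '%a' "$dir" 2>/dev/null) \
        || { echo "missing: $dir" >&2; return 1; }
    k_mode=$(stat -c '%a' "$dir/authorized_keys" 2>/dev/null) \
        || { echo "missing: $dir/authorized_keys" >&2; return 1; }
    [ "$d_mode" = "700" ] || { echo "$dir has mode $d_mode, expected 700" >&2; return 1; }
    [ "$k_mode" = "600" ] || { echo "authorized_keys has mode $k_mode, expected 600" >&2; return 1; }
}

# Usage as hdtest: check each node, then try every host non-interactively.
#   check_ssh_perms ~/.ssh
#   for h in master slave1 slave2 slave3 slave4; do
#       ssh -o BatchMode=yes "$h" hostname || echo "passwordless login to $h failed"
#   done
```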

III. Install Hadoop

Before installing Hadoop, create a few directories:

[hdtest@master ~]$ pwd

/home/hdtest

mkdir dfs/name -p

mkdir dfs/data -p

mkdir mapred/local -p

mkdir mapred/system

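1. Edit the configuration files

Every server needs the same configuration, so configure one machine and copy the files to the others. On every machine, core-site.xml and mapred-site.xml (and likewise yarn-site.xml) refer to the master server's hostname, because the master is the entry point to Hadoop.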

[hdtest@master hadoop]$ pwd

/home/hdtest/hadoop-2.2.0/etc/hadoop

(1) core-site.xml

[hdtest@master hadoop]$ cat core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>io.native.lib.available</name>

<value>true</value>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://master:9000</value>

<final>true</final>

</property>

</configuration>

(2) hdfs-site.xml

[hdtest@master hadoop]$ cat hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hdtest/dfs/name</value>

<description>Determines where on the local filesystem the DFS name node should store the name table. If this is a comma-delimited list of directories, then the name table is replicated in all of the directories, for redundancy.</description>

<final>true</final>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hdtest/dfs/data</value>

<description>Determines where on the local filesystem a DFS data node should store its blocks. If this is a comma-delimited list of directories, then data will be stored in all named directories, typically on different devices.</description>

<final>true</final>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

<description>Number of block replicas</description>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

</configuration>

(3) mapred-site.xml (Hadoop 2.2.0 ships only mapred-site.xml.template; copy it to mapred-site.xml first)

[hdtest@master hadoop]$ cat mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapred.job.tracker</name>

<value>hdfs://master:9001</value>

<final>true</final>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>1536</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx1024M</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>3072</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx2560M</value>

</property>

<property>

<name>mapreduce.task.io.sort.mb</name>

<value>512</value>

</property>

<property>

<name>mapreduce.task.io.sort.factor</name>

<value>100</value>

</property>

<property>

<name>mapreduce.reduce.shuffle.parallelcopies</name>

<value>50</value>

</property>

<property>

<name>mapred.system.dir</name>

<value>file:/home/hdtest/mapred/system</value>

<final>true</final>

</property>

<property>

<name>mapred.local.dir</name>

<value>file:/home/hdtest/mapred/local</value>

</property>

</configuration>
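Each -Xmx value above must stay below the container size configured next to it (mapreduce.map.memory.mb and mapreduce.reduce.memory.mb), because the JVM needs memory beyond its heap and the NodeManager may kill a container that exceeds its limit. A minimal sketch that checks such a pair; the helper names are hypothetical and only M and G suffixes are parsed.

```bash
#!/bin/bash
# Sketch (hypothetical helpers): check that a task JVM heap (-Xmx) is smaller
# than its YARN container size in MB.

# Convert an -Xmx value such as -Xmx1024M, -Xmx1024m, -Xmx2g or -Xmx2G to MB.
xmx_to_mb() {
    local v="${1#-Xmx}"
    case "$v" in
        *[gG]) echo $(( ${v%[gG]} * 1024 )) ;;
        *[mM]) echo "${v%[mM]}" ;;
        *) return 1 ;;  # other units are not handled in this sketch
    esac
}

# Print the heap/container ratio; succeed only if heap < container.
check_heap_fits() {
    local container_mb="$1" heap_mb
    heap_mb=$(xmx_to_mb "$2") || { echo "unsupported value: $2" >&2; return 1; }
    echo "heap ${heap_mb} MB / container ${container_mb} MB = $(( heap_mb * 100 / container_mb ))%"
    [ "$heap_mb" -lt "$container_mb" ]
}

# Usage with the values from mapred-site.xml:
#   check_heap_fits 1536 -Xmx1024M    # map tasks
#   check_heap_fits 3072 -Xmx2560M    # reduce tasks
```

With the values above, the map heap is 66% of its 1536 MB container and the reduce heap 83% of its 3072 MB container.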

(4) yarn-site.xml

[hdtest@master hadoop]$ cat yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8080</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8081</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8082</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<!-- Site specific YARN configuration properties -->

</configuration>

(5) Environment scripts

In hadoop-env.sh, yarn-env.sh and mapred-env.sh, set the JDK path:

export JAVA_HOME=/usr/java/jdk1.7.0_67
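Setting the same line in three files by hand is easy to get wrong, so the edit can be scripted. A sketch assuming each script contains at most one (possibly commented-out) export JAVA_HOME= line; set_java_home is a hypothetical name. Running it once on master before the directory is copied means the slaves receive the setting too.

```bash
#!/bin/bash
# Sketch (hypothetical helper): set JAVA_HOME in hadoop-env.sh, yarn-env.sh and
# mapred-env.sh inside one configuration directory. An existing (possibly
# commented-out) "export JAVA_HOME=" line is replaced; otherwise one is appended.
set_java_home() {
    local conf_dir="$1" jdk="$2" f
    for f in hadoop-env.sh yarn-env.sh mapred-env.sh; do
        [ -f "$conf_dir/$f" ] || { echo "skip: $conf_dir/$f not found" >&2; continue; }
        if grep -q '^[#[:space:]]*export JAVA_HOME=' "$conf_dir/$f"; then
            sed -i "s|^[#[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$conf_dir/$f"
        else
            echo "export JAVA_HOME=$jdk" >> "$conf_dir/$f"
        fi
    done
}

# Usage as hdtest on master:
#   set_java_home /home/hdtest/hadoop-2.2.0/etc/hadoop /usr/java/jdk1.7.0_67
```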

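2. Hadoop cluster node files

These files only need to be configured on the NameNode machine. In this cluster the master runs only the NameNode: it is not listed in slaves, so it does not also act as a DataNode. In general the NameNode should have a dedicated machine and should not double as a DataNode.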

[hdtest@master hadoop]$ pwd

/home/hdtest/hadoop-2.2.0/etc/hadoop

[hdtest@master hadoop]$ cat masters

192.168.200.2

[hdtest@master hadoop]$ cat slaves

192.168.200.3

192.168.200.4

192.168.200.5

192.168.200.6

3. Copy the Hadoop directory

After the configuration above is complete, distribute the Hadoop directory to every slave node.

[hdtest@master ~]$ scp -r hadoop-2.2.0 slave1:/home/hdtest/

[hdtest@master ~]$ scp -r hadoop-2.2.0 slave2:/home/hdtest/

[hdtest@master ~]$ scp -r hadoop-2.2.0 slave3:/home/hdtest/

[hdtest@master ~]$ scp -r hadoop-2.2.0 slave4:/home/hdtest/

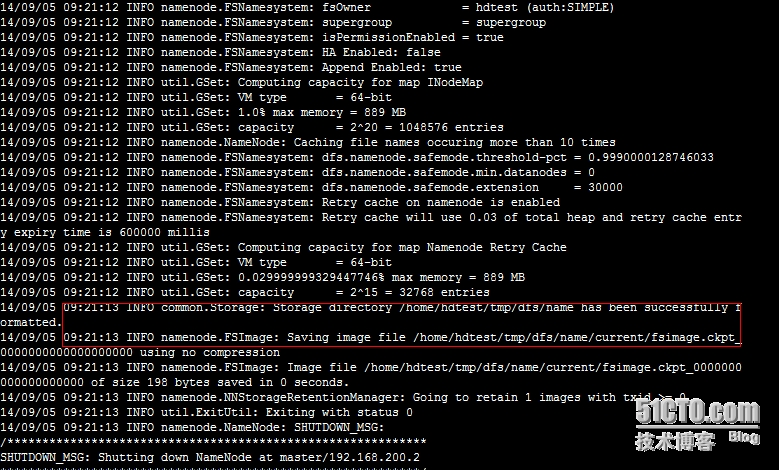
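With more slaves, the per-node scp commands can be driven from the slaves file configured above. A sketch; distribute_hadoop and its DRY_RUN switch are hypothetical, and the default source and destination paths are the ones used in this guide.

```bash
#!/bin/bash
# Sketch (hypothetical helper): copy the configured Hadoop directory to every
# host in a slaves file. Blank lines and lines starting with "#" are skipped;
# DRY_RUN=1 only prints the scp commands instead of running them.
distribute_hadoop() {
    local slaves_file="$1" src="${2:-$HOME/hadoop-2.2.0}" dest="${3:-/home/hdtest/}"
    local host
    grep -Ev '^[[:space:]]*(#|$)' "$slaves_file" | while read -r host; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "scp -r $src $host:$dest"
        else
            # </dev/null keeps scp/ssh from consuming the host list on stdin
            scp -r "$src" "$host:$dest" </dev/null || echo "copy to $host failed" >&2
        fi
    done
}

# Usage as hdtest on master:
#   DRY_RUN=1 distribute_hadoop ~/hadoop-2.2.0/etc/hadoop/slaves   # preview
#   distribute_hadoop ~/hadoop-2.2.0/etc/hadoop/slaves
```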

4. Format the NameNode

Run the following commands on the master node to format it:

[hdtest@master bin]$ pwd

/home/hdtest/hadoop-2.2.0/bin

[hdtest@master bin]$ ./hadoop namenode -format

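When you format a NameNode that has already been formatted, the system asks:

Re-format filesystem in /home/hadoop/tmp/dfs/name ? (Y or N)

You must answer with an uppercase Y. A lowercase y is not rejected as invalid input, but the format then fails.

Output like the following indicates that the format succeeded.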

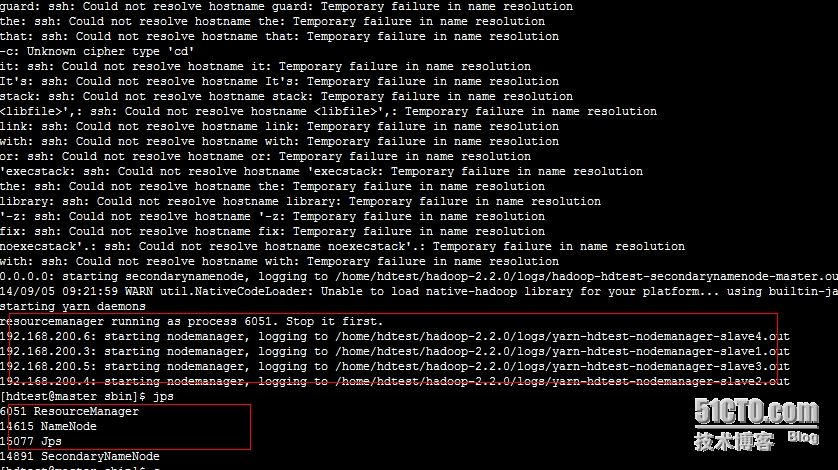

4. 启动hadoop服务

使用以下命令启动hadoop服务,只需在namenode节点启动。

[hdtest@master sbin]$ pwd

/home/hdtest/hadoop-2.2.0/sbin

[hdtest@master sbin]$ ./start-all.sh (stop-all.sh停止服务)

出现以下字符表示启动成功,并可以使用jps命令进行验证。

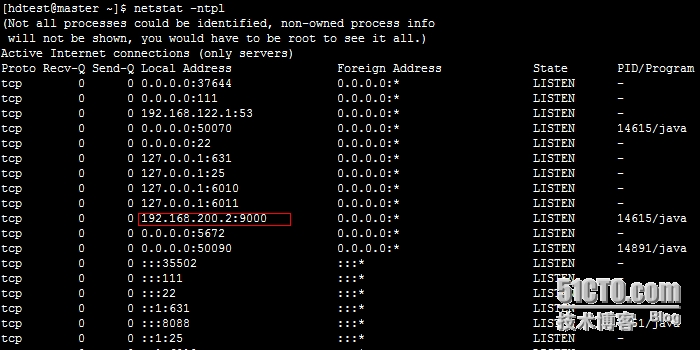

5. 验证测试

1. Verify from the command line

(1) Check whether the ports are listening

[hdtest@master ~]$ netstat -ntpl
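Rather than reading the whole listing, the ports this configuration should open can be checked explicitly. A sketch; missing_ports is a hypothetical helper that parses the standard netstat -ntpl columns. The port list in the usage line comes from core-site.xml (9000), yarn-site.xml (8080 to 8082) and the two web UIs used in the browser check.

```bash
#!/bin/bash
# Sketch (hypothetical helper): print each expected port that has no LISTEN
# socket and return non-zero if any is missing. Reads "netstat -ntpl" on stdin.
missing_ports() {
    local listing port rc=0
    listing=$(cat)
    for port in "$@"; do
        # Field 4 is the local address (e.g. 0.0.0.0:50070), field 6 the state.
        if ! printf '%s\n' "$listing" | awk -v p=":$port" '
                $6 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p { found = 1 }
                END { exit !found }'; then
            echo "$port"
            rc=1
        fi
    done
    return "$rc"
}

# Usage on master (on a slave, check the DataNode port 50010 instead):
#   netstat -ntpl | missing_ports 9000 50070 8080 8081 8082 8088
```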

(2) Check the cluster status with hadoop dfsadmin -report

[hdtest@master sbin]$ hadoop dfsadmin -report

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /home/hdtest/hadoop-2.2.0/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It's highly recommended that you fix the library with 'execstack -c <libfile>', or link it with '-z noexecstack'.

14/09/05 10:48:23 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Configured Capacity: 167811284992 (156.29 GB)

Present Capacity: 137947226112 (128.47 GB)

DFS Remaining: 137947127808 (128.47 GB)

DFS Used: 98304 (96 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Datanodes available: 4 (4 total, 0 dead)

Live datanodes:

Name: 192.168.200.5:50010 (slave3)

Hostname: slave3

Decommission Status : Normal

Configured Capacity: 41952821248 (39.07 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 7465213952 (6.95 GB)

DFS Remaining: 34487582720 (32.12 GB)

DFS Used%: 0.00%

DFS Remaining%: 82.21%

Last contact: Fri Sep 05 10:48:23 CST 2014

Name: 192.168.200.3:50010 (slave1)

Hostname: slave1

Decommission Status : Normal

Configured Capacity: 41952821248 (39.07 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 7465467904 (6.95 GB)

DFS Remaining: 34487328768 (32.12 GB)

DFS Used%: 0.00%

DFS Remaining%: 82.21%

Last contact: Fri Sep 05 10:48:24 CST 2014

Name: 192.168.200.6:50010 (slave4)

Hostname: slave4

Decommission Status : Normal

Configured Capacity: 41952821248 (39.07 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 7467925504 (6.96 GB)

DFS Remaining: 34484871168 (32.12 GB)

DFS Used%: 0.00%

DFS Remaining%: 82.20%

Last contact: Fri Sep 05 10:48:24 CST 2014

Name: 192.168.200.4:50010 (slave2)

Hostname: slave2

Decommission Status : Normal

Configured Capacity: 41952821248 (39.07 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 7465451520 (6.95 GB)

DFS Remaining: 34487345152 (32.12 GB)

DFS Used%: 0.00%

DFS Remaining%: 82.21%

Last contact: Fri Sep 05 10:48:22 CST 2014

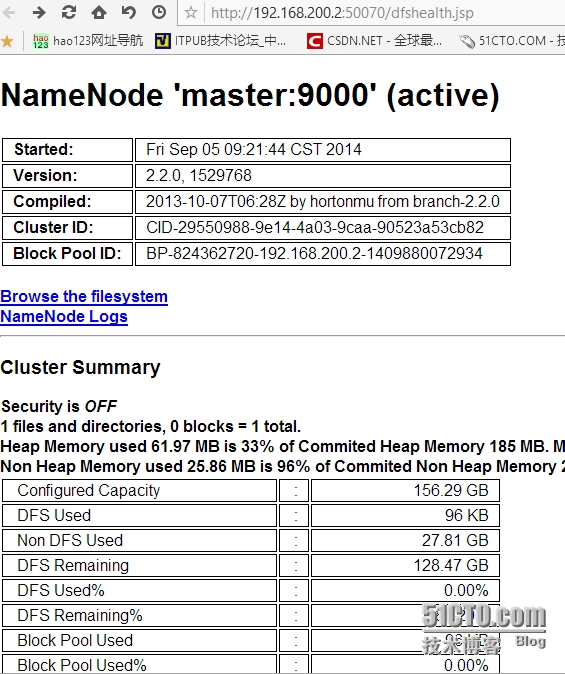
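For scripted checks, for example after restarting the cluster, the live DataNode count can be read from the same report. A sketch assuming the Hadoop 2.2.0 report format shown above (other versions may word the summary line differently); check_live_datanodes is a hypothetical helper. The usage line calls hdfs dfsadmin -report, the form recommended by the deprecation warning in the output.

```bash
#!/bin/bash
# Sketch (hypothetical helper): read "dfsadmin -report" output on stdin, print
# the DataNode summary line and fail if fewer DataNodes are live than expected.
check_live_datanodes() {
    local expected="$1" line live
    line=$(grep -m1 '^Datanodes available:')
    # e.g. "Datanodes available: 4 (4 total, 0 dead)" -> third field is 4
    live=$(printf '%s\n' "$line" | awk '{ print $3 }')
    if [ -z "$live" ]; then
        echo "no 'Datanodes available' line found" >&2
        return 1
    fi
    echo "$line"
    [ "$live" -ge "$expected" ]
}

# Usage on master (this cluster plans 4 DataNodes):
#   hdfs dfsadmin -report 2>/dev/null | check_live_datanodes 4
```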

2. Browser access

Open the HDFS NameNode web UI at http://192.168.200.2:50070/

and the YARN ResourceManager web UI at http://192.168.200.2:8088/cluster

This article originally appeared on the “跃跃之鸟” blog; please retain this source: http://jxplpp.blog.51cto.com/4455043/1561146
