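Building a highly available MySQL cluster with MMM

These are reading notes on section 8.4 of the book 《循序渐进linux-第二版》 by 南非蚂蚁.

The MMM suite (Master-Master Replication Manager for MySQL)

The main functionality of the MMM suite is implemented by the following three scripts:

1) mmm_mond

The monitoring daemon. It runs on the management node, monitors all databases, and decides on and carries out role switching for all nodes.

2) mmm_agentd

The agent daemon. It runs on every MySQL server, performs the checks used for monitoring, and applies simple remote service settings.

3) mmm_control

A simple management script used to view and manage the cluster's running state and to manage the mmm_mond process.

MMM is not well suited to environments that demand strong data safety and also have heavy read and write traffic.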

================================================

8.4.2 MMM典型应用方案

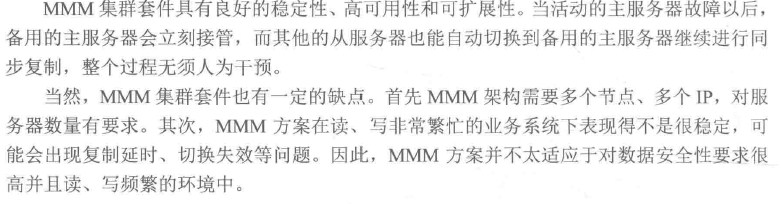

MMM双Master节点应用架构

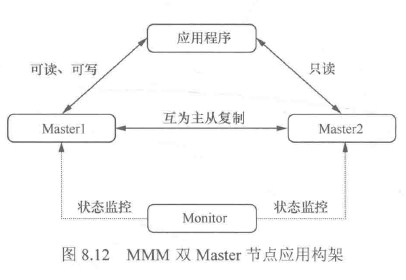

在双Master节点的基础上,增加多个Slave节点,即可实现双主多从节点应用架构

双主多从节点的MYSQL架构适合读查询量非常大的业务环境,通过MMM提供的读IP和写IP可以轻松实现MYSQL的读写、分离架构

================================================

8.4.3 MMM高可用MYSQL方案架构图

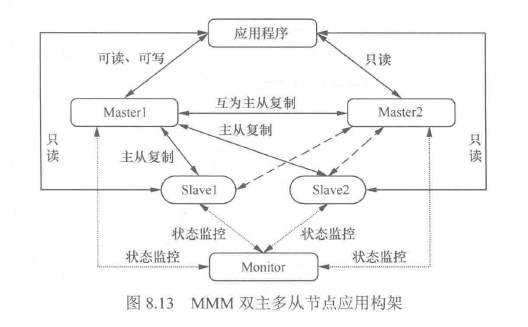

双主双从的MYSQL高可用集群架构

服务器配置环境如表:

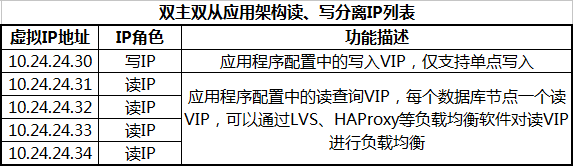

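Read and write IP list for the MMM dual-master, dual-slave architecture, as configured in mmm_common.conf in section 8.4.4: write IP 10.24.24.30; read IPs 10.24.24.31, 10.24.24.32, 10.24.24.33, 10.24.24.34.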

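8.4.4 Installing and configuring MMM

On the four master and slave nodes, install MySQL with yum and set a password.

# yum -y install mysql mysql-server

Start MySQL:

# /etc/init.d/mysqld start

Set the MySQL root password (jzh0024):

# mysql_secure_installation

The initial password is empty; answer y to every prompt.

MySQL is now installed.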

-----------------------------

1. Edit the MySQL configuration file and set the read_only parameter on every MySQL host.

In /etc/my.cnf, add the following to the [mysqld] section:

server-id = 1

log-bin=mysql-bin

relay-log = mysql-relay-bin

replicate-wild-ignore-table=mysql.%

replicate-wild-ignore-table=test.%

replicate-wild-ignore-table=information_schema.%

read_only=1

The server-id must differ on each node:

Master1: server-id = 1

Master2: server-id = 2

Slave1: server-id = 3

Slave2: server-id = 4
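
Because only server-id changes from node to node, the fragment can be generated rather than typed four times. The following is a minimal sketch, not part of the original notes; the function name mysqld_fragment is hypothetical, and the settings are the ones listed above.

```shell
#!/bin/sh
# Print the [mysqld] replication settings for one node.
# $1 = server-id, which must be unique per node (1..4 in this article).
# mysqld_fragment is a hypothetical helper name used for illustration.
mysqld_fragment() {
    cat <<EOF
server-id = $1
log-bin = mysql-bin
relay-log = mysql-relay-bin
replicate-wild-ignore-table = mysql.%
replicate-wild-ignore-table = test.%
replicate-wild-ignore-table = information_schema.%
read_only = 1
EOF
}

# Example: print the fragment for Slave2 (server-id 4).
mysqld_fragment 4
```

The output still has to be pasted into the [mysqld] section of /etc/my.cnf by hand.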

Restart MySQL:

# /etc/init.d/mysqld restart

-----------------------------

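1. Installing the MMM suite

Install the MMM suite with yum. Every node needs the EPEL yum repository.

# cd /server/tools/

Upload the epel-release-6-8.noarch.rpm package, then install it:

# rpm -Uvh epel-release-6-8.noarch.rpm

On the monitor node, run:

[root@Monitor tools]# yum -y install mysql-mmm*

The four MySQL database nodes only need mysql-mmm-agent:

[root@Master1 tools]# yum -y install mysql-mmm-agent

After installation, check the installed MMM version: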

[root@Monitor tools]# rpm -qa|grep mysql-mmm

mysql-mmm-2.2.1-2.el6.noarch

mysql-mmm-tools-2.2.1-2.el6.noarch

mysql-mmm-monitor-2.2.1-2.el6.noarch

mysql-mmm-agent-2.2.1-2.el6.noarch

The MMM suite is now installed.

-------------------------------------

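2. Configuring the MMM suite

Before configuring MMM, set up master-master replication between Master1 and Master2,

and master-slave replication from Master1 to Slave1 and Slave2.

I. Configure master-master replication between Master1 and Master2

On Master1, create a database and a table to test replication:

mysql> create database master001;

mysql> use master001;

Create the table:

mysql> create table permaster001(member_no char(9) not null,name char(5),birthday date,exam_score tinyint,primary key(member_no));

Inspect the table structure:

mysql> desc permaster001;

On Master1, lock the tables and back up the database:

mysql> flush tables with read lock;

Query OK, 0 rows affected (0.00 sec)

Do not close this terminal, or the lock is released. Open a new terminal to back up the data, or use mysqldump for the backup.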

# cd /var/lib/

# tar zcvf mysqlmaster1.tar.gz mysql

# scp -P50024 mysqlmaster1.tar.gz root@10.24.24.21:/var/lib/

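root@10.24.24.21's password:

mysqlmaster1.tar.gz 100% 214KB 213.9KB/s 00:00

Note: root login over SSH must be allowed on Master2 for this step.

# vim /etc/ssh/sshd_config

#PermitRootLogin no

Then restart the SSH service:

[root@Master1 lib]# /etc/init.d/sshd restart

After the data has been transferred to Master2, restart the database on Master1 and then on Master2: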

[root@Master1 lib]# /etc/init.d/mysqld restart

Stopping mysqld: [ OK ]

Starting mysqld: [ OK ]

[root@Master2 tools]# /etc/init.d/mysqld restart

Stopping mysqld: [ OK ]

Starting mysqld: [ OK ]

3. Create the replication user and grant privileges

On Master1, create the replication user:

mysql> grant replication slave on *.* to 'repl_user'@'10.24.24.21' identified by 'repl_password';

Query OK, 0 rows affected (0.00 sec)

Flush the privilege tables:

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql> show master status;

+------------------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+------------------+----------+--------------+------------------+

| mysql-bin.000002 | 345 | | |

+------------------+----------+--------------+------------------+

1 row in set (0.00 sec)

Then, on Master2, set Master1 as its master:

# cd /var/lib/

# tar xf mysqlmaster1.tar.gz

mysql> change master to \

-> master_host='10.24.24.20',

-> master_user='repl_user',

-> master_password='repl_password',

-> master_log_file='mysql-bin.000002',

-> master_log_pos=345;

Pay attention to master_log_file and master_log_pos: both values come from the show master status output just queried on Master1.
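
Copying the file name and position by hand is easy to get wrong. As a minimal sketch (not from the original notes), the statement can be assembled from one row of `mysql -N -B -e 'show master status'` output, which is tab-separated with File and Position as the first two fields. The function name build_change_master is hypothetical.

```shell
#!/bin/sh
# Build a CHANGE MASTER TO statement from one tab-separated row of
# `mysql -N -B -e 'show master status'` output read on stdin.
# $1 = master host, $2 = replication user, $3 = replication password.
# build_change_master is a hypothetical helper name.
build_change_master() {
    # \047 is the octal escape for a single quote inside the awk format.
    awk -v host="$1" -v user="$2" -v pass="$3" '
        NR == 1 {
            printf "CHANGE MASTER TO master_host=\047%s\047, master_user=\047%s\047, master_password=\047%s\047, master_log_file=\047%s\047, master_log_pos=%s;\n", host, user, pass, $1, $2
        }'
}

# Example using the values from this article (no server needed):
printf 'mysql-bin.000002\t345\t\t\n' |
    build_change_master 10.24.24.20 repl_user repl_password
```

The printed statement can then be reviewed and pasted into the mysql prompt on the slave.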

Start the slave threads on Master2 and check the slave status on DB2:

mysql> start slave;

Query OK, 0 rows affected (0.00 sec)

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 10.24.24.20

Master_User: repl_user

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000002

Read_Master_Log_Pos: 345

Relay_Log_File: mysql-relay-bin.000002

Relay_Log_Pos: 251

Relay_Master_Log_File: mysql-bin.000002

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

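Master-slave replication from Master1 to Master2 is now configured.

To verify data integrity, create a database or table yourself and check that it replicates.

---------------------------------------------

II. Configure master-slave replication from Master2 to Master1

On Master2, create the replication user:

mysql> grant replication slave on *.* to 'repl_user1'@'10.24.24.20' identified by 'repl_password1';

Flush the privilege tables:

mysql> flush privileges;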

Query OK, 0 rows affected (0.00 sec)

mysql> show master status;

+------------------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+------------------+----------+--------------+------------------+

| mysql-bin.000002 | 347 | | |

+------------------+----------+--------------+------------------+

1 row in set (0.00 sec)

On Master1, set Master2 as its master:

mysql> change master to \

-> master_host='10.24.24.21',

-> master_user='repl_user1',

-> master_password='repl_password1',

-> master_log_file='mysql-bin.000002',

-> master_log_pos=347;

Start the slave threads on Master1:

mysql> start slave;

Query OK, 0 rows affected (0.00 sec)

Check the slave status on Master1:

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 10.24.24.21

Master_User: repl_user1

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000002

Read_Master_Log_Pos: 347

Relay_Log_File: mysql-relay-bin.000002

Relay_Log_Pos: 251

Relay_Master_Log_File: mysql-bin.000002

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table: mysql.%,test.%,information_schema.%

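Slave_IO_Running and Slave_SQL_Running are both Yes, which shows that replication is running normally on DB1. The MySQL dual-master replication setup is complete.

To verify data integrity, create a database or table yourself and check that it replicates.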
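
The check on the two thread states can be scripted. The sketch below is an illustration rather than part of the original notes: it reads `show slave status\G` output on stdin and succeeds only when both threads report Yes. The function name slave_threads_ok is hypothetical.

```shell
#!/bin/sh
# Read `show slave status\G` output on stdin; return 0 only if both
# Slave_IO_Running and Slave_SQL_Running report Yes.
# slave_threads_ok is a hypothetical helper name.
slave_threads_ok() {
    status=$(cat)
    printf '%s\n' "$status" |
        grep -Eq '^[[:space:]]*Slave_IO_Running:[[:space:]]*Yes[[:space:]]*$' &&
    printf '%s\n' "$status" |
        grep -Eq '^[[:space:]]*Slave_SQL_Running:[[:space:]]*Yes[[:space:]]*$'
}

# Example with a captured fragment (no server needed):
slave_threads_ok <<'EOF' && echo "replication threads running"
Slave_IO_Running: Yes
Slave_SQL_Running: Yes
EOF
```

On a real node the input would come from something like `mysql -e 'show slave status\G'`, with credentials supplied as appropriate.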

-------------------------------------------------------

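III. Configure master-slave replication from Master1 to Slave1 and Slave2

Note that when Slave1 and Slave2 replicate from the master, "Master_Host" must be the Master node's physical IP address, not a virtual IP address.

On Master1, create a database and a table to test replication:

mysql> create database slave012;

mysql> use slave012;

Create the table:

mysql> create table persalve012(member_no char(9) not null,name char(5),birthday date,exam_score tinyint,primary key(member_no));

Inspect the table structure:

mysql> desc persalve012;

On Master1, lock the tables and back up the database:

mysql> flush tables with read lock;

Query OK, 0 rows affected (0.00 sec)

Do not close this terminal, or the lock is released. Open a new terminal to back up the data, or use mysqldump for the backup.

# cd /var/lib/

# tar zcvf mysqlslave12.tar.gz mysql

Send it to Slave1: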

[root@Master1 lib]# scp -P50024 mysqlslave12.tar.gz root@10.24.24.22:/var/lib/

The authenticity of host '[10.24.24.22]:50024 ([10.24.24.22]:50024)' can't be established.

RSA key fingerprint is 26:b4:7d:98:3e:ab:19:ba:08:c9:46:9b:fb:12:5d:72.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[10.24.24.22]:50024' (RSA) to the list of known hosts.

root@10.24.24.22's password:

mysqlslave12.tar.gz 100% 215KB 215.2KB/s 00:00

Send it to Slave2:

[root@Master1 lib]# scp -P50024 mysqlslave12.tar.gz root@10.24.24.23:/var/lib/

The authenticity of host '[10.24.24.23]:50024 ([10.24.24.23]:50024)' can't be established.

RSA key fingerprint is 26:b4:7d:98:3e:ab:19:ba:08:c9:46:9b:fb:12:5d:72.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[10.24.24.23]:50024' (RSA) to the list of known hosts.

root@10.24.24.23's password:

mysqlslave12.tar.gz 100% 215KB 215.2KB/s 00:00

Note: root login over SSH must be allowed on Slave1 and Slave2 for this step.

# vim /etc/ssh/sshd_config

#PermitRootLogin no

Then restart the SSH service:

[root@Master1 lib]# /etc/init.d/sshd restart

After the data has been transferred to Slave1 and Slave2, restart the database on Master1, Slave1, and Slave2 in turn:

[root@Master1 lib]# /etc/init.d/mysqld restart

Stopping mysqld: [ OK ]

Starting mysqld: [ OK ]

[root@Slave1 tools]# /etc/init.d/mysqld restart

Stopping mysqld: [ OK ]

Starting mysqld: [ OK ]

[root@Slave2 tools]# /etc/init.d/mysqld restart

Stopping mysqld: [ OK ]

Starting mysqld: [ OK ]

3. Create the replication user and grant privileges

On Master1, create the replication users for Slave1 and Slave2:

mysql> grant replication slave on *.* to 'repl_user'@'10.24.24.22' identified by 'repl_password';

mysql> grant replication slave on *.* to 'repl_user'@'10.24.24.23' identified by 'repl_password';

Flush the privilege tables:

mysql> flush privileges;

mysql> show master status;

+------------------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+------------------+----------+--------------+------------------+

| mysql-bin.000002 | 1296 | | |

+------------------+----------+--------------+------------------+

1 row in set (0.00 sec)

Then, on Slave1 and Slave2, set Master1 as the master:

# cd /var/lib/

# tar xf mysqlslave12.tar.gz

mysql> change master to \

-> master_host='10.24.24.20',

-> master_user='repl_user',

-> master_password='repl_password',

-> master_log_file='mysql-bin.000002',

-> master_log_pos=1296;

Pay attention to master_log_file and master_log_pos: both values come from the show master status output just queried on Master1.

Start the slave threads on Slave1 and Slave2 and check the slave status on each:

mysql> start slave;

Query OK, 0 rows affected (0.00 sec)

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 10.24.24.20

Master_User: repl_user

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000002

Read_Master_Log_Pos: 1296

Relay_Log_File: mysql-relay-bin.000002

Relay_Log_Pos: 251

Relay_Master_Log_File: mysql-bin.000002

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table: mysql.%,test.%,information_schema.%

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 1296

Relay_Log_Space: 406

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

1 row in set (0.00 sec)

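Master-slave replication from Master1 to Slave1 and Slave2 is now configured.

To verify data integrity, create a database or table yourself and check that it replicates.

Once all of the above is done, restart the MySQL service on every node.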

================================================

要配置MMM,需要现在所有mysql节点创建复制帐号之外的另外两个帐号

即monitor帐号和monitor agent帐号

monitor帐号是MMM管理服务器用来对所有mysql服务器做健康检查的

monitor agent帐号用来切换只读模式和同步

所有mysql节点上创建帐号并授权:

mysql> grant replication client on *.* to ‘mmm_monitor‘@‘10.24.24.%‘ identified by ‘monitor_password‘;

mysql> grant super,replication client,process on *.* to ‘mmm_agent‘@‘10.24.24.%‘ identified by ‘agent_password‘;

刷新授权表

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

通过yum安装MMM后,默认设置文件目录为/etc/mysql-mmm

4个配置文件:

mmm_mon.conf 仅在MMM管理端进行配置,主要用于配置一些监控参数;

mmm_common.conf 文件需要在所有的MMM集群节点进行配置,且内容完成一样,主要用于设置读、写节点的IP及配置虚拟IP;

mmm_agent.conf 也需要在所有mysql节点进行配置,用来设置每个节点的标识。

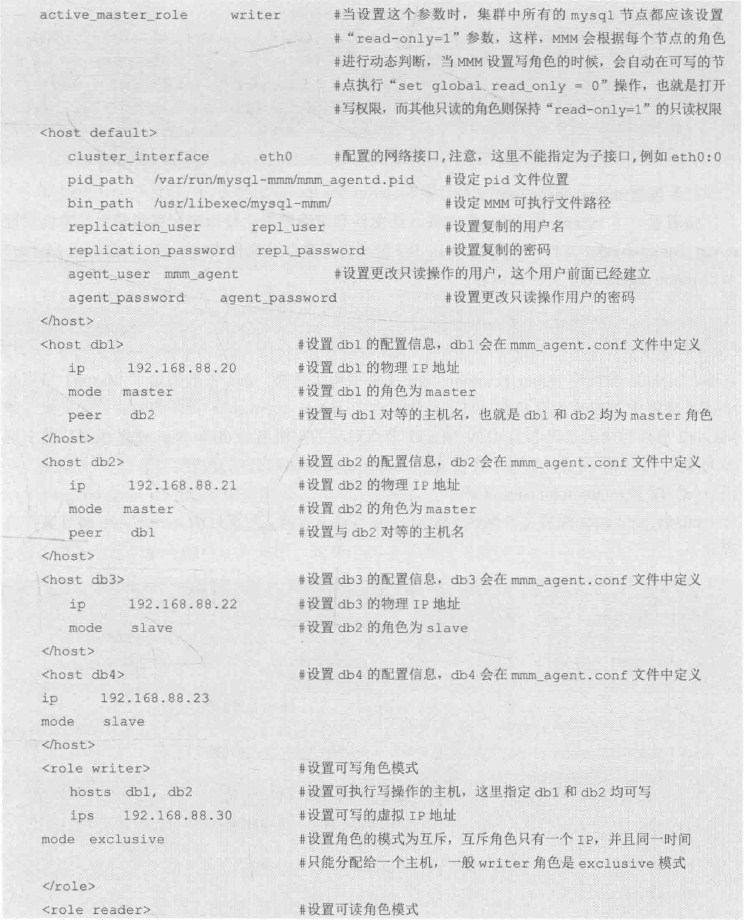

(1)Monitor MMM集群管理端和所有mysql节点配置/etc/mysql-mmm/mmm_common.conf 文件

# cp /etc/mysql-mmm/mmm_common.conf /etc/mysql-mmm/mmm_common.conf.bak

# vim /etc/mysql-mmm/mmm_common.conf

<<-------------------------------- code divider ------------------------------>>

active_master_role writer

<host default>

cluster_interface eth0

pid_path /var/run/mysql-mmm/mmm_agentd.pid

bin_path /usr/libexec/mysql-mmm/

replication_user repl_user

replication_password repl_password

agent_user mmm_agent

agent_password agent_password

</host>

<host db1>

ip 10.24.24.20

mode master

peer db2

</host>

<host db2>

ip 10.24.24.21

mode master

peer db1

</host>

<host db3>

ip 10.24.24.22

mode slave

</host>

<host db4>

ip 10.24.24.23

mode slave

</host>

<role writer>

hosts db1, db2

ips 10.24.24.30

mode exclusive

</role>

<role reader>

hosts db1, db2, db3, db4

ips 10.24.24.31, 10.24.24.32, 10.24.24.33, 10.24.24.34

mode balanced

</role>

<<-------------------------------- code divider ------------------------------>>

Parameter details

(2) On all MySQL nodes, configure mmm_agent.conf

# vim /etc/mysql-mmm/mmm_agent.conf

On the Master1 node:

include mmm_common.conf

this db1

On the Master2 node:

include mmm_common.conf

this db2

On the Slave1 node:

include mmm_common.conf

this db3

On the Slave2 node:

include mmm_common.conf

this db4
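
The four mmm_agent.conf files differ only in the `this` line, so the mapping from node to host name can be written down once. This is a minimal sketch under the article's naming (Master1 to Slave2, db1 to db4); the function names node_to_db and agent_conf are hypothetical.

```shell
#!/bin/sh
# Map a node name from this article to the host name declared in
# mmm_common.conf. node_to_db is a hypothetical helper name.
node_to_db() {
    case "$1" in
        Master1) echo db1 ;;
        Master2) echo db2 ;;
        Slave1)  echo db3 ;;
        Slave2)  echo db4 ;;
        *) echo "unknown node: $1" >&2; return 1 ;;
    esac
}

# Print the mmm_agent.conf body for one mmm_common.conf host name.
agent_conf() {
    printf 'include mmm_common.conf\nthis %s\n' "$1"
}

# Example: the file content for Slave1.
agent_conf "$(node_to_db Slave1)"
```

Redirecting the output to /etc/mysql-mmm/mmm_agent.conf on the matching node would reproduce the files shown above.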

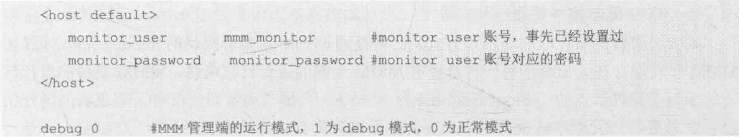

(3) On the MMM management node (monitor), configure mmm_mon.conf

# vim /etc/mysql-mmm/mmm_mon.conf

<<-------------------------------- code divider ------------------------------>>

include mmm_common.conf

<monitor>

ip 127.0.0.1

pid_path /var/run/mysql-mmm/mmm_mond.pid

bin_path /usr/libexec/mysql-mmm

status_path /var/lib/mysql-mmm/mmm_mond.status

ping_ips 10.24.24.1, 10.24.24.20, 10.24.24.21, 10.24.24.22, 10.24.24.23

flap_duration 3600

flap_count 3

auto_set_online 0

# The kill_host_bin does not exist by default, though the monitor will

# throw a warning about it missing. See the section 5.10 "Kill Host

# Functionality" in the PDF documentation.

#

# kill_host_bin /usr/libexec/mysql-mmm/monitor/kill_host

#

</monitor>

<host default>

monitor_user mmm_monitor

monitor_password monitor_password

</host>

debug 0

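<<-------------------------------- code divider ------------------------------>>

Parameter details

(4) Configure the following setting on every node in the MMM cluster

# vim /etc/default/mysql-mmm-agent

ENABLED=1

The four main MMM configuration files are now done. Copy mmm_common.conf from the MMM management node to each of the four MySQL nodes.

Upload the configuration file:

# cd /etc/mysql-mmm/

# rz

# chmod 640 mmm_common.conf

It is best to set the permissions of all MMM configuration files to 640; otherwise starting the MMM services may fail.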

=======================================================

8.4.5 MMM的管理

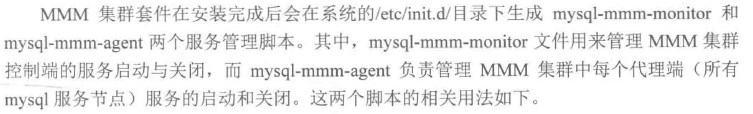

1. MMM集群服务管理

[root@Monitor ~]# /etc/init.d/mysql-mmm-monitor

Usage: /etc/init.d/mysql-mmm-monitor {start|stop|restart|condrestart|status}

[root@Master1 ~]# /etc/init.d/mysql-mmm-agent

Usage: /etc/init.d/mysql-mmm-agent {start|stop|restart|condrestart|status}

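Once the MMM cluster is configured, these two scripts are used to start it.

On the MMM management node, start the mysql-mmm-monitor service:

[root@Monitor ~]# /etc/init.d/mysql-mmm-monitor start

Starting MMM Monitor Daemon: [ OK ]

On each MySQL node, start the agent service in turn: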

[root@Master1 ~]# /etc/init.d/mysql-mmm-agent start

Starting MMM Agent Daemon: [ OK ]

--------------------------------------------

2. Basic MMM maintenance and management

<<-------------------------------- code divider ------------------------------>>

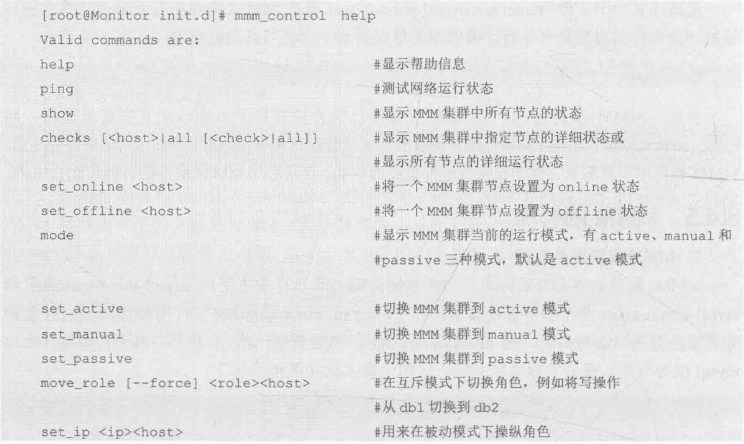

[root@Monitor ~]# mmm_control help

Valid commands are:

help - show this message

ping - ping monitor

show - show status

checks [<host>|all [<check>|all]] - show checks status

set_online <host> - set host <host> online

set_offline <host> - set host <host> offline

mode - print current mode.

set_active - switch into active mode.

set_manual - switch into manual mode.

set_passive - switch into passive mode.

move_role [--force] <role> <host> - move exclusive role <role> to host <host>

(Only use --force if you know what you are doing!)

set_ip <ip> <host> - set role with ip <ip> to host <host>

<<-------------------------------- code divider ------------------------------>>

The parameters are explained below:

Some common examples of MMM cluster maintenance:

1) View the cluster's running state

[root@Monitor mysql-mmm]# mmm_control show

db1(10.24.24.20) master/ONLINE. Roles: reader(10.24.24.33), writer(10.24.24.30)

db2(10.24.24.21) master/ONLINE. Roles: reader(10.24.24.34)

db3(10.24.24.22) slave/ONLINE. Roles: reader(10.24.24.31)

db4(10.24.24.23) slave/ONLINE. Roles: reader(10.24.24.32)
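
For scripting around failover tests it helps to extract which host currently holds the writer role. The following is a minimal sketch, not from the original notes, that parses the `mmm_control show` output format shown above; the function name current_writer is hypothetical.

```shell
#!/bin/sh
# Read `mmm_control show` output on stdin and print the name of the
# host whose role list contains writer(...), for example "db1".
# current_writer is a hypothetical helper name.
current_writer() {
    sed -n 's/^[[:space:]]*\([^(]*\)(.*writer(.*/\1/p'
}

# Example with the output captured above (no cluster needed):
current_writer <<'EOF'
db1(10.24.24.20) master/ONLINE. Roles: reader(10.24.24.33), writer(10.24.24.30)
db2(10.24.24.21) master/ONLINE. Roles: reader(10.24.24.34)
db3(10.24.24.22) slave/ONLINE. Roles: reader(10.24.24.31)
db4(10.24.24.23) slave/ONLINE. Roles: reader(10.24.24.32)
EOF
```

On the monitor node the same function could be fed with `mmm_control show | current_writer`.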

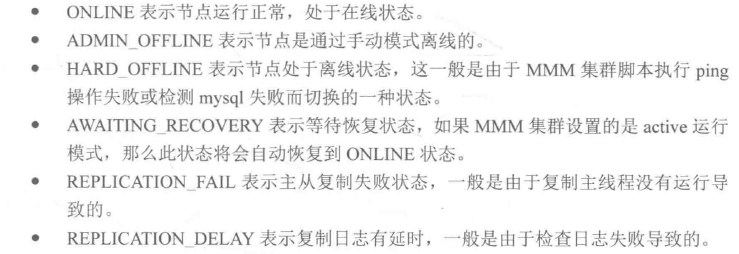

在MMM集群中,集群节点的状态有如下几种:

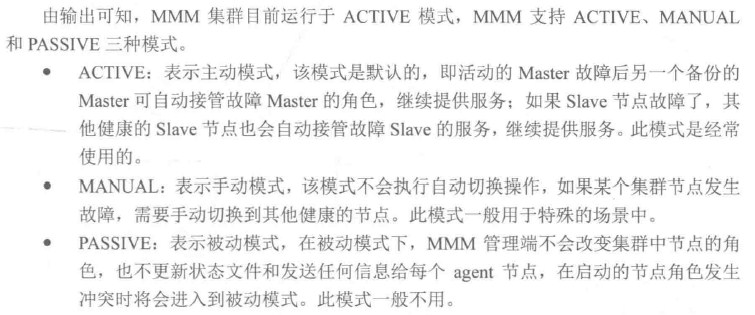

2)查看MMM集群目前处于什么运行模式

[root@Monitor mysql-mmm]# mmm_control mode

ACTIVE

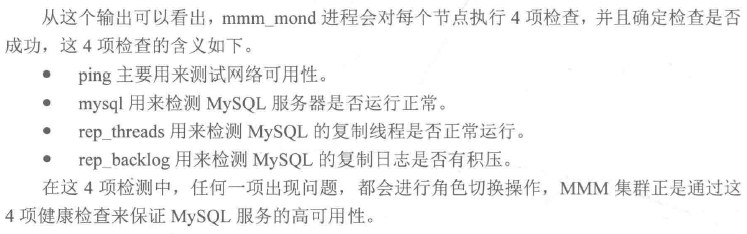

3) View the check status of all MMM cluster nodes

[root@Monitor mysql-mmm]# mmm_control checks all

db4 ping [last change: 2016/09/13 15:11:34] OK

db4 mysql [last change: 2016/09/13 15:11:34] OK

db4 rep_threads [last change: 2016/09/13 15:11:34] OK

db4 rep_backlog [last change: 2016/09/13 15:11:34] OK: Backlog is null

db2 ping [last change: 2016/09/13 15:11:34] OK

db2 mysql [last change: 2016/09/13 15:11:34] OK

db2 rep_threads [last change: 2016/09/13 15:11:34] OK

db2 rep_backlog [last change: 2016/09/13 15:11:34] OK: Backlog is null

db3 ping [last change: 2016/09/13 15:11:34] OK

db3 mysql [last change: 2016/09/13 15:11:34] OK

db3 rep_threads [last change: 2016/09/13 15:11:34] OK

db3 rep_backlog [last change: 2016/09/13 15:11:34] OK: Backlog is null

db1 ping [last change: 2016/09/13 15:11:34] OK

db1 mysql [last change: 2016/09/13 15:11:34] OK

db1 rep_threads [last change: 2016/09/13 15:11:34] OK

db1 rep_backlog [last change: 2016/09/13 15:11:34] OK: Backlog is null

4) View the check status of a single node

[root@Monitor mysql-mmm]# mmm_control checks db1

db1 ping [last change: 2016/09/13 15:11:34] OK

db1 mysql [last change: 2016/09/13 15:11:34] OK

db1 rep_threads [last change: 2016/09/13 15:11:34] OK

db1 rep_backlog [last change: 2016/09/13 15:11:34] OK: Backlog is null

=========================================================

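8.4.6 Testing MySQL high availability with MMM

1. Read/write splitting test

First, grant remote access on master1, master2, slave1, and slave2:

mysql> grant all on *.* to 'root'@'10.24.24.%' identified by 'jzh0024';

mysql> flush privileges;

mysql> select user,host from mysql.user;

Log in through the writable VIP, which lands on the Master1 node, then create a table mmm_test and insert one row: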

[root@mysql01 ~]# mysql -uroot -pjzh0024 -h 10.24.24.30

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 1701

Server version: 5.1.73-log Source distribution

mysql>

mysql> show variables like "%hostname%";

+---------------+---------+

| Variable_name | Value |

+---------------+---------+

| hostname | Master1 |

+---------------+---------+

1 row in set (0.00 sec)

mysql>

mysql> create database repldb;

Query OK, 1 row affected (0.00 sec)

mysql> use repldb;

Database changed

mysql> create table mmm_test(id int,email varchar(30));

Query OK, 0 rows affected (0.01 sec)

mysql> insert into mmm_test (id,email) values(186,"ywliyq@163.com");

Query OK, 1 row affected (0.00 sec)

mysql> select * from mmm_test;

+------+----------------+

| id | email |

+------+----------------+

| 186 | ywliyq@163.com |

+------+----------------+

1 row in set (0.00 sec)

Now log in to the Master2, slave1, and slave2 nodes and check whether the data has replicated.

mysql> show databases;

mysql> use repldb;

mysql> show tables;

mysql> select * from mmm_test;

The data has replicated to Master2, slave1, and slave2.

-------------------------------------------

Next, still from the remote MySQL client, log in to the MySQL cluster through a read-only VIP provided by MMM:

[root@mysql01 ~]# mysql -uroot -pjzh0024 -h 10.24.24.32

mysql> show variables like "%hostname%";

+---------------+--------+

| Variable_name | Value |

+---------------+--------+

| hostname | Slave1 |

+---------------+--------+

1 row in set (0.00 sec)

mysql> use repldb;

mysql> create table mmm_test1(id int,email varchar(100));

This test did not pass: there is a problem, because data could still be written.
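
A likely explanation is that the MySQL read_only setting does not restrict accounts holding the SUPER privilege, and the root account used for this test was granted all privileges on *.* earlier in this section. As a minimal sketch (not from the original notes), the grants of an account can be inspected to see whether it is exempt; the function name exempt_from_read_only is hypothetical.

```shell
#!/bin/sh
# Read `show grants` output on stdin; return 0 if any global (ON *.*)
# grant carries ALL PRIVILEGES or SUPER, which means read_only does
# not stop this account from writing.
# exempt_from_read_only is a hypothetical helper name.
exempt_from_read_only() {
    grep -Eiq '^GRANT (ALL PRIVILEGES|.*SUPER).* ON \*\.\* TO '
}

# Example with a grant line like the one created for root above:
printf "GRANT ALL PRIVILEGES ON *.* TO 'root'@'10.24.24.%%'\n" |
    exempt_from_read_only && echo "account bypasses read_only"
```

Repeating the write test with an account that has only ordinary privileges (for example select, insert, update, delete on repldb) would be expected to fail on a node where read_only is on.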

-------------------------------------------

2. Failover test

First check the current running state of the MMM cluster:

[root@Monitor mysql-mmm]# mmm_control show

db1(10.24.24.20) master/ONLINE. Roles: reader(10.24.24.33), writer(10.24.24.30)

db2(10.24.24.21) master/ONLINE. Roles: reader(10.24.24.34)

db3(10.24.24.22) slave/ONLINE. Roles: reader(10.24.24.31)

db4(10.24.24.23) slave/ONLINE. Roles: reader(10.24.24.32)

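Stop the MySQL service on the Master1 node, then check the MMM cluster status again.

This article comes from the blog “Linux运维的自我修养”; please keep this attribution: http://ywliyq.blog.51cto.com/11433965/1856974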