首页 > 代码库 > Memcached Server High availability

Memcached Server High availability

Memcached is a high performance centralized cache system that stores data as key/value pair. It is usually used as L2 cache that serves as a database front. However, memcached lack of features that typical no-sql store has:

Data is stored in memory only,there is no disk persistence etc, so data is lost on server failure

Memcached itself does not provide cluster support such as data partition and replication.

Without the above mentioned high availability features, critical business data can be compromised on memcached node failure. On those unfortunate events, request may directly hit databaseand incurs a heavy load.

Spymemcached client

This is probably the best memcached client.It has a lot of nice features such as java NIO, binary protocol support, customizable data serialization/deserialization, asynchronous API, data batching, failed node reconnection etc. It also provides some basic clustering features: When a memcached client is created, it passes a list of host address. Internally, itcreates a memcached connection that wraps multiple memcached nodes. Anabstraction called NodeLocator isused to select the primary node to perform the operation. The default implementation: ArrayModNodeLocator select the primary node based on hash(key)mod number of servers. If primary node fails, it will iterate through additional nodes to select a backup.

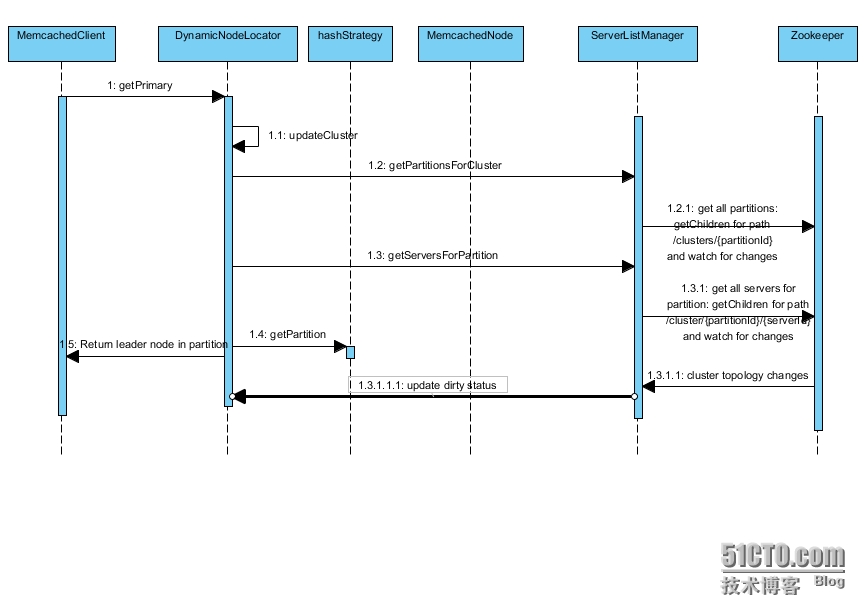

However, the static memcached node list lacks flexibility. With static memcached node list, we have to change the configuration manually and restart the memcached client, this is against the principle of seamlessly scalability. It is desirable that the client can detect cluster topology change automatically and adapt to it by creating/deleting memcachednode. This is the major issue that we want to address in this article.

Architecture:

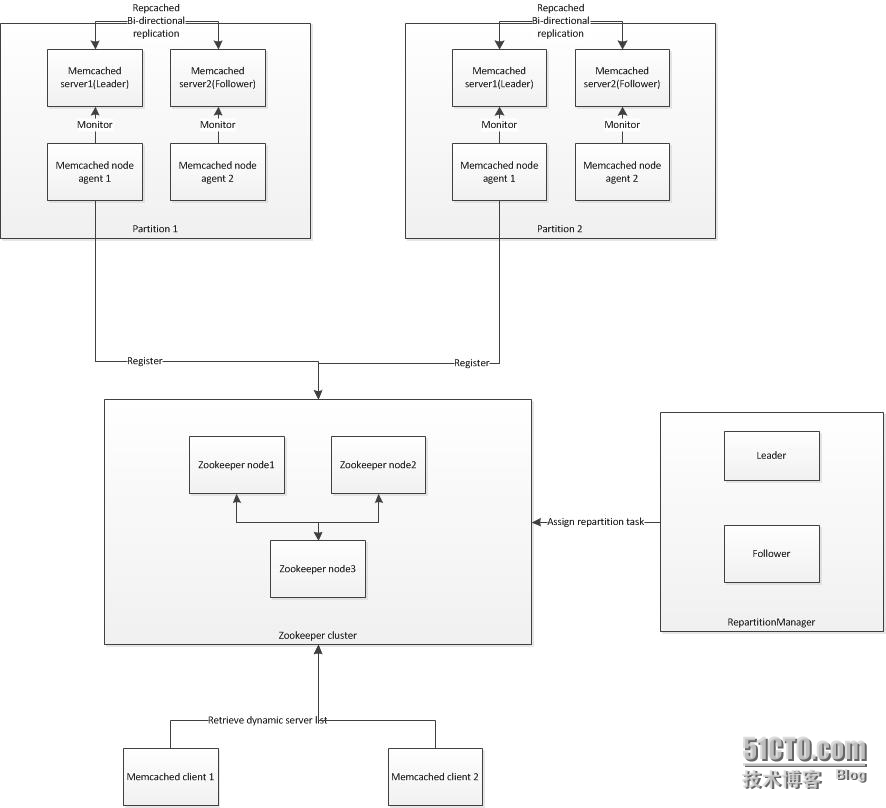

As the diagram shows:

MemcachedServer

A list of memcached servers in cluster arelogically divided into multiple partitions, each partition contains one leader server and multiple follower servers, bi-directional replication is enabled between the leader and follows via repcached -- an enhanced version of memcached with data replication capability. For any read/write request, a partition is first located based the key of the operation. The leader

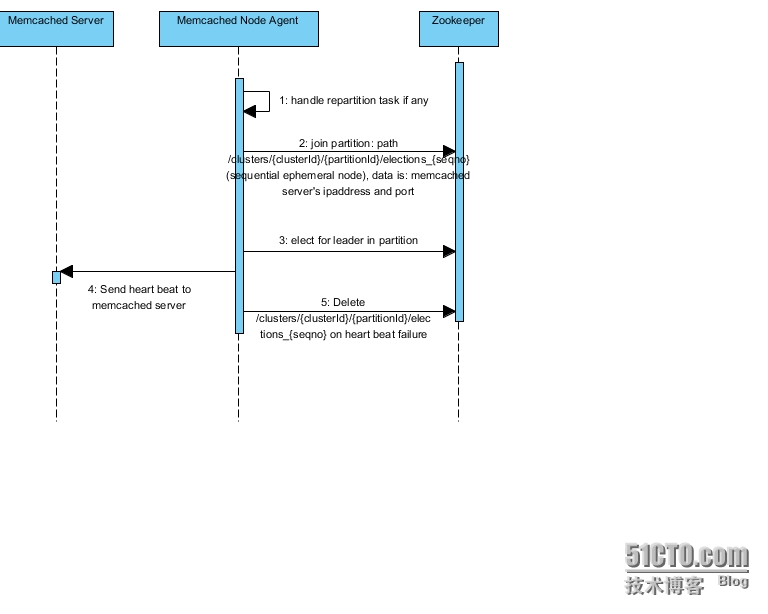

MemcachedNodeAgent

An agent is deployed on the same host as memcached server. It periodically sends heartbeat request to local memcached server to check its status. It also connects to zookeeper to register/unregister the memcached server on startup/shutdown or server failure. In addition, if the nodeAgent is the first one that joins the cluster, it handles data repartition automatically from a source memcached server. Data repartition is explained in the later section.

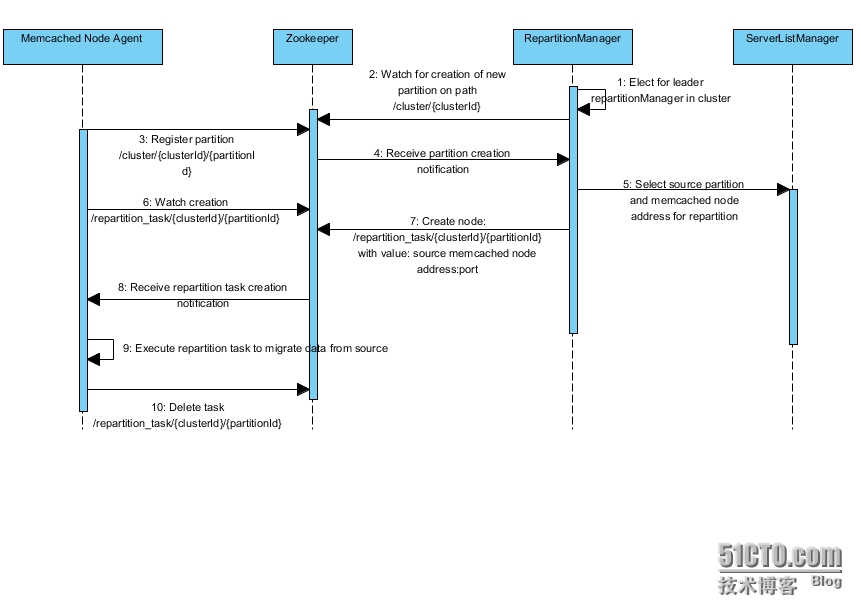

RepartitionManager

It connects to zookeeper and detects any newly created partition in the cluster. It selects a source partition and serverwhere data will be migrated from. It then creates a repartition task in zookeeper with pair (source server -> target partition). It does not execute the task though, so that the resource consuming task will not stress the repartitionManager. RepartitionManager also supports clustering with one active leader and multiple standby followers. It is a logical component that can be deployed on memcached server nodes or a dedicated server.

ServerListManager

The server list manager connects to zookeeper to retrieve cluster topology information. It also watches the clusterpath in zookeeper and receives notification on any cluster topology change and refreshes its internal data structure accordingly. This is a logical component. It is usually deployed together with the memcached client and repartitionManager, which leverage cluster topology information to perform their tasks

MemcachedClient

It extends existing spy memcachedclient,with enhancement on nodeLocator, and memcachedConnection that dynamically adapts to cluster topology change.

Data repartition:

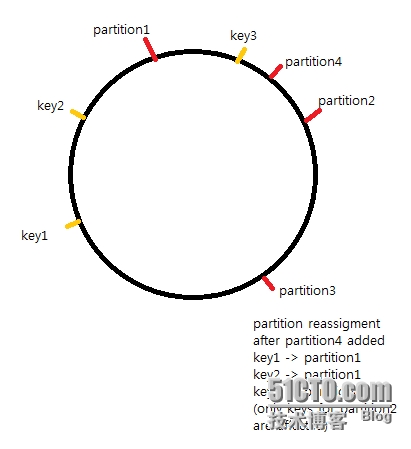

When a new partition is added to the cluster, some read request will be directed to memcached servers that belong tothe partition. However, the new server does not contain any data, so we must choose a memcached server in another partition and migrate its data to the newserver. We will choose one of the follower node in the source partition,provided that its data is fully synchronized with leader. Choosing a follower has the benefit that it does not add additional load to the leader which actively serves read/write request. Which source partition to choose is governed by the hash algorithm, if a key is hashed to partition p now hashed topartition p‘ -- the newly added partition. p is chosen as the source of repartition to p’. The consistent hashing algorithm must ensure that there is one single partition affected.

Deal with memcached server failure

With data replication and multiple serversin a partition, a single node failure will not impact the whole partition. If a follower node fails, we just restart it and rep-cached will automatically syncits data with the leader. The impact is minimal as it does not actively serve request. If a partition leader fails, data re-election process will be auto launched to choose a new follower as leader.

Auto leader election:

Auto leader election is a common feature in distributed system. Usually the leader performs tasks with a list of followers in standby mode. When the leader node fails, a leader election process must be re-triggered automatically among the rest of the followers to vote for a new leader. This effectively avoids single point of failure. In our scenario, a memcached node agent is elected as the leader in partition and serves read/write request. A repartitionManager is selected as the leader in the whole cluster to detect creation of new partition and assign repartition task.

We use standard leader election mechanism provided by zookeeper based on ephemeral sequential node. Node is auto assigned a sequence number that appends to the node name. The node with lowest sequence number is elected as the leader. Ephemeral nodes will be deleted automatically from zookeeper on failure and the other cluster members will watch the path and detect failure of leader and start a new election vote automatically.

Partition selection:

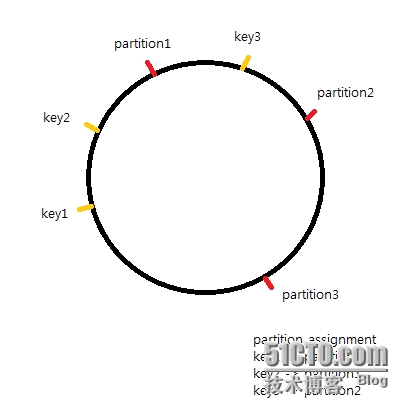

Partition is select based on hash algorithm.The traditional approach calculates hash(key) mod number of partitions to select one partition index. However, when a new partition is added to cluster, most ofthe keys will be affected and are indexed to a different partition. This will result in a lot of cache miss.

Consistent hashing is an alternative solution that deals with this problem. We calculate hash(partitionId) for each partition and maps it to a point on a circle. We then calculate hash(key) and also maps it to a point on the circle. The nearest point clockwise that maps to a partition is selected for the key. With this scheme, when a new partition is added, a single partition is affected only (the partition that is nearest tothe newly added partition anti-clockwise)

Couchbase uses a different approach, where a set of virtual nodes are created. Keys map to virtual nodes using traditional mod scheme. Number of virtual nodes must be large enough and it will remain unchanged when new physical nodes are added, whereas the mapping from virtual node to physical nodes will change.

Zookeeperdata structure:

Path | Data | Node Type | Description |

/clusters/{clusterId}/ | “” | Persistent | Store partitions under a cluster |

/clusters/{clusterId}/{partitionId}/ | “” | Persistent | Store servers under a partition |

/clusters/{clusterId}/{partitionId}/elections_{seqno} | Server’s ipaddress:port number | Ephemeral sequential | Store server’s actual ipaddress and port |

/clusters/{clusterId}/{partitionId}/leader_ | Leader’s ipaddress:port number | Ephemeral | Store leader’s actual ipaddress and port |

/repartition_election/{clusterId}/elections_{seqno} | RepartitionManager’s ipaddress and port number | Persistent | Store repartition managers under the cluster |

/repartition_task/{clusterId}/{partitionId}/ | Repartition Source’s ipaddress and port number | Persistent | Store repartition tasks under the partition |

Limitations:

We observe a number of limitations here

Repcached is built on memcached-1.2.8 whichis a very early version of memcached. There is no official build for laterversion, so binary protocol is not supported at all. Another major drawback isthat for repcached, there is no way to know when the replication has completed.Without knowing follower’s data replication status, we cannot take this into consideration on leader re-election and we may ends up choosing a leader within complete data.

How to perform replication is another challenge.Memcached does not have any API to iterate through all keys. There is an unofficial command “STAT CACHEDUMP [slabno] [count]” but this can only dump up to 1 mb data for each slab. So we cannot really have an elegant solution for repartition implementation.

We also do not consider the case where new write request may hit the repartition source server when data migration occurs.So data migrated will be out of dated. Mitigation to this problem is to record write in log during data repartition task running and apply the log after repartition task completes.

Couchbase is a mature technology that dealswith data partition and replication. It fully supports memcached protocol. Theclient is also an extension of existing sky memcached client with transparent dynamic cluster expansion support. I will write another blog to explore this technology.

Memcached Server High availability