High-Availability LVS in a Dual-Master Model with keepalived

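Lab environment (operating system: CentOS 6.5):

Director:

node1: IP 172.16.103.2, keepalived installed, VIP 172.16.103.20

node2: IP 172.16.103.3, keepalived installed, VIP 172.16.103.30

RS:

RS1: IP 172.16.103.1, provides the httpd service

RS2: IP 172.16.103.4, provides the httpd service

Expected result:

The two front-end Directors run keepalived. Each one generates its own set of LVS rules and runs independently. When one node fails, its VIP moves automatically to the other node and the service is not interrupted.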

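Lab topology: the two Directors and the two RSs all sit on the same 172.16.0.0/16 network, as LVS DR mode requires, and clients reach the service through the two VIPs.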

Lab steps:

1. Configure the back-end RS1 and RS2. Both are configured identically, so only the RS1 configuration is listed (the LVS cluster type is DR):

# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
# echo 1 > /proc/sys/net/ipv4/conf/eth0/arp_ignore
# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
# echo 2 > /proc/sys/net/ipv4/conf/eth0/arp_announce
# ifconfig lo:0 172.16.103.20 netmask 255.255.255.255 broadcast 172.16.103.20
# route add -host 172.16.103.20 dev lo:0
# ifconfig lo:1 172.16.103.30 netmask 255.255.255.255 broadcast 172.16.103.30
# route add -host 172.16.103.30 dev lo:1
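Because the same steps have to be repeated on every RS and for every VIP, it can help to generate them from one place. The following is a minimal sketch, not part of the original setup: the function name `rs_setup_commands` and the `lo:N` alias numbering are assumptions made for illustration. It only prints the commands and executes nothing.

```shell
#!/bin/sh
# Print the DR-mode real-server setup commands for an interface and a
# list of VIPs. Nothing is executed; pipe the output to `sh` as root
# to apply it. (Hypothetical helper, not from the original article.)
rs_setup_commands() {
    iface=$1; shift
    # ARP tuning so the RS never answers or announces ARP for the VIPs
    for scope in all "$iface"; do
        echo "echo 1 > /proc/sys/net/ipv4/conf/$scope/arp_ignore"
        echo "echo 2 > /proc/sys/net/ipv4/conf/$scope/arp_announce"
    done
    # One loopback alias plus host route per VIP: lo:0, lo:1, ...
    n=0
    for vip in "$@"; do
        echo "ifconfig lo:$n $vip netmask 255.255.255.255 broadcast $vip"
        echo "route add -host $vip dev lo:$n"
        n=$((n + 1))
    done
}
```

For this lab it would be called as `rs_setup_commands eth0 172.16.103.20 172.16.103.30`, and the printed commands reviewed before being piped to `sh`.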

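Create a default home page in /var/www/html. The content differs between RS1 and RS2 so that the tests show which host a request was forwarded to; in real use, the pages on the two RSs should be identical.

On RS1: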

# echo "<h1>www1.cluster.com</h1>" > /var/www/html/index.html

On RS2:

# echo "<h1>www2.cluster.com</h1>" > /var/www/html/index.html

Start the httpd service on both RS1 and RS2:

# service httpd start

So that the later tests work, check from another host that the home pages on RS1 and RS2 are reachable:

[root@node1 ~]# curl http://172.16.103.1
<h1>www1.cluster.com</h1>
[root@node1 ~]# curl http://172.16.103.4
<h1>www2.cluster.com</h1>

The two back-end RSs are now configured.

2. Configure the front-end Directors

Install keepalived on both node1 and node2:

# yum install -y keepalived

Configuration for node1:

! Configuration File for keepalived

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}

! VI_1 carries VIP 172.16.103.20; node1 is its MASTER
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 61
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123abc
    }
    virtual_ipaddress {
        172.16.103.20
    }
}

! VI_2 carries VIP 172.16.103.30; node1 is its BACKUP
vrrp_instance VI_2 {
    state BACKUP
    interface eth0
    virtual_router_id 71
    priority 99
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass helloka
    }
    virtual_ipaddress {
        172.16.103.30
    }
}

virtual_server 172.16.103.20 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    nat_mask 255.255.0.0
    protocol TCP

    real_server 172.16.103.1 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }

    real_server 172.16.103.4 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
}

virtual_server 172.16.103.30 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    nat_mask 255.255.0.0
    protocol TCP

    real_server 172.16.103.1 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }

    real_server 172.16.103.4 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
}

Note: the configuration file defines two vrrp_instance blocks, VI_1 and VI_2, with different virtual router IDs and different VIPs. So that the resources of the two instances run on different hosts when both nodes are up, each node is MASTER for one instance and BACKUP for the other. If keepalived is not running on one node, the other node brings up the rules and VIPs of both instances, which gives a setup that is both dual-master and highly available. The health of the back-end RSs is checked at layer 7: keepalived requests the default home page of each site, and a status code of 200 means the site is reachable and the RS is working.
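The layer-7 check described above can be approximated by hand, which is useful when a real server unexpectedly drops out of the pool. This is only a rough sketch: the function name `http_check` and its parameters are assumptions, the attempt count and delay loosely mirror `nb_get_retry` and `delay_before_retry`, and keepalived's own checker may count retries differently.

```shell
#!/bin/sh
# Rough approximation of the HTTP_GET checker: succeed if GET <url>
# returns status 200 within a number of attempts.
# (Hypothetical helper; keepalived's exact retry semantics may differ.)
http_check() {
    url=$1; attempts=${2:-3}; delay=${3:-1}
    tried=0
    while [ "$tried" -lt "$attempts" ]; do
        # -w '%{http_code}' prints only the status code; 000 on failure
        code=$(curl -s -o /dev/null --connect-timeout 2 \
                    -w '%{http_code}' "$url" || true)
        [ "$code" = "200" ] && return 0
        tried=$((tried + 1))
        # Wait before the next attempt, but not after the last one
        [ "$tried" -lt "$attempts" ] && sleep "$delay"
    done
    return 1
}
```

For example, `http_check http://172.16.103.1/ && echo healthy` run from a Director shows whether RS1 would likely pass the check.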

Configuration for node2:

! Configuration File for keepalived

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}

! VI_1 carries VIP 172.16.103.20; node2 is its BACKUP
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 61
    priority 99
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123abc
    }
    virtual_ipaddress {
        172.16.103.20
    }
}

! VI_2 carries VIP 172.16.103.30; node2 is its MASTER
vrrp_instance VI_2 {
    state MASTER
    interface eth0
    virtual_router_id 71
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass helloka
    }
    virtual_ipaddress {
        172.16.103.30
    }
}

virtual_server 172.16.103.20 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    nat_mask 255.255.0.0
    protocol TCP

    real_server 172.16.103.1 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }

    real_server 172.16.103.4 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
}

virtual_server 172.16.103.30 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    nat_mask 255.255.0.0
    protocol TCP

    real_server 172.16.103.1 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }

    real_server 172.16.103.4 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
}

The node2 configuration differs from node1 only in that the roles in the two instances are reversed: where node1 is MASTER, node2 is BACKUP (with the priorities 100 and 99 swapped to match). Everything else is the same.
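Since only the state and priority lines differ between the two nodes, the VRRP part of both files can be produced from one template. The sketch below is illustrative: the helper names `vrrp_instance` and `vrrp_config_for` are assumptions, and the output covers only the two vrrp_instance blocks, not global_defs or the virtual_server sections.

```shell
#!/bin/sh
# Emit one vrrp_instance stanza. Only state and priority vary per node.
# (Hypothetical helpers, not part of keepalived itself.)
vrrp_instance() {
    name=$1 state=$2 vrid=$3 prio=$4 pass=$5 vip=$6
    cat <<EOF
vrrp_instance $name {
    state $state
    interface eth0
    virtual_router_id $vrid
    priority $prio
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass $pass
    }
    virtual_ipaddress {
        $vip
    }
}
EOF
}

# Print both stanzas for "node1" or "node2"; the roles are mirrored.
vrrp_config_for() {
    case $1 in
        node1) s1=MASTER p1=100 s2=BACKUP p2=99 ;;
        node2) s1=BACKUP p1=99 s2=MASTER p2=100 ;;
        *) echo "unknown node: $1" >&2; return 1 ;;
    esac
    vrrp_instance VI_1 "$s1" 61 "$p1" 123abc 172.16.103.20
    vrrp_instance VI_2 "$s2" 71 "$p2" helloka 172.16.103.30
}
```

Running `vrrp_config_for node1` and `vrrp_config_for node2` and comparing the two outputs with `diff` should show exactly the state and priority lines.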

3. Test the configuration:

First start keepalived on a single node, node1 for example, and check where the resources come up:

[root@node1 keepalived]# ip addr show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:e1:37:51 brd ff:ff:ff:ff:ff:ff
    inet 172.16.103.2/16 brd 172.16.255.255 scope global eth0
    inet 172.16.103.20/32 scope global eth0
    inet 172.16.103.30/32 scope global eth0
    inet6 fe80::20c:29ff:fee1:3751/64 scope link
       valid_lft forever preferred_lft forever

Both VIPs, 172.16.103.20 and 172.16.103.30, are configured on node1's eth0 interface.

The ipvs rules show the same picture: both sets of rules are active on node1.

[root@node1 keepalived]# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  172.16.103.20:80 rr
  -> 172.16.103.1:80              Route   1      0          0
  -> 172.16.103.4:80              Route   1      0          0
TCP  172.16.103.30:80 rr
  -> 172.16.103.1:80              Route   1      0          0
  -> 172.16.103.4:80              Route   1      0          0
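For comparison, the table above is what one would get by entering the rules by hand with ipvsadm. The sketch below prints those commands for one virtual server; the function name `ipvs_rules` is an assumption, port 80, the rr scheduler and weight 1 are hard-coded to match this lab, and nothing is executed.

```shell
#!/bin/sh
# Print ipvsadm commands equivalent to one virtual_server block:
# rr scheduler, DR forwarding (-g), weight 1. Output only.
# (Hypothetical helper; keepalived programs IPVS directly.)
ipvs_rules() {
    vip=$1; shift
    # -A adds the virtual service, -t marks it TCP, -s picks the scheduler
    echo "ipvsadm -A -t $vip:80 -s rr"
    for rs in "$@"; do
        # -a adds a real server, -g selects direct routing (shown as Route)
        echo "ipvsadm -a -t $vip:80 -r $rs:80 -g -w 1"
    done
}
```

Calling `ipvs_rules 172.16.103.20 172.16.103.1 172.16.103.4` prints three commands, one for the virtual service and one per real server.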

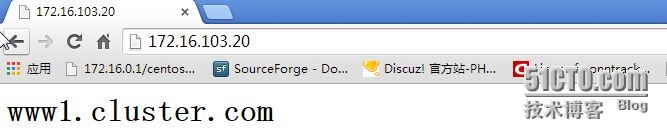

Entering 172.16.103.20 in a browser gives this result:

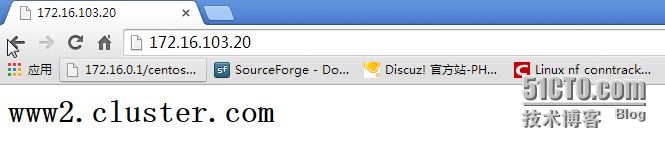

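Refreshing a few times shows the load-balancing effect:

Requests to the same VIP return the pages of both back-end RSs. In real use, the page content on the two RSs should be identical.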

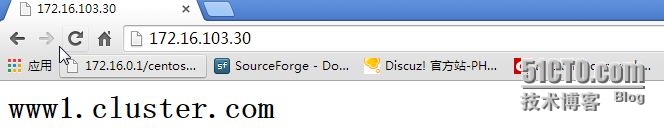
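The same refresh test can be run from a shell, which avoids browser caching. A minimal sketch, assuming curl is available on the client; the function name `tally_responses` is made up for this example. Each curl invocation opens a new TCP connection, so with the rr scheduler the two pages should appear in roughly equal numbers.

```shell
#!/bin/sh
# Request a URL n times and tally the distinct response bodies.
# (Hypothetical helper for checking the rr distribution by hand.)
tally_responses() {
    url=$1; n=${2:-10}
    i=0
    while [ "$i" -lt "$n" ]; do
        # A failed request contributes no line instead of aborting
        curl -s --connect-timeout 2 "$url" || true
        i=$((i + 1))
    done | sort | uniq -c
}
```

For example, `tally_responses http://172.16.103.20/ 10` prints one count per distinct page body.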

Test the other VIP, 172.16.103.30:

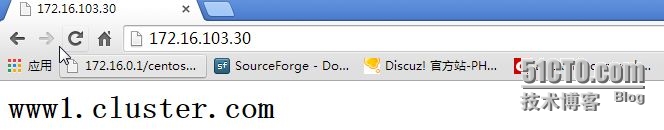

After a refresh, the request is forwarded to the other back-end RS:

Now start node2. The corresponding VIP, 172.16.103.30, moves to this node:

[root@node2 keepalived]# service keepalived start
Starting keepalived:                                       [  OK  ]

Check where the VIP came up; VIP 172.16.103.30 is now configured on eth0:

[root@node2 keepalived]# ip addr show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:cf:64:8f brd ff:ff:ff:ff:ff:ff
    inet 172.16.103.3/16 brd 172.16.255.255 scope global eth0
    inet 172.16.103.30/32 scope global eth0
    inet6 fe80::20c:29ff:fecf:648f/64 scope link
       valid_lft forever preferred_lft forever

Now visit 172.16.103.30 in the browser:

Access still works without problems.

If node1 now fails, simulated here by stopping the service, VIP 172.16.103.20 moves automatically to node2. Both VIPs are then configured on node2, which is the dual-master high-availability LVS behavior this lab set out to show:

[root@node1 keepalived]# service keepalived stop
Stopping keepalived:                                       [  OK  ]

Check the resulting VIP configuration:

[root@node2 keepalived]# ip addr show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:cf:64:8f brd ff:ff:ff:ff:ff:ff
    inet 172.16.103.3/16 brd 172.16.255.255 scope global eth0
    inet 172.16.103.30/32 scope global eth0
    inet 172.16.103.20/32 scope global eth0
    inet6 fe80::20c:29ff:fecf:648f/64 scope link
       valid_lft forever preferred_lft forever
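Reading the full `ip addr show` output after every failover test gets tedious. The sketch below filters that output down to the VIPs a node currently holds; the function name `held_vips` is an assumption, and it relies on keepalived adding the VIPs as /32 addresses, as the listings above show.

```shell
#!/bin/sh
# Read `ip addr show` output on stdin and print which of the given
# VIPs appear in it as /32 addresses.
# (Hypothetical helper for checking VIP placement.)
held_vips() {
    addrs=$(cat)
    for vip in "$@"; do
        case "$addrs" in
            # The trailing "/32 " keeps 172.16.103.3 from matching .30
            *"inet $vip/32 "*) echo "$vip" ;;
        esac
    done
}
```

On either Director, `ip addr show dev eth0 | held_vips 172.16.103.20 172.16.103.30` lists the VIPs held by that node; after node1 is stopped, node2 should report both.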

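Note: in real use the two VIPs are public IP addresses registered with the upstream DNS server. Users reach the site by domain name, and DNS round-robin spreads their requests across the two front-end Directors, while both Directors schedule onto the same group of back-end RSs. This gives load balancing at two levels: user requests are spread across the Directors, and each Director spreads its requests across the RSs. The front-end Directors are also highly available, because each one acts as the backup for the other.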
