LNMT/LAMT: static/dynamic separation, load balancing, and session persistence

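1. This experiment mainly uses nginx as a proxy in front of tomcat to handle dynamic responses;

2. use httpd as a proxy in front of tomcat to handle dynamic requests;

3. use httpd and tomcat to implement session binding (sticky sessions);

4. use httpd and tomcat to implement session persistence (session replication);

5. use httpd to load-balance across tomcat instances.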

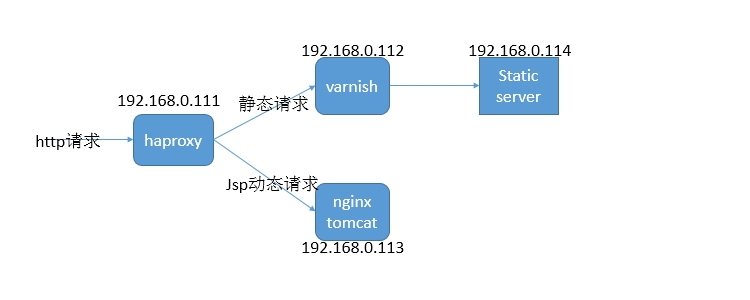

I. LNMT test configuration

LNMT:

| Host | IP / hostname |
|---|---|
| haproxy | 192.168.0.111 node1.soul.com |
| varnish | 192.168.0.112 node2.soul.com |
| nginx+tomcat | 192.168.0.113 node3.soul.com |
| httpd | 192.168.0.114 node4.soul.com |

1) Configure haproxy

# Install haproxy directly with yum.

[root@node1 ~]# vim /etc/haproxy/haproxy.cfg

frontend main *:80

acl url_static path_beg -i /static /images /javascript /stylesheets

acl url_static path_end -i .jpg .gif .png .css .js .html .htm

acl url_dynamic path_end -i .jsp .do

use_backend static if url_static

use_backend dynamic if url_dynamic

default_backend static

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

backend static

balance roundrobin

server static 192.168.0.112:80 check

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend dynamic

balance roundrobin

server node3 192.168.0.113:80 check
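To make the routing rules easier to reason about, here is a small Python sketch of the decision the frontend above makes. It is only a model of the ACL logic under my reading of the config (same-named `acl` lines are OR-ed, `use_backend` rules are tried in order, `-i` ignores case); the function name `choose_backend` is made up for illustration and has nothing to do with haproxy's implementation.

```python
# Simplified model of the frontend rules in haproxy.cfg above.
# It mirrors the ACL logic only; it is not how haproxy itself works internally.

STATIC_PREFIXES = ("/static", "/images", "/javascript", "/stylesheets")
STATIC_SUFFIXES = (".jpg", ".gif", ".png", ".css", ".js", ".html", ".htm")
DYNAMIC_SUFFIXES = (".jsp", ".do")


def choose_backend(url: str) -> str:
    """Return the backend name the rules above would select for this URL."""
    # path_beg / path_end look at the path only, not the query string;
    # the -i flag makes the comparison case-insensitive.
    path = url.split("?", 1)[0].lower()

    # Two acl lines with the same name (url_static) are OR-ed together.
    url_static = path.startswith(STATIC_PREFIXES) or path.endswith(STATIC_SUFFIXES)
    url_dynamic = path.endswith(DYNAMIC_SUFFIXES)

    # use_backend rules are evaluated in order; the first match wins.
    if url_static:
        return "static"
    if url_dynamic:
        return "dynamic"
    return "static"  # default_backend


for url in ("/index.html", "/dynamic.jsp", "/images/logo.jsp", "/"):
    print(url, "->", choose_backend(url))
```

One consequence worth noticing: a `.jsp` file under `/images` would be sent to the static backend, because the `url_static` rule is checked first.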

[root@node1 ~]# service haproxy start

Starting haproxy: [ OK ]

[root@node1 ~]# ss -tunl | grep 80

tcp LISTEN 0 128 *:80 *:*

# Test: haproxy started and is listening normally.

2) Configure varnish:

# Installation is not covered here; it was described in an earlier post.

[root@node2 ~]# vim /etc/sysconfig/varnish

VARNISH_LISTEN_PORT=80 # change the listening port

#

# # Telnet admin interface listen address and port

VARNISH_ADMIN_LISTEN_ADDRESS=127.0.0.1

VARNISH_ADMIN_LISTEN_PORT=6082

#

# # Shared secret file for admin interface

VARNISH_SECRET_FILE=/etc/varnish/secret

#

# # The minimum number of worker threads to start

VARNISH_MIN_THREADS=50

#

# # The Maximum number of worker threads to start

VARNISH_MAX_THREADS=1000

#

# # Idle timeout for worker threads

VARNISH_THREAD_TIMEOUT=120

#

# # Cache file location

VARNISH_STORAGE_FILE=/var/lib/varnish/varnish_storage.bin

#

# # Cache file size: in bytes, optionally using k / M / G / T suffix,

# # or in percentage of available disk space using the % suffix.

VARNISH_STORAGE_SIZE=1G

#

# # Backend storage specification

#VARNISH_STORAGE="file,${VARNISH_STORAGE_FILE},${VARNISH_STORAGE_SIZE}"

VARNISH_STORAGE="malloc,100M" # change the storage type

3) Configure the VCL file:

[root@node2 ~]# vim /etc/varnish/test.vcl

backend static {

.host = "192.168.0.114";

.port = "80";

}

acl purgers {

"127.0.0.1";

"192.168.0.0"/24;

}

sub vcl_recv {

if(req.request == "PURGE") {

if(!client.ip ~ purgers) {

error 405 "Method not allowed.";

}

}

if (req.restarts == 0) {

if (req.http.x-forwarded-for) {

set req.http.X-Forwarded-For = req.http.X-Forwarded-For + ", " + client.ip;

} else {

set req.http.X-Forwarded-For = client.ip;

}

}

return(lookup);

}

sub vcl_hit {

if(req.request == "PURGE") {

purge;

error 200 "Purged Success.";

}

}

sub vcl_miss {

if(req.request == "PURGE") {

purge;

error 404 "Not in cache.";

}

}

sub vcl_pass {

if(req.request == "PURGE") {

error 502 "Purged on a passed object.";

}

}

sub vcl_fetch {

if(req.url ~ "\.(jpg|png|gif|jpeg)$") {

set beresp.ttl = 7200s;

}

if(req.url ~ "\.(html|htm|css|js)$") {

set beresp.ttl = 1200s;

}

}

sub vcl_deliver {

if (obj.hits > 0) {

set resp.http.X-Cache = "HIT from " + server.ip;

}else {

set resp.http.X-Cache = "MISS";

}

}

4) Compile and activate:

[root@node2 ~]# varnishadm -S /etc/varnish/secret -T 127.0.0.1:6082

varnish> vcl.load test2 test.vcl

200

VCL compiled.

varnish> vcl.use test2

200

varnish>

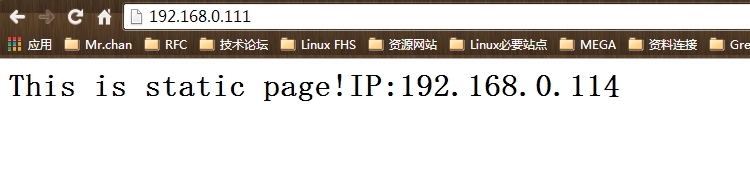
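The `acl purgers` check and the TTL rules in `vcl_fetch` from test.vcl can be sketched in Python as follows. This is only an illustration of the matching logic as I understand it; `may_purge` and `ttl_for` are hypothetical helper names, and returning `None` stands in for "leave the default TTL alone".

```python
import ipaddress
import re

# Mirrors "acl purgers" in test.vcl: localhost plus the 192.168.0.0/24 network.
PURGERS = [
    ipaddress.ip_network("127.0.0.1/32"),
    ipaddress.ip_network("192.168.0.0/24"),
]

# Same patterns as in vcl_fetch; they are case-sensitive and anchored at the
# end of the URL, so a query string prevents a match.
IMAGE_RE = re.compile(r"\.(jpg|png|gif|jpeg)$")
PAGE_RE = re.compile(r"\.(html|htm|css|js)$")


def may_purge(client_ip: str) -> bool:
    """True if a PURGE request from this address would pass the ACL."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in PURGERS)


def ttl_for(url: str):
    """TTL in seconds that vcl_fetch would set, or None if no rule matches."""
    if IMAGE_RE.search(url):
        return 7200
    if PAGE_RE.search(url):
        return 1200
    return None


print(may_purge("192.168.0.50"), may_purge("10.0.0.1"))
print(ttl_for("/logo.png"), ttl_for("/index.html"), ttl_for("/logo.png?v=2"))
```

The last call is there to show that a URL with a query string falls through both rules, which may or may not be what you want for versioned static assets.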

Provide a static page:

[root@node4 ~]# service httpd start

Starting httpd: [ OK ]

[root@node4 ~]# vim /var/www/html/index.html

<h1>This is static page!IP:192.168.0.114</h1>

5) Configure nginx and tomcat:

# Installation is not covered here. Configure nginx:

[root@node3 ~]# vim /etc/nginx/conf.d/default.conf

# Defining proxy_pass inside the location block is enough

location / {

# root /usr/share/nginx/html;

# index index.html index.htm;

proxy_pass http://192.168.0.113:8080; # proxy everything to port 8080 on the backend

}

Configure tomcat

# Installation is not covered here, and no configuration is needed; just start tomcat once it is installed.

[root@node3 conf]# ll /etc/rc.d/init.d/tomcat

-rwxr-xr-x 1 root root 1288 May 11 18:28 /etc/rc.d/init.d/tomcat

[root@node3 conf]# service tomcat start

Using CATALINA_BASE: /usr/local/tomcat

Using CATALINA_HOME: /usr/local/tomcat

Using CATALINA_TMPDIR: /usr/local/tomcat/temp

Using JRE_HOME: /usr/java/latest

Using CLASSPATH: /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar

[root@node3 ~]# ss -tunl | grep 8080

tcp LISTEN 0 100 :::8080 :::*

Provide a JSP page file

[root@node3 ROOT]# pwd

/usr/local/tomcat/webapps/ROOT

[root@node3 ROOT]# vim dynamic.jsp

<%@ page language="java" %>

<%@ page import="java.util.*" %>

<html>

<head>

<title>JSP test page.</title>

</head>

<body>

<% out.println("This is dynamic page!"); %>

</body>

</html>

6) Test access:

Access tests succeed and static/dynamic separation works. This completes the LNMT configuration. MySQL can be installed and tested in much the same way as in LAMP.

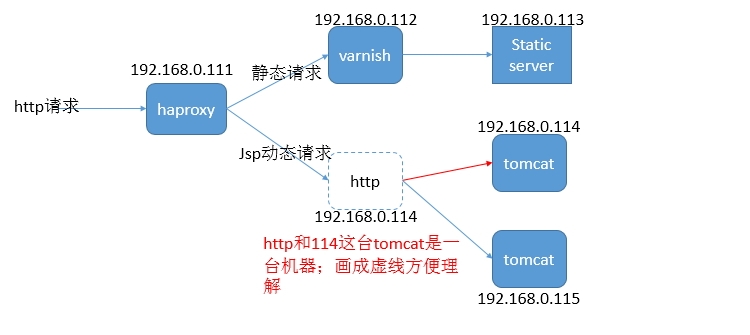
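If you would rather check the result from a script than from a browser, something like the following could work. It is a rough sketch: `cache_status` and `check` are names I made up, and it assumes the `X-Cache` header set in `vcl_deliver` reaches the client unchanged through haproxy.

```python
import urllib.request


def cache_status(headers) -> str:
    """Classify a response by the X-Cache header that vcl_deliver sets."""
    value = headers.get("X-Cache")
    if value is None:
        # The response did not pass through varnish (or the header was removed).
        return "no X-Cache header"
    return "HIT" if value.startswith("HIT") else "MISS"


def check(url: str, timeout: float = 5.0):
    """Fetch a URL and return (HTTP status, cache classification)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status, cache_status(resp.headers)


# Offline illustration with hand-written headers:
print(cache_status({"X-Cache": "HIT from 192.168.0.112"}))
print(cache_status({"X-Cache": "MISS"}))
print(cache_status({}))
```

In this lab one would call `check("http://192.168.0.111/index.html")` twice and expect the second call to report a hit, while `check("http://192.168.0.111/dynamic.jsp")` should carry no `X-Cache` header, since dynamic requests go from haproxy straight to nginx.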

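II. LAMT configuration:

Rough plan:

| Host | IP / hostname |
|---|---|
| haproxy | 192.168.0.111 node1.soul.com |
| varnish | 192.168.0.112 node2.soul.com |
| httpd | 192.168.0.113 node3.soul.com |
| httpd+tomcat | 192.168.0.114 node4.soul.com |
| tomcat | 192.168.0.115 node5.soul.com |

This continues from the configuration above, so the haproxy and varnish setup is not repeated. The focus is on integrating httpd with tomcat and on load balancing. The corresponding IPs need to be changed: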

haproxy:

[root@node1 ~]# vim /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend dynamic

balance roundrobin

server node4 192.168.0.114:80 check # the backend here is changed to host 114

varnish on node2:

[root@node2 ~]# vim /etc/varnish/test.vcl

backend static {

.host = "192.168.0.113";

.port = "80";

}

Recompile the VCL and test.

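In an architecture where Apache fronts Tomcat, Apache exchanges data with the backend Tomcat through the mod_jk, mod_jk2, or mod_proxy module. On the Tomcat side, every web container instance has a connector component written in Java; in Tomcat 6 this is the org.apache.catalina.connector.Connector class. Its constructor can build two kinds of connector: HTTP/1.1, which answers requests over HTTP/HTTPS, and AJP/1.3, which answers requests over AJP. A connector is created simply by declaring it in the server.xml configuration file, but the class actually used differs depending on whether the system supports APR (Apache Portable Runtime).

APR is a collection of native libraries that forms a communication layer on top of the operating system and exposes a common, standard API. For applications that use it, APR offers better scalability when communicating with Apache.

Note also that mod_jk2 is no longer supported. mod_jk is still supported by Apache, but the project is far less active than it used to be. The more common approach today is therefore mod_proxy.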
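To see which connectors a given Tomcat instance declares, the server.xml file can be inspected with a few lines of Python. The XML below is a trimmed stand-in modelled on the stock Tomcat 6/7 file, not the file from this lab, and `list_connectors` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Trimmed stand-in for conf/server.xml (assumed defaults: HTTP on 8080, AJP on 8009).
SERVER_XML = """
<Server port="8005" shutdown="SHUTDOWN">
  <Service name="Catalina">
    <Connector port="8080" protocol="HTTP/1.1"
               connectionTimeout="20000" redirectPort="8443" />
    <Connector port="8009" protocol="AJP/1.3" redirectPort="8443" />
    <Engine name="Catalina" defaultHost="localhost" />
  </Service>
</Server>
"""


def list_connectors(xml_text: str):
    """Return (protocol, port) for every <Connector> element, in document order."""
    root = ET.fromstring(xml_text)
    return [(c.get("protocol"), int(c.get("port"))) for c in root.iter("Connector")]


for protocol, port in list_connectors(SERVER_XML):
    print(protocol, "listening on port", port)
```

The AJP/1.3 connector on port 8009 is the one that httpd's `ajp://` balancer members talk to.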

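This experiment uses the mod_proxy module:

1) Configure httpd and tomcat on node4:

# Configure httpd to talk to the backend tomcat through mod_proxy; here a separate configuration file is written for mod_proxy: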

[root@node4 conf.d]# pwd

/etc/httpd/conf.d

[root@node4 conf.d]# vim mod_proxy.conf

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

# Define a balancer group; its members are reached over the AJP protocol

<proxy balancer://lb>

BalancerMember ajp://192.168.0.114:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.0.115:8009 loadfactor=1 route=TomcatB

</proxy>

# Proxy all requests to the backend group

ProxyPass / balancer://lb/

ProxyPassReverse / balancer://lb/

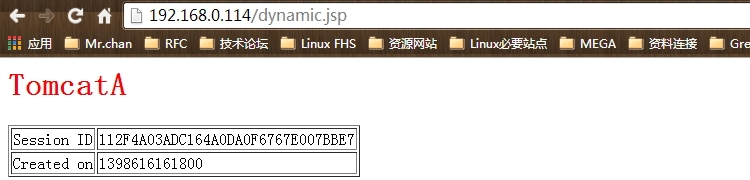
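How `loadfactor` turns into a request distribution can be illustrated with a simplified Python model of the `byrequests` scheduling method (the default for mod_proxy_balancer). The `Balancer` class is my own toy, based on the algorithm described in the Apache documentation; it ignores worker health, retries, and everything else the real module does.

```python
class Balancer:
    """Toy model of request-counting balancing driven by loadfactor."""

    def __init__(self, members):
        # members: mapping of route name -> loadfactor
        self.factors = dict(members)
        self.status = {name: 0 for name in self.factors}

    def pick(self) -> str:
        total = 0
        candidate = None
        for name, factor in self.factors.items():
            # Every worker earns its loadfactor on each request ...
            self.status[name] += factor
            total += factor
            if candidate is None or self.status[name] > self.status[candidate]:
                candidate = name
        # ... and the chosen worker pays back the sum of all factors.
        self.status[candidate] -= total
        return candidate


lb = Balancer({"TomcatA": 1, "TomcatB": 1})
print([lb.pick() for _ in range(4)])
```

With both factors set to 1, as in the configuration above, requests simply alternate between the two members; raising one factor shifts the share proportionally.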

# The tomcat installation is not repeated here. Provide the page files on node4 and node5:

[root@node4 ~]# vim /usr/local/tomcat/webapps/ROOT/dynamic.jsp

<%@ page language="java" %>

<html>

<head><title>TomcatA</title></head>

<body>

<h1><font color="red">TomcatA </font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("abc","abc"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

------------------------------------------------------------------

[root@node5 ~]# vim /usr/local/tomcat/webapps/ROOT/dynamic.jsp

<%@ page language="java" %>

<html>

<head><title>TomcatB</title></head>

<body>

<h1><font color="blue">TomcatB </font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("abc","abc"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

# When done, restart httpd and test.

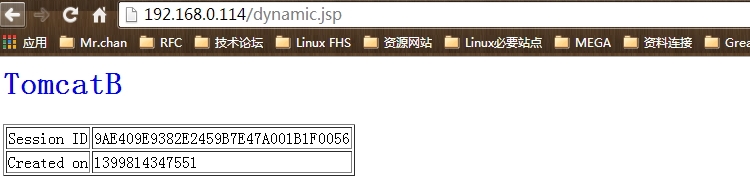

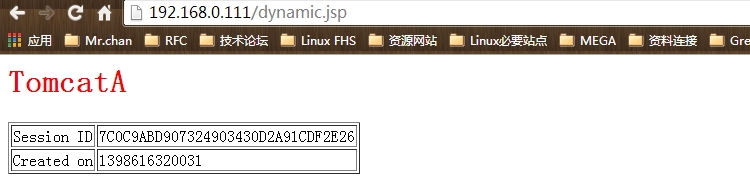

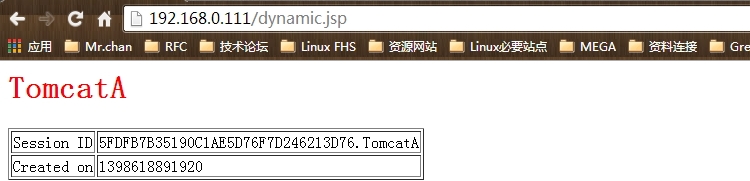

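Testing through the haproxy address 192.168.0.111 works as well:

2) Bind sessions and enable the load-balancer management interface:

# Modify the httpd proxy configuration file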

[root@node4 ~]# vim /etc/httpd/conf.d/mod_proxy.conf

ProxyVia on

ProxyRequests off

ProxyPreserveHost on

<proxy balancer://lb>

BalancerMember ajp://192.168.0.114:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.0.115:8009 loadfactor=1 route=TomcatB

</proxy>

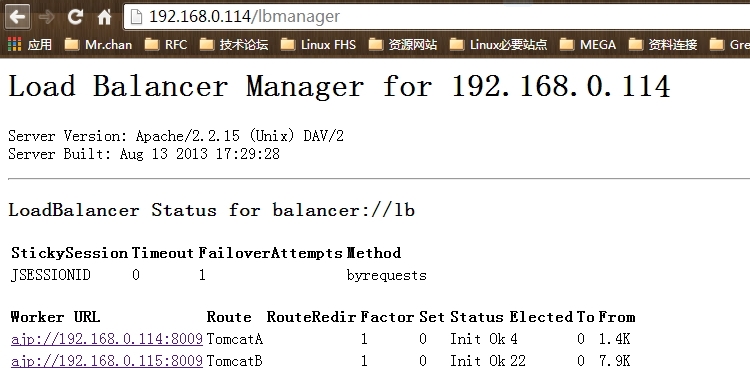

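# Define the load-balancer management interface

<Location /lbmanager>

SetHandler balancer-manager

</Location>

# Do not proxy the management interface

ProxyPass /lbmanager !

# Enable session binding (sticky sessions)

ProxyPass / balancer://lb/ stickysession=JSESSIONID

ProxyPassReverse / balancer://lb/

# Besides the httpd configuration, the tomcat configuration must also be changed; make the corresponding change on both backend nodes: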

[root@node4 ~]# vim /usr/local/tomcat/conf/server.xml

<Engine name="Catalina" defaultHost="localhost" jvmRoute="TomcatA">

# Add jvmRoute="TomcatA" to this line; on node5 add TomcatB instead.

Restart httpd and tomcat and test:

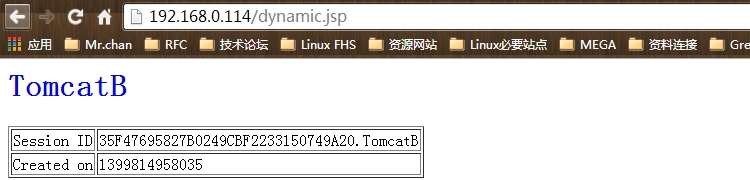

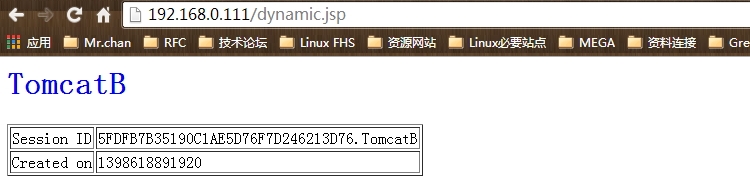

The session ID now has route information appended, and refreshing the page no longer sends the request to the TomcatA host.
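The way stickysession and jvmRoute fit together can be sketched in Python: Tomcat appends its jvmRoute to the session ID after a dot, and the balancer matches that suffix against the `route=` of each member. The helper names and the sample session ID below are invented for illustration, and the real module handles more cases (URL-encoded session IDs, failed members) than this sketch does.

```python
# route -> backend, as declared by the BalancerMember lines above
MEMBERS = {
    "TomcatA": "ajp://192.168.0.114:8009",
    "TomcatB": "ajp://192.168.0.115:8009",
}


def route_of(session_id: str):
    """Return the route suffix of a session ID such as '<id>.TomcatB', or None."""
    _, dot, route = session_id.partition(".")
    return route if dot and route else None


def pick_member(cookies: dict):
    """Return the backend pinned by the JSESSIONID cookie, or None if unpinned."""
    session_id = cookies.get("JSESSIONID")
    if session_id is None:
        return None  # no session yet: the normal balancing method decides
    return MEMBERS.get(route_of(session_id))


print(pick_member({"JSESSIONID": "0123ABCD.TomcatB"}))  # hypothetical session ID
print(pick_member({}))
```

This also explains why jvmRoute has to be set on both nodes: without the suffix there is nothing for httpd to match, and requests are balanced as before.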

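The management interface also works; simple management operations can be performed there.

3) Next, configure tomcat session clustering:

Details may differ between versions; see the official documentation: http://tomcat.apache.org/tomcat-7.0-doc/cluster-howto.html

# Modify the tomcat configuration file; add the following inside the Engine section: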

[root@node4 ~]# vim /usr/local/tomcat/conf/server.xml

<Engine name="Catalina" defaultHost="localhost" jvmRoute="TomcatA">

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<!-- multicast address used for cluster membership -->

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.0.40.4"

port="45564"

frequency="500"

dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="auto"

port="4000"

autoBind="100"

selectorTimeout="5000"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/"

deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/"

watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

# When done, copy the file to node5 and change jvmRoute accordingly. The following also needs to be added to web.xml:

[root@node4 ~]# cp /usr/local/tomcat/conf/web.xml /usr/local/tomcat/webapps/ROOT/WEB-INF/

# This setup uses the default application directory, so the file is copied there; adjust the path to match your own deployment.

[root@node4 ~]# vim /usr/local/tomcat/webapps/ROOT/WEB-INF/web.xml

<distributable/>

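# Add the line above anywhere in the body of the file (inside the <web-app> element); copy the file to node5 as well.

# Disable stickiness on the balancer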

[root@node4 ~]# vim /etc/httpd/conf.d/mod_proxy.conf

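# Comment out or delete stickysession=JSESSIONID on the ProxyPass line.

# Restart httpd and tomcat and test.

As the test shows, the session ID stays the same even though the serving node changes; session persistence is now in place.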
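The idea behind the DeltaManager setup, where every session change is sent to all other cluster members, can be shown with a toy model. `Node` and `join` are invented names; nothing here touches Tomcat or its multicast membership, it only shows why the session survives a switch between nodes.

```python
class Node:
    """Toy cluster member that copies every session change to its peers."""

    def __init__(self, name):
        self.name = name
        self.sessions = {}  # session id -> attribute dict
        self.peers = []

    def _apply(self, session_id, key, value):
        self.sessions.setdefault(session_id, {})[key] = value

    def set_attribute(self, session_id, key, value):
        # Store locally, then replicate the change to all other members.
        self._apply(session_id, key, value)
        for peer in self.peers:
            peer._apply(session_id, key, value)

    def get_attribute(self, session_id, key):
        return self.sessions.get(session_id, {}).get(key)


def join(*nodes):
    """Make every node a peer of every other node (all-to-all)."""
    for node in nodes:
        node.peers = [p for p in nodes if p is not node]


tomcat_a, tomcat_b = Node("TomcatA"), Node("TomcatB")
join(tomcat_a, tomcat_b)
tomcat_a.set_attribute("S1", "abc", "abc")  # same attribute the test JSP sets
print(tomcat_b.get_attribute("S1", "abc"))
```

Because every change is copied to every member, this approach suits small clusters like the two-node one here; the cost grows with the number of nodes.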

If there are any mistakes, corrections are welcome.

This article comes from the "Soul" blog; please keep this attribution: http://chenpipi.blog.51cto.com/8563610/1409622