首页 > 代码库 > 一个关于heka采集系统的问题

一个关于heka采集系统的问题

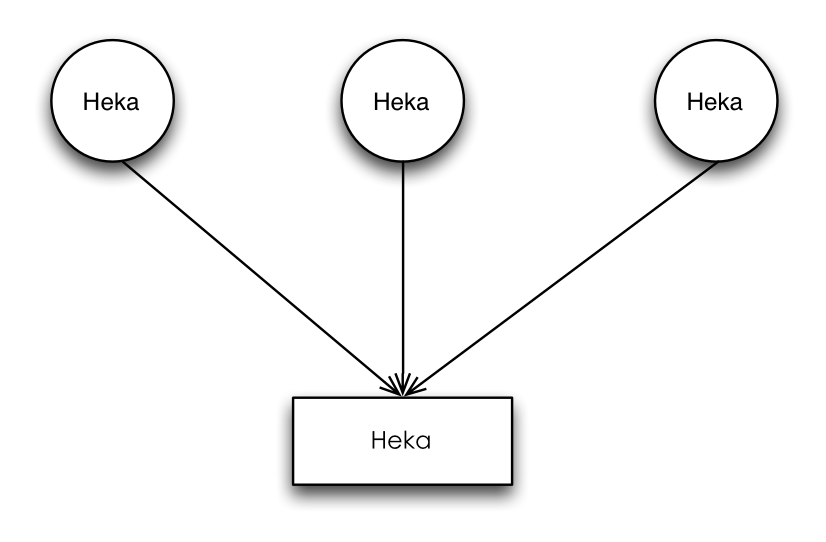

配置架构:

a. Heka’s Agent/Aggregator架构

b:以一台agent为例进行说明,agent1配置文件如下:

[NginxLogInput] type = "LogstreamerInput" log_directory = "/usr/local/openresty/nginx/logs/" file_match = ‘access\.log‘ decoder = "NginxLogDecoder" hostname = "ID:XM_1_1" [NginxLogDecoder] type = "SandboxDecoder" filename = "lua_decoders/nginx_access.lua" [NginxLogDecoder.config] log_format = ‘$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"‘ type = "nginx-access" [ProtobufEncoder] [NgxLogOutput] type = "HttpOutput" message_matcher = "TRUE" address = "http://127.0.0.1:10000" method = "POST" encoder = "ProtobufEncoder" # [NgxLogOutput.headers] # content-type = "application/octet-stream" # [NgxLogOutput] # type = "LogOutput" # message_matcher = "TRUE" # encoder = "ProtobufEncoder"

aggregator配置如下:

[LogInput]

type = "HttpListenInput"

#parser_type = "message.proto"

address = "0.0.0.0:10000"

decoder = "ProtobufDecoder"

[ProtobufDecoder]

[ESJsonEncoder]

index = "%{Type}-%{2006.01.02}"

es_index_from_timestamp = true

type_name = "%{Type}"

[PayloadEncoder]

[LogOutput]

type = "LogOutput"

message_matcher = "TRUE"

encoder = "ESJsonEncoder"

2. 问题描述:通过以上配置以后本应该可以将nginx log文件中数据发送到aggregator,并显示出来,但实际上并未显示;

3. 解决方法:修改protobuf.go中的Decode接口:

if err = proto.Unmarshal([]byte(*pack.Message.Payload), pack.Message); err == nil {

// fmt.Println("ProtobufDecoder:", string(pack.MsgBytes))

//fmt.Println("ProtobufDecoder", pack.Message.Fields)

packs = []*PipelinePack{pack}

} else {

atomic.AddInt64(&p.processMessageFailures, 1)

}

通过以上代码可以看出我们是将Unmarshal接口中的第一个参数pack.MsgBytes修改为pack.Message.Payload这样既可将agent端发送的数据在aggregator正确解析;

一个关于heka采集系统的问题

声明:以上内容来自用户投稿及互联网公开渠道收集整理发布,本网站不拥有所有权,未作人工编辑处理,也不承担相关法律责任,若内容有误或涉及侵权可进行投诉: 投诉/举报 工作人员会在5个工作日内联系你,一经查实,本站将立刻删除涉嫌侵权内容。